adi_3dtof_image_stitching package from adi_3dtof_image_stitching repo

Package Summary

| Tags | No category tags. |

| Version | 2.1.0 |

| License | BSD |

| Build type | AMENT_CMAKE |

| Use | RECOMMENDED |

Repository Summary

| Checkout URI | https://github.com/analogdevicesinc/adi_3dtof_image_stitching.git |

| VCS Type | git |

| VCS Version | humble-devel |

| Last Updated | 2024-10-27 |

| Dev Status | MAINTAINED |

| CI status | No Continuous Integration |

| Released | UNRELEASED |

| Tags | No category tags. |



Maintainers

- Analog Devices

Authors

Analog Devices 3DToF Image Stitching

Overview

The ADI 3DToF Image Stitching is a ROS (Robot Operating System) package for stitching depth images from multiple Time-of-Flight sensors such as ADI’s ADTF3175D ToF sensor. This node subscribes to the Depth and IR images captured by multiple ADI 3DToF ADTF31xx nodes, stitches them into a single expanded field of view, and publishes the stitched Depth, IR and PointCloud as ROS topics. In real-time mode on an AAEON BOXER-8250AI, stitching inputs from 4 EVAL-ADTF3175D Sensor Modules, the node publishes stitched Depth and IR images at 2048x512 resolution (16 bits per pixel) at 10 FPS, giving an expanded FOV of 278 degrees. The stitched point cloud is also published at 10 FPS alongside the stitched Depth and IR frames.
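As a quick sanity check of these figures, the arithmetic below compares the pixel count of four 512x512 inputs with the 2048x512 stitched frame. It also estimates the raw bandwidth of one stitched 16-bit topic at 10 FPS. This is a back-of-the-envelope sketch only. It ignores compression, ROS message overhead and the point cloud topic.

```python
# Figures from the overview; 16-bit samples means 2 bytes per pixel.
INPUT_W, INPUT_H = 512, 512      # per-sensor Depth/IR resolution
OUTPUT_W, OUTPUT_H = 2048, 512   # stitched Depth/IR resolution
NUM_CAMERAS = 4
BYTES_PER_PIXEL = 2
FPS = 10


def frame_bytes(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> int:
    """Size of one uncompressed image in bytes."""
    return width * height * bytes_per_pixel


input_pixels = NUM_CAMERAS * INPUT_W * INPUT_H
output_pixels = OUTPUT_W * OUTPUT_H
stitched_frame_bytes = frame_bytes(OUTPUT_W, OUTPUT_H)
# Raw data rate of one stitched topic (Depth or IR), in MiB/s.
stitched_stream_mib_s = stitched_frame_bytes * FPS / 2**20

print(input_pixels, output_pixels)   # 1048576 1048576
print(stitched_frame_bytes)          # 2097152
print(stitched_stream_mib_s)         # 20.0
```

The stitched frame therefore carries the same number of pixels as the four inputs combined. Each uncompressed stitched image topic runs at about 20 MiB/s before message overhead.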

Background

- Supported Time-of-flight boards: ADTF3175D

- Supported ROS and OS distro: Humble (Ubuntu 22.04 and Ubuntu 20.04 (Source Build))

- Supported platform: armV8 64-bit (arm64) and Intel Core x86_64 (amd64) processors (Core i3, Core i5 and Core i7)

Hardware

For the tested setup with GPU inference support, the following are used:

- 4 x EVAL-ADTF3175D Modules

- 1 x AAEON BOXER-8250AI

- 1 x External 12V power supply

- 4 x USB Type-C to Type-A cables with 5 Gbps data speed support

Minimum requirements for a test setup on a host laptop/computer (CPU):

- 2 x EVAL-ADTF3175D Modules

- Host laptop with an Intel i5 or higher CPU running Ubuntu 20.04 LTS, or WSL2 with Ubuntu 20.04

- 2 x USB Type-C to Type-A cables with 5 Gbps data speed support

- USB power hub

:memo: _Note: Refer to the User Guide to ensure the eval module has an adequate power supply during operation._

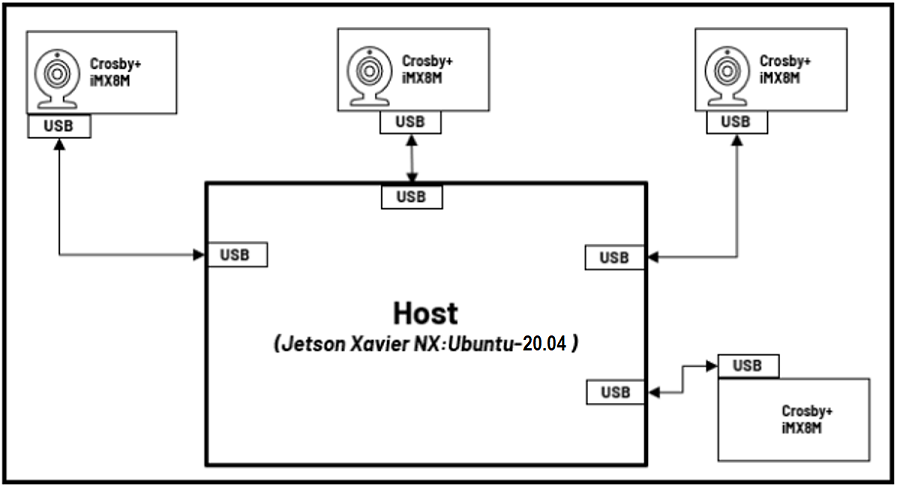

The image below shows the connection diagram of the setup (with labels):

The image below shows the actual setup used (for reference):

Hardware setup

Follow the steps below to get the hardware setup ready:

- Set up the ToF devices with the adi_3dtof_adtf31xx_sensor node, following the steps mentioned in the repository.

- Ensure all devices are running at 10 fps by following these steps.

- Position the cameras as per the dimensions shown in the diagram below.

:memo: Notes:

- Please contact the Maintainers to get the CAD design for the baseplate setup shown above.

- Finally, connect the devices to the host (Linux PC or AAEON BOXER-8250AI) using USB cables as shown below.

Software

Software Dependencies

Assumptions before building this package:

- Linux system or WSL2 (only Simulation Mode supported) running Ubuntu 22.04 LTS

- ROS2 Humble: If not installed, follow these steps.

- Set up a colcon workspace (with the workspace folder named “ros2_ws”).

- System date/time is updated: make sure the date/time is set correctly before compiling and running the application. Connecting to a WiFi network usually ensures this.

- An NTP server is set up for device synchronization. If not, refer to this link to set up an NTP server on the host.

Software Requirements for Running on AAEON BOXER-8250AI:

- NVIDIA JetPack OS 5.0.2.

- CUDA 11.4. It comes preinstalled with JetPack OS. If not, follow these steps.

Clone

- Clone the repo and check out the correct release branch/tag into the ros2 workspace directory

$ cd ~/ros2_ws/src

$ git clone https://github.com/analogdevicesinc/adi_3dtof_image_stitching.git -b v2.0.0

Build

Source the ROS environment first:

$ source /opt/ros/<ROS version>/setup.bash

Where:

- <ROS version> is your installed ROS distribution (for example, humble)

Then:

For setup with GPU inference support using CUDA:

$ cd ~/ros2_ws/

$ colcon build --cmake-target clean

$ colcon build --symlink-install --cmake-args -DCMAKE_BUILD_TYPE=Release -DENABLE_GPU=True --event-handlers console_direct+

$ source install/setup.bash

For setup with CPU inference support using OpenMP:

$ cd ~/ros2_ws/

$ colcon build --cmake-target clean

$ colcon build --symlink-install --cmake-args -DCMAKE_BUILD_TYPE=Release --event-handlers console_direct+

$ source install/setup.bash
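If you switch between the GPU and CPU builds often, the two command sequences above can be wrapped in a small script. This is a convenience sketch, not part of the package. It assumes the workspace lives at ~/ros2_ws and that colcon is on PATH, meaning the ROS environment has already been sourced. A Python process cannot source install/setup.bash into your shell, so you still have to run that last step by hand.

```python
import subprocess
from pathlib import Path

# Workspace location assumed throughout this README.
WORKSPACE = Path.home() / "ros2_ws"


def colcon_build_command(enable_gpu: bool) -> list[str]:
    """Return the colcon build invocation used in the Build section."""
    cmake_args = ["-DCMAKE_BUILD_TYPE=Release"]
    if enable_gpu:
        cmake_args.append("-DENABLE_GPU=True")  # CUDA build; omitted for the OpenMP (CPU) build
    return [
        "colcon", "build", "--symlink-install",
        "--cmake-args", *cmake_args,
        "--event-handlers", "console_direct+",
    ]


def build(enable_gpu: bool) -> None:
    """Clean, then build; check=True stops at the first failing command."""
    subprocess.run(["colcon", "build", "--cmake-target", "clean"], cwd=WORKSPACE, check=True)
    subprocess.run(colcon_build_command(enable_gpu), cwd=WORKSPACE, check=True)
```

For example, `build(enable_gpu=True)` reproduces the CUDA sequence and `build(enable_gpu=False)` reproduces the OpenMP one.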

Preparing the sensors

Setting up the IP address

The default IP address of each sensor is 10.42.0.1, so each sensor must be given its own IP address to avoid conflicts. To do so, ssh into the sensor with the following credentials:

Username: analog Password: analog

- Update the “Address” field in the /etc/systemd/network/20-wired-usb0.network file.

- Update the server address in /etc/ntp.conf (server 10.4x.0.100 iburst).

- Reboot the device and log in with the new IP.
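You can script the renumbering so that every sensor gets a consistent set of values. The sketch below uses a hypothetical scheme that fits the “10.4x.0.x” pattern above:

- camera N uses the subnet 10.(41+N).0.0/24;
- the sensor is .1 and the host (NTP server) is .100;
- cam1 therefore keeps the default 10.42.0.1.

The /24 prefix length and the numbering are assumptions. Use whatever addressing your host's USB network interfaces are configured for.

```python
def sensor_network_settings(camera_index: int) -> dict[str, str]:
    """Config lines for camera `camera_index` (1..4) under a hypothetical 10.(41+N).0.x scheme."""
    if not 1 <= camera_index <= 4:
        raise ValueError("cam1..cam4 are supported")
    subnet = f"10.{41 + camera_index}.0"
    return {
        # Replaces the Address= value in /etc/systemd/network/20-wired-usb0.network.
        "address_line": f"Address={subnet}.1/24",
        # Goes in /etc/ntp.conf; the host is assumed to be .100 on each subnet.
        "ntp_line": f"server {subnet}.100 iburst",
        # Login target after the reboot.
        "ssh_target": f"analog@{subnet}.1",
    }


for n in range(1, 5):
    print(f"cam{n}:", sensor_network_settings(n))
```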

Sharing the launch file

The sensor modules may be missing the launch files that define the transforms of the sensors in 3D space. The image stitching algorithm needs these transforms to work correctly. To fix this, transfer the appropriate launch file from the table below to the corresponding sensor. Refer to the CAD diagram to determine each sensor's location and camera tag.

| Camera | Launch file |
|---|---|
| cam1 | adi_3dtof_adtf31xx_cam1_launch.py |
| cam2 | adi_3dtof_adtf31xx_cam2_launch.py |
| cam3 | adi_3dtof_adtf31xx_cam3_launch.py |
| cam4 | adi_3dtof_adtf31xx_cam4_launch.py |

To transfer a launch file (cam1 shown; repeat for each sensor with its own launch file and IP address):

$ cd ~/ros2_ws/src/adi_3dtof_image_stitching/launch

$ scp adi_3dtof_adtf31xx_cam1_launch.py analog@10.42.0.1:/home/analog/ros2_ws/adi_3dtof_adtf31xx/launch
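With four sensors, you repeat the same scp step once per camera. The helper below prints one scp command per camera, using the launch-file table above. The IP addresses in the example are hypothetical placeholders for the addresses you assigned. The remote directory is copied unchanged from the command above.

```python
import shlex

# Camera -> launch file, from the table above.
LAUNCH_FILES = {
    "cam1": "adi_3dtof_adtf31xx_cam1_launch.py",
    "cam2": "adi_3dtof_adtf31xx_cam2_launch.py",
    "cam3": "adi_3dtof_adtf31xx_cam3_launch.py",
    "cam4": "adi_3dtof_adtf31xx_cam4_launch.py",
}
REMOTE_LAUNCH_DIR = "/home/analog/ros2_ws/adi_3dtof_adtf31xx/launch"


def scp_commands(camera_ips: dict[str, str]) -> list[str]:
    """One shell-quoted scp command per camera; run them from the package's launch folder."""
    commands = []
    for camera, ip in camera_ips.items():
        if camera not in LAUNCH_FILES:
            raise KeyError(f"no launch file listed for {camera}")
        commands.append(shlex.join(["scp", LAUNCH_FILES[camera], f"analog@{ip}:{REMOTE_LAUNCH_DIR}"]))
    return commands


# Hypothetical addresses; substitute the ones configured on your sensors.
for cmd in scp_commands({"cam1": "10.42.0.1", "cam2": "10.43.0.1"}):
    print(cmd)
```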

- Ensure the RMW settings are updated on all the devices and the host to support multi-sensor use cases:

# Point the default RMW XML profile to the settings file in the "rmw_config" folder

$ export FASTRTPS_DEFAULT_PROFILES_FILE=~/ros2_ws/src/adi_3dtof_image_stitching/rmw_config/rmw_settings.xml

# Stop the ROS daemon so the profile change takes effect when it restarts

$ ros2 daemon stop

- Alternatively, complete the RMW steps above by running the “setup_rmw_settings.sh” script in the “rmw_config” folder:

$ cd ~/ros2_ws/src/adi_3dtof_image_stitching/rmw_config

$ chmod +x setup_rmw_settings.sh

$ source setup_rmw_settings.sh

Nodes

adi_3dtof_image_stitching_node

:memo: _Note: Topic names that contain a camera prefix use the names assigned to the EVAL-ADTF3175D camera modules. For example, with 2 cameras named cam1 and cam2, there are two subscribed depth_image topics: /cam1/depth_image for camera 1 and /cam2/depth_image for camera 2._

Published topics

These are the default topic names; they can be modified through ROS parameters.

- /adi_3dtof_image_stitching/depth_image: stitched 16-bit output depth image of size 2048X512

- /adi_3dtof_image_stitching/ir_image: stitched 16-bit output IR image of size 2048X512

- /adi_3dtof_image_stitching/point_cloud: point cloud 3D model of the stitched environment of size 2048X512X3

Subscriber topics

- **/<camera prefix>/ir_image**: 512X512 16-bit IR image from the sensor node

- **/<camera prefix>/depth_image**: 512X512 16-bit depth image from the sensor node

- **/<camera prefix>/camera_info**: camera information of the sensor

Parameters

:memo: Notes:

- If any of these parameters are not set/declared, default values will be used.

adi_3dtof_image_stitching ROS Node Parameters

- param_camera_prefixes (vector<string>, default: ["cam1","cam2","cam3","cam4"]): the camera names of the sensors connected for image stitching.

  - A minimum of 2 and a maximum of 4 sensors are currently supported, in a horizontal setup.

  - The camera names must match the camera names assigned in the adi_3dtof_adtf31xx sensor node for the respective sensors.

- param_camera_link (String, default: “adi_camera_link”): name of the camera link.

- param_enable_depth_ir_compression (Bool, default: True): indicates whether depth compression is enabled for the inputs coming from the adi_3dtof_adtf31xx sensor nodes.

  - Set this to “False” if depth compression is disabled.

- param_output_mode (int, default: 0): enables/disables saving of the stitched output.

  - Set this to 1 to enable saving the video output.

:memo: _Note: Enabling video output slows down the image stitching algorithm._

- param_out_file_name (String, default: “stitched_output.avi”): output location for the stitched video when “param_output_mode” is enabled.
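Unset parameters fall back to their defaults, so the effective configuration is the defaults overlaid with whatever the launch file sets. The Python sketch below models that rule together with the documented limit of 2 to 4 cameras. It illustrates the documented behaviour and is not the node's own parameter handling. Two checks are additions of this sketch: camera prefixes must be unique, and param_output_mode is restricted to the two documented values (0 and 1).

```python
import copy

# Defaults as documented above.
DEFAULTS = {
    "param_camera_prefixes": ["cam1", "cam2", "cam3", "cam4"],
    "param_camera_link": "adi_camera_link",
    "param_enable_depth_ir_compression": True,
    "param_output_mode": 0,
    "param_out_file_name": "stitched_output.avi",
}


def resolve_parameters(overrides=None):
    """Overlay user overrides (a dict or None) on the defaults and check the documented limits."""
    params = copy.deepcopy(DEFAULTS)  # never mutate the shared defaults
    params.update(overrides or {})
    prefixes = params["param_camera_prefixes"]
    if not 2 <= len(prefixes) <= 4:
        raise ValueError("between 2 and 4 camera prefixes are supported")
    if len(set(prefixes)) != len(prefixes):
        raise ValueError("camera prefixes must be unique")
    if params["param_output_mode"] not in (0, 1):
        raise ValueError("param_output_mode: 0 = no saving, 1 = save video")
    return params


# Three-camera rig, everything else left at its default.
print(resolve_parameters({"param_camera_prefixes": ["cam1", "cam2", "cam3"]}))
```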

Launch

Simulation Mode

To quickly test the image stitching algorithm, you can run the simulation (FILEIO) setup. Four adi_3dtof_adtf31xx sensor nodes run in FILEIO mode on recorded vectors and publish sensor Depth and IR data. The adi_3dtof_image_stitching node subscribes to and processes this data:

- adi_3dtof_adtf31xx reads the recorded binary files and publishes depth_image, ir_image and camera_info.

- The adi_3dtof_image_stitching node subscribes to the incoming data and runs image stitching algorithm on them.

- It then publishes stitched Depth and IR images, along with stitched Point-cloud.

:memo: Notes:

- Running the adi_3dtof_image_stitching node in Simulation mode requires the adi_3dtof_adtf31xx_sensor node to be set up beforehand.

- It is assumed that both adi_3dtof_image_stitching node and adi_3dtof_adtf31xx node are built in the location “~/ros2_ws/”.

- It is also assumed that the demo vectors folder “adi_3dtof_input_video_files” is copied to the location “~/ros2_ws/src/” (refer to “arg_in_file_name” in the launch files).

To proceed with the test, execute the following commands:

Terminal:

$ cd ~/ros2_ws/

$ source /opt/ros/humble/setup.bash

$ source install/setup.bash

$ ros2 launch adi_3dtof_image_stitching adi_3dtof_image_stitching_launch.py

Monitor the Output on Rviz2 Window

- Open an Rviz2 instance.

- In the Rviz2 window, add a display for “/adi_3dtof_image_stitching/depth_image” to monitor the stitched depth output.

- Add a display for “/adi_3dtof_image_stitching/ir_image” to monitor the stitched IR output.

- Add a display for “/adi_3dtof_image_stitching/point_cloud” to display the 3D point cloud.

Real-Time Mode

To test the stitching algorithm on a real-time setup, launch the adi_3dtof_image_stitching node in Host-Only mode. In this mode, the individual sensors connected to the host computer (Jetson NX host or laptop) publish their respective Depth and IR data independently in real time:

- Sensors will publish their respective Depth and IR frames of size 512X512 independently

- The adi_3dtof_image_stitching node subscribes to the incoming data from all sensors, synchronizes it and runs image stitching.

- The stitched output is then published as ROS messages, which can be viewed in the Rviz2 window.

- The stitched output can also be saved to a video file by setting the “param_output_mode” parameter to 1.
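The synchronization step is why the date/time on every device matters. The sketch below illustrates the general idea of approximate-time matching on timestamps. It takes the first camera as a reference and accepts a set of frames only if all their stamps fall within a tolerance. It is an illustration under that assumption, not the algorithm used inside adi_3dtof_image_stitching_node. The tolerance value is hypothetical.

```python
from bisect import bisect_left


def nearest(sorted_stamps: list[float], t: float) -> float:
    """Return the stamp in a non-empty sorted list that is closest to t."""
    i = bisect_left(sorted_stamps, t)
    candidates = sorted_stamps[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda s: abs(s - t))


def match_frames(stamps_by_camera: dict[str, list[float]], tolerance_s: float) -> list[dict[str, float]]:
    """Group frames whose timestamps (in seconds) lie within tolerance_s of each other.

    The first camera is the reference. Non-reference frames are not consumed,
    so one frame may appear in more than one group; a real synchronizer would avoid that.
    """
    cameras = list(stamps_by_camera)
    reference, others = cameras[0], cameras[1:]
    sorted_others = {c: sorted(stamps_by_camera[c]) for c in others}
    groups = []
    for t in sorted(stamps_by_camera[reference]):
        if any(not stamps for stamps in sorted_others.values()):
            break  # a camera with no frames means nothing can be matched
        group = {reference: t}
        group.update({c: nearest(s, t) for c, s in sorted_others.items()})
        if max(group.values()) - min(group.values()) <= tolerance_s:
            groups.append(group)
    return groups


# 10 FPS streams: the first two frames line up, the third pair is 50 ms apart.
in_sync = {"cam1": [0.0, 0.1, 0.2], "cam2": [0.003, 0.102, 0.25]}
print(len(match_frames(in_sync, tolerance_s=0.01)))  # 2

# cam2's clock is 1 s ahead, so nothing matches.
skewed = {"cam1": [0.0, 0.1, 0.2], "cam2": [1.0, 1.1, 1.2]}
print(match_frames(skewed, tolerance_s=0.01))  # []
```

The second case shows why a device with a wrong clock can stop stitching entirely: its frames never fall inside the matching window.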

To proceed with the test, first execute the following commands in four (4) different terminals (in sequence) to start image capture on the EVAL-ADTF3175D Modules.

:memo: Notes:

- This assumes a 4-camera setup giving a 278-degree FOV. Reduce the number of terminals accordingly for a 2- or 3-camera setup.

- Ensure the launch files below are available in the launch folder of the adi_3dtof_adtf31xx code on the devices. If not, follow these steps:

  - Copy the respective launch files from the launch folder of the adi_3dtof_image_stitching repository to the launch folder of the adi_3dtof_adtf31xx code on the devices.

  - Change the ‘arg_input_sensor_mode’ parameter value to 0 to run the node in real-time capture mode.

  - Rebuild the adi_3dtof_adtf31xx node on the device so it can access the new launch files.

Terminal 1:

$ ssh analog@[ip of cam1]

$ cd ~/ros2_ws/

$ source /opt/ros/humble/install/setup.bash

$ source install/setup.bash

$ ros2 launch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam1_launch.py

Terminal 2:

$ ssh analog@[ip of cam2]

$ cd ~/ros2_ws/

$ source /opt/ros/humble/install/setup.bash

$ source install/setup.bash

$ ros2 launch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam2_launch.py

Terminal 3:

$ ssh analog@[ip of cam3]

$ cd ~/ros2_ws/

$ source /opt/ros/humble/install/setup.bash

$ source install/setup.bash

$ ros2 launch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam3_launch.py

Terminal 4:

$ ssh analog@[ip of cam4]

$ cd ~/ros2_ws/

$ source /opt/ros/humble/install/setup.bash

$ source install/setup.bash

$ ros2 launch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam4_launch.py

:memo: _Notes:_

- It is assumed that the adi_3dtof_adtf31xx nodes are already built on the EVAL-ADTF3175D Modules, in the location “~/ros2_ws/” within each sensor module.

- It is assumed that the respective sensor launch files from the adi_3dtof_image_stitching package have been copied to the launch folder of the adi_3dtof_adtf31xx package on each sensor, and that the input mode has been changed to 0 (it is 2 by default).

- The credentials to log in to the devices are given below:

username: analog password: analog

Next, run the adi_3dtof_image_stitching node on the host in Host-Only mode by executing the following commands:

Terminal 5:

$ cd ~/ros2_ws/

$ source /opt/ros/humble/setup.bash

$ source install/setup.bash

$ ros2 launch adi_3dtof_image_stitching adi_3dtof_image_stitching_host_only_launch.py

:memo: Notes:

- It is assumed that the adi_3dtof_image_stitching node is built in the location “~/ros2_ws/”.

- Make sure the date/time is set correctly on all the devices. This application uses the topic timestamps for synchronization, so if the time is not set correctly the application will not run.

- If the image stitching node is not subscribing to or processing the depth and IR data published by the connected sensors, check the following:

  - The camera name prefixes of the sensor topics match the names listed in the param_camera_prefixes parameter of the image stitching launch file.

  - If the topics published by the sensors are uncompressed, the param_enable_depth_ir_compression parameter is set to False in the launch file of the image stitching node.
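For the first check, compare what is actually being published with what the stitching node expects. The sketch below builds the expected subscriber topic names (/<prefix>/depth_image, /<prefix>/ir_image, /<prefix>/camera_info) from a prefix list. It then reports which of them are missing from the output of `ros2 topic list`. The sketch also counts a transport sub-topic such as .../compressedDepth as evidence that the base topic exists. That is an assumption about how compressed images appear on your system.

```python
import subprocess

SUFFIXES = ("depth_image", "ir_image", "camera_info")


def expected_topics(prefixes: list[str]) -> list[str]:
    """Topics the stitching node subscribes to, one set per camera prefix."""
    return [f"/{p}/{suffix}" for p in prefixes for suffix in SUFFIXES]


def missing_topics(topic_list_output: str, prefixes: list[str]) -> list[str]:
    """Expected topics absent from `ros2 topic list` output (one topic per line)."""
    available = [line.strip() for line in topic_list_output.splitlines() if line.strip()]

    def present(topic: str) -> bool:
        # Assumption: a transport sub-topic (e.g. /cam1/depth_image/compressedDepth) also counts.
        return any(a == topic or a.startswith(topic + "/") for a in available)

    return [t for t in expected_topics(prefixes) if not present(t)]


def check_live_system(prefixes: list[str]) -> list[str]:
    """Run `ros2 topic list` in a sourced ROS 2 environment and return the missing topics."""
    result = subprocess.run(["ros2", "topic", "list"], capture_output=True, text=True, check=True)
    return missing_topics(result.stdout, prefixes)


# A sensor launched as "camera2" instead of "cam2" shows up as three missing topics.
sample = "/cam1/depth_image\n/cam1/ir_image\n/cam1/camera_info\n/camera2/depth_image\n/rosout\n"
print(missing_topics(sample, ["cam1", "cam2"]))
```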

Monitor the Output on Rviz2 Window

- Open an Rviz2 instance.

- In the Rviz2 window, add a display for “/adi_3dtof_image_stitching/depth_image” to monitor the stitched depth output.

- Add a display for “/adi_3dtof_image_stitching/ir_image” to monitor the stitched IR output.

- Add a display for “/adi_3dtof_image_stitching/point_cloud” to display the 3D point cloud.

Limitations

- Currently a maximum of 4 sensors and a minimum of 2 sensors are supported for stitching, in the horizontal setup proposed.

- Real-time Operation is not currently supported on WSL2 setups.

- The AAEON BOXER-8250AI slows down as it heats up, so proper cooling is necessary.

- Subscribing to stitched point cloud for real-time display might slow down the algorithm operation.

Known Issues

- When using WSL2 on a Windows system to run the simulation mode demo, the auto-spawned Rviz2 window may stop in some cases. If this happens, open Rviz2 again from a new terminal and subscribe to the necessary topics to continue. Steps are mentioned here.

Support

Please contact the Maintainers if you want to evaluate the algorithm for your own setup/configuration.

Any other inquiries are also welcome.


adi_3dtof_image_stitching package from adi_3dtof_image_stitching repo

Package Summary

| Tags | No category tags. |

| Version | 1.1.0 |

| License | BSD |

| Build type | CATKIN |

| Use | RECOMMENDED |

Repository Summary

| Checkout URI | https://github.com/analogdevicesinc/adi_3dtof_image_stitching.git |

| VCS Type | git |

| VCS Version | noetic-devel |

| Last Updated | 2024-10-18 |

| Dev Status | MAINTAINED |

| CI status | Continuous Integration |

| Released | RELEASED |

| Tags | No category tags. |



Maintainers

- Analog Devices

Authors

Analog Devices 3DToF Image Stitching

Overview

The ADI 3DToF Image Stitching is a ROS (Robot Operating System) package for stitching depth images from multiple Time-Of-Flight sensors like ADI’s ADTF3175D ToF sensor. This node subscribes to captured Depth and IR images from multiple ADI 3DToF ADTF31xx nodes, stitches them to create a single expanded field of view and publishes the stitched Depth, IR and PointCloud as ROS topics. The node publishes Stitched Depth and IR Images at 2048x512 (16 bits per image) resolution @ 10FPS in realtime mode on AAEON BOXER-8250AI, while stitching inputs from 4 different EVAL-ADTF3175D Sensor Modules and giving an expanded FOV of 278 Degrees. Along with the Stitched Depth and IR frames, the Stitched Point Cloud is also published at 10FPS.

Background

- Supported Time-of-flight boards: ADTF3175D

- Supported ROS and OS distro: Noetic (Ubuntu 20.04)

- Supported platform: armV8 64-bit (arm64) and Intel Core x86_64 (amd64) processors (Core i3, Core i5 and Core i7)

Hardware

For the tested setup with GPU inference support, the following are used:

- 4 x EVAL-ADTF3175D Modules

- 1 x AAEON BOXER-8250AI

- 1 x External 12V power supply

- 4 x USB Type-C to Type-A cables with 5 Gbps data speed support

Minimum requirements for a test setup on a host laptop/computer (CPU):

- 2 x EVAL-ADTF3175D Modules

- Host laptop with an Intel i5 or higher CPU running Ubuntu 20.04 LTS, or WSL2 with Ubuntu 20.04

- 2 x USB Type-C to Type-A cables with 5 Gbps data speed support

- USB power hub

:memo: _Note: Refer to the User Guide to ensure the eval module has an adequate power supply during operation._

The image below shows the connection diagram of the setup (with labels):

The image below shows the actual setup used (for reference):

Hardware setup

Follow the steps below to get the hardware setup ready:

- Set up the ToF devices with the adi_3dtof_adtf31xx_sensor node, following the steps mentioned in the repository.

- Ensure all devices are running at 10 fps by following these steps.

- Position the cameras as per the dimensions shown in the diagram below.

:memo: Notes:

- Please contact the Maintainers to get the CAD design for the baseplate setup shown above.

- Finally, connect the devices to the host (Linux PC or AAEON BOXER-8250AI) using USB cables as shown below.

Software

Software Dependencies

Assumptions before building this package:

- Linux system or WSL2 (only Simulation Mode supported) running Ubuntu 20.04 LTS

- ROS Noetic: If not installed, follow these steps.

- Set up a catkin workspace (with the workspace folder named “catkin_ws”). If not done, follow these steps.

- System date/time is updated: make sure the date/time is set correctly before compiling and running the application. Connecting to a WiFi network usually ensures this.

- An NTP server is set up for device synchronization. If not, refer to this link to set up an NTP server on the host.

Software Requirements for Running on AAEON BOXER-8250AI:

- NVIDIA JetPack OS 5.0.2.

- CUDA 11.4. It comes preinstalled with JetPack OS. If not, follow these steps.

Clone

- Clone the repo and check out the correct release branch/tag into the catkin workspace directory

$ cd ~/catkin_ws/src

$ git clone https://github.com/analogdevicesinc/adi_3dtof_image_stitching.git -b v1.1.0

Setup

Build

Source the ROS environment first:

$ source /opt/ros/<ROS version>/setup.bash

Where:

- <ROS version> is your installed ROS distribution (for example, noetic)

Then:

For setup with GPU inference support using CUDA:

$ cd ~/catkin_ws/

$ catkin_make clean

$ catkin_make -DENABLE_GPU=true -DCMAKE_BUILD_TYPE=Release -j2

$ source devel/setup.bash

For setup with CPU inference support using OpenMP:

$ cd ~/catkin_ws/

$ catkin_make clean

$ catkin_make -DCMAKE_BUILD_TYPE=Release -j2

$ source devel/setup.bash

Preparing the sensors

Setting up the IP address

The default IP address of each sensor is 10.42.0.1, so each sensor must be given its own IP address to avoid conflicts. To do so, ssh into the sensor with the following credentials:

Username: analog Password: analog

- Update the “Address” field in the /etc/systemd/network/20-wired-usb0.network file.

- Update the ROS_MASTER_URI and ROS_IP values in ~/.bashrc. For example:

$ export ROS_MASTER_URI=http://10.43.0.100:11311

$ export ROS_IP=10.43.0.1

- Update the server address in /etc/ntp.conf (server 10.4x.0.100 iburst).

- Reboot the device and log in with the new IP.
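The ~/.bashrc values follow directly from the subnet chosen for each sensor. The helper below reproduces the example above for a given second octet. It assumes, as that example does, that the ROS master runs on the host at .100 on the default port 11311 and that the sensor is .1.

```python
def ros1_network_exports(second_octet: int, host: int = 100, sensor: int = 1) -> list[str]:
    """~/.bashrc lines for a sensor on the 10.<second_octet>.0.x subnet."""
    subnet = f"10.{second_octet}.0"
    return [
        f"export ROS_MASTER_URI=http://{subnet}.{host}:11311",  # ROS master on the host
        f"export ROS_IP={subnet}.{sensor}",                      # this sensor's own address
    ]


print("\n".join(ros1_network_exports(43)))
```

Append the printed lines to ~/.bashrc on the matching sensor and open a new shell so they take effect.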

Sharing the launch file

The sensor modules may be missing the launch files that define the transforms of the sensors in 3D space. The image stitching algorithm needs these transforms to work correctly. To fix this, transfer the appropriate launch file from the table below to the corresponding sensor. Refer to the CAD diagram to determine each sensor's location and camera tag.

| Camera | Launch file |
|---|---|
| cam1 | adi_3dtof_adtf31xx_cam1.launch |
| cam2 | adi_3dtof_adtf31xx_cam2.launch |
| cam3 | adi_3dtof_adtf31xx_cam3.launch |
| cam4 | adi_3dtof_adtf31xx_cam4.launch |

To transfer a launch file (cam1 shown; repeat for each sensor with its own launch file and IP address):

$ cd ~/catkin_ws/src/adi_3dtof_image_stitching/launch

$ scp adi_3dtof_adtf31xx_cam1.launch analog@10.42.0.1:/home/analog/catkin_ws/adi_3dtof_adtf31xx/launch

Nodes

adi_3dtof_image_stitching_node

:memo: _Note: Topic names that contain a camera prefix use the names assigned to the EVAL-ADTF3175D camera modules. For example, with 2 cameras named cam1 and cam2, there are two subscribed depth_image topics: /cam1/depth_image for camera 1 and /cam2/depth_image for camera 2._

Published topics

These are the default topic names; they can be modified through ROS parameters.

- /adi_3dtof_image_stitching/depth_image: stitched 16-bit output depth image of size 2048X512

- /adi_3dtof_image_stitching/ir_image: stitched 16-bit output IR image of size 2048X512

- /adi_3dtof_image_stitching/point_cloud: point cloud 3D model of the stitched environment of size 2048X512X3

Subscriber topics

- **/<camera prefix>/ir_image**: 512X512 16-bit IR image from the sensor node

- **/<camera prefix>/depth_image**: 512X512 16-bit depth image from the sensor node

- **/<camera prefix>/camera_info**: camera information of the sensor

Parameters

:memo: Notes:

- If any of these parameters are not set/declared, default values will be used.

adi_3dtof_image_stitching ROS Node Parameters

- param_camera_prefixes (vector<string>, default: ["cam1","cam2","cam3","cam4"]): the camera names of the sensors connected for image stitching.

  - A minimum of 2 and a maximum of 4 sensors are currently supported, in a horizontal setup.

  - The camera names must match the camera names assigned in the adi_3dtof_adtf31xx sensor node for the respective sensors.

- param_camera_link (String, default: “adi_camera_link”): name of the camera link.

- image_transport (String, default: “compressedDepth”): indicates whether depth compression is enabled for the inputs coming from the adi_3dtof_adtf31xx sensor nodes.

  - Set this to “raw” if depth compression is disabled.

- param_output_mode (int, default: 0): enables/disables saving of the stitched output.

  - Set this to 1 to enable saving the video output.

:memo: _Note: Enabling video output slows down the image stitching algorithm._

- param_out_file_name (String, default: “stitched_output.avi”): output location for the stitched video when “param_output_mode” is enabled.

Launch

Simulation Mode

To quickly test the image stitching algorithm, you can run the simulation (FILEIO) setup. Four adi_3dtof_adtf31xx sensor nodes run in FILEIO mode on recorded vectors and publish sensor Depth and IR data. The adi_3dtof_image_stitching node subscribes to and processes this data:

- adi_3dtof_adtf31xx reads the recorded binary files and publishes depth_image, ir_image and camera_info.

- The adi_3dtof_image_stitching node subscribes to the incoming data and runs image stitching algorithm on them.

- It then publishes stitched Depth and IR images, along with stitched Point-cloud.

The adi_3dtof_input_video_files folder contains the binary video files, which can be obtained by running the get_video.sh script provided with the release. Place this folder in the source folder of your workspace, for example ~/catkin_ws/src.

:memo: Notes:

- Running the adi_3dtof_image_stitching node in Simulation mode requires the adi_3dtof_adtf31xx_sensor node to be set up beforehand.

- It is assumed that both adi_3dtof_image_stitching node and adi_3dtof_adtf31xx node are built in the location “~/catkin_ws/”.

- It is also assumed that the demo vectors folder “adi_3dtof_input_video_files” is copied to the location “~/catkin_ws/src/” (refer to “arg_in_file_name” in the launch files).
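Before launching, you can confirm that the demo vectors are where the launch files expect them. The file names below are the arg_in_file_name defaults listed for the cam1 to cam4 launch files in the Launch files section of this page. Those defaults resolve to ~/catkin_ws/src/adi_3dtof_input_video_files. If you override arg_in_file_name, adjust the list accordingly.

```python
from pathlib import Path

# Default arg_in_file_name values from the cam1..cam4 launch files.
DEMO_FILES = {
    "cam1": "adi_3dtof_height_170mm_yaw_135degrees_cam1.bin",
    "cam2": "adi_3dtof_height_170mm_yaw_67_5degrees_cam2.bin",
    "cam3": "adi_3dtof_height_170mm_yaw_0degrees_cam3.bin",
    "cam4": "adi_3dtof_height_170mm_yaw_minus_67_5degrees_cam4.bin",
}


def missing_demo_files(workspace_src=Path.home() / "catkin_ws" / "src") -> list[str]:
    """Names of demo vector files not found under <workspace_src>/adi_3dtof_input_video_files."""
    folder = Path(workspace_src) / "adi_3dtof_input_video_files"
    return [name for name in DEMO_FILES.values() if not (folder / name).is_file()]


missing = missing_demo_files()
print("all demo vectors found" if not missing else f"missing: {missing}")
```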

To proceed with the test, execute the following commands:

Terminal:

$ cd ~/catkin_ws/

$ source /opt/ros/noetic/setup.bash

$ source devel/setup.bash

$ roslaunch adi_3dtof_image_stitching adi_3dtof_image_stitching.launch

When running the launch file, ensure that the sensor mode of all the dependent launch files is set to 2, which runs them in FILEIO mode.

Monitor the Output on Rviz Window

:memo: Notes:

- In the Rviz window, add a display for “/adi_3dtof_image_stitching/depth_image” to monitor the stitched depth output.

- Add a display for “/adi_3dtof_image_stitching/ir_image” to monitor the stitched IR output.

- Add a display for “/adi_3dtof_image_stitching/point_cloud” to display the 3D point cloud.

Real-Time Mode

To run the stitching algorithm on a real-time setup, launch the adi_3dtof_image_stitching node in Host-Only mode. In this mode, the individual sensors connected to the host computer (Jetson NX host or laptop) publish their respective Depth and IR data independently in real time:

- Sensors will publish their respective Depth and IR frames of size 512X512 independently

- The adi_3dtof_image_stitching node subscribes to the incoming data from all sensors, synchronizes it and runs image stitching.

- The stitched output is then published as ROS messages, which can be viewed in the Rviz window.

- The stitched output can also be saved to a video file by setting the “param_output_mode” parameter to 1.

To proceed with the test, first execute the following commands in four (4) different terminals (in sequence) to start image capture on the EVAL-ADTF3175D Modules:

:memo: Note: This assumes a 4-camera setup giving a 278-degree FOV. Reduce the number of terminals accordingly for a 2- or 3-camera setup.

Terminal 1:

$ ssh analog@[ip of cam1]

$ cd ~/catkin_ws/

$ source /opt/ros/noetic/setup.bash

$ source devel/setup.bash

$ roslaunch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam1.launch

Terminal 2:

$ ssh analog@[ip of cam2]

$ cd ~/catkin_ws/

$ source /opt/ros/noetic/setup.bash

$ source devel/setup.bash

$ roslaunch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam2.launch

Terminal 3:

$ ssh analog@[ip of cam3]

$ cd ~/catkin_ws/

$ source /opt/ros/noetic/setup.bash

$ source devel/setup.bash

$ roslaunch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam3.launch

Terminal 4:

$ ssh analog@[ip of cam4]

$ cd ~/catkin_ws/

$ source /opt/ros/noetic/setup.bash

$ source devel/setup.bash

$ roslaunch adi_3dtof_adtf31xx adi_3dtof_adtf31xx_cam4.launch

:memo: _Notes:_

- It is assumed that the adi_3dtof_adtf31xx nodes are already built on the EVAL-ADTF3175D Modules, in the location “~/catkin_ws/” within each sensor module.

- The credentials to log in to the devices are given below:

username: analog password: analog

Next, run the adi_3dtof_image_stitching node on the host in Host-Only mode by executing the following commands:

Terminal 5:

$ cd ~/catkin_ws/

$ source /opt/ros/noetic/setup.bash

$ source devel/setup.bash

$ roslaunch adi_3dtof_image_stitching adi_3dtof_image_stitching_host_only.launch

Remember to edit the arg_camera_prefixes argument in the launch file to match the number of sensors used. For example, with three sensors instead of four, edit the line as follows:

Before

<arg name="arg_camera_prefixes" default="[$(arg ns_prefix_cam1),$(arg ns_prefix_cam2),$(arg ns_prefix_cam3),$(arg ns_prefix_cam4)]"/>

After

<arg name="arg_camera_prefixes" default="[$(arg ns_prefix_cam1),$(arg ns_prefix_cam2),$(arg ns_prefix_cam3)]"/>

Simply drop the corresponding camera from the list.
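If you change the camera count often, you can generate the argument line instead of editing it by hand. The helper below reproduces the Before/After lines above. It drops cameras from the end of the list, so if you remove a camera other than the last one, you still need to edit the list manually.

```python
def camera_prefixes_arg(num_cameras: int) -> str:
    """arg_camera_prefixes line for cam1..cam<num_cameras> (2 to 4 cameras supported)."""
    if not 2 <= num_cameras <= 4:
        raise ValueError("between 2 and 4 cameras are supported")
    items = ",".join(f"$(arg ns_prefix_cam{i})" for i in range(1, num_cameras + 1))
    return f'<arg name="arg_camera_prefixes" default="[{items}]"/>'


print(camera_prefixes_arg(3))
```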

:memo: Note: It is assumed that the adi_3dtof_image_stitching node is built in the location “~/catkin_ws/”.

Monitor the Output on Rviz Window

:memo: Notes:

- In the Rviz window, add a display for “/adi_3dtof_image_stitching/depth_image” to monitor the stitched depth output.

- Add a display for “/adi_3dtof_image_stitching/ir_image” to monitor the stitched IR output.

- Add a display for “/adi_3dtof_image_stitching/point_cloud” to display the 3D point cloud.

Limitations

- Currently a maximum of 4 sensors and a minimum of 2 sensors are supported for stitching, in the horizontal setup proposed.

- Real-time Operation is not currently supported on WSL2 setups.

- The AAEON BOXER-8250AI slows down as it heats up, so proper cooling is necessary.

- Subscribing to stitched point cloud for real-time display might slow down the algorithm operation.

Support

Please contact the Maintainers if you want to evaluate the algorithm for your own setup/configuration.

Any other inquiries are also welcome.


Package Dependencies

| Deps | Name |

|---|---|

| roscpp | |

| cv_bridge | |

| std_msgs | |

| sensor_msgs | |

| eigen_conversions | |

| image_geometry | |

| image_transport | |

| compressed_depth_image_transport | |

| tf2 | |

| tf2_ros | |

| tf2_geometry_msgs | |

| pcl_ros | |

| image_view | |

| catkin | |


Launch files

- launch/adi_3dtof_image_stitching_host_only.launch
  - ns_prefix_stitch [default: adi_3dtof_image_stitching]
  - ns_prefix_cam1 [default: cam1]
  - ns_prefix_cam2 [default: cam2]
  - ns_prefix_cam3 [default: cam3]
  - ns_prefix_cam4 [default: cam4]
  - arg_camera_prefixes [default: [$(arg ns_prefix_cam1),$(arg ns_prefix_cam2),$(arg ns_prefix_cam3),$(arg ns_prefix_cam4)]]
  - stitched_cam_base_frame_optical [default: stitch_frame_link_optical]
  - stitched_cam_base_frame [default: stitch_frame_link]
  - compression_parameter [default: compressedDepth]
  - arg_output_mode [default: 0]
  - arg_out_file_name [default: no filename]

- launch/adi_3dtof_adtf31xx_cam1.launch
  - ns_launch_delay [default: 5.0]
  - ns_prefix_cam1 [default: cam1]
  - arg_input_sensor_mode [default: 0]
  - arg_in_file_name [default: $(find adi_3dtof_adtf31xx)/../adi_3dtof_input_video_files/adi_3dtof_height_170mm_yaw_135degrees_cam1.bin]
  - arg_camera_height_from_ground_in_mtr [default: 0.15]
  - arg_enable_depth_ir_compression [default: 1]
  - cam1_base_frame_optical [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx_optical')]
  - cam1_base_frame [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx')]

- launch/adi_3dtof_adtf31xx_cam3.launch
  - ns_launch_delay [default: 5.0]
  - ns_prefix_cam1 [default: cam3]
  - arg_input_sensor_mode [default: 0]
  - arg_in_file_name [default: $(find adi_3dtof_adtf31xx)/../adi_3dtof_input_video_files/adi_3dtof_height_170mm_yaw_0degrees_cam3.bin]
  - arg_camera_height_from_ground_in_mtr [default: 0.15]
  - arg_enable_depth_ir_compression [default: 1]
  - cam1_base_frame_optical [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx_optical')]
  - cam1_base_frame [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx')]

- launch/adi_3dtof_adtf31xx_cam2.launch
  - ns_launch_delay [default: 5.0]
  - ns_prefix_cam1 [default: cam2]
  - arg_input_sensor_mode [default: 0]
  - arg_in_file_name [default: $(find adi_3dtof_adtf31xx)/../adi_3dtof_input_video_files/adi_3dtof_height_170mm_yaw_67_5degrees_cam2.bin]
  - arg_camera_height_from_ground_in_mtr [default: 0.15]
  - arg_enable_depth_ir_compression [default: 1]
  - cam1_base_frame_optical [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx_optical')]
  - cam1_base_frame [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx')]

- launch/adi_3dtof_image_stitching.launch

- launch/adi_3dtof_adtf31xx_cam4.launch
  - ns_launch_delay [default: 5.0]
  - ns_prefix_cam1 [default: cam4]
  - arg_input_sensor_mode [default: 0]
  - arg_in_file_name [default: $(find adi_3dtof_adtf31xx)/../adi_3dtof_input_video_files/adi_3dtof_height_170mm_yaw_minus_67_5degrees_cam4.bin]
  - arg_camera_height_from_ground_in_mtr [default: 0.15]
  - arg_enable_depth_ir_compression [default: 1]
  - cam1_base_frame_optical [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx_optical')]
  - cam1_base_frame [default: $(eval arg('ns_prefix_cam1') + '_adtf31xx')]
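The yaw angles encoded in the demo file names above are consistent with the 278-degree FOV quoted in the overview. Those angles are 135, 67.5, 0 and -67.5 degrees for cam1 to cam4. The check below rests on two assumptions: that these angles are the mounting yaws, and that each ADTF3175D sensor has a 75-degree horizontal FOV. Confirm the 75-degree figure against the ADTF3175 datasheet.

```python
def combined_hfov(yaws_deg, sensor_hfov_deg: float) -> float:
    """Horizontal coverage of a fan of cameras; only valid while neighbouring views overlap."""
    return max(yaws_deg) - min(yaws_deg) + sensor_hfov_deg


def adjacent_overlaps(yaws_deg, sensor_hfov_deg: float) -> list[float]:
    """Overlap in degrees between neighbouring cameras; a negative value would mean a gap."""
    ordered = sorted(yaws_deg)
    return [sensor_hfov_deg - (b - a) for a, b in zip(ordered, ordered[1:])]


YAWS = {"cam1": 135.0, "cam2": 67.5, "cam3": 0.0, "cam4": -67.5}  # from the demo file names
ASSUMED_HFOV = 75.0  # assumed per-sensor horizontal FOV

print(combined_hfov(YAWS.values(), ASSUMED_HFOV))      # 277.5
print(adjacent_overlaps(YAWS.values(), ASSUMED_HFOV))  # [7.5, 7.5, 7.5]
```

Under these assumptions, the combined coverage of 277.5 degrees rounds to the quoted 278 degrees. Each neighbouring pair of sensors shares a 7.5-degree strip.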