Package Summary

| Tags | No category tags. |

| Version | 0.2.5 |

| License | Apache 2 |

| Build type | CATKIN |

| Use | RECOMMENDED |

Repository Summary

| Checkout URI | https://github.com/lovepark/o3d3xx-ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2020-05-27 |

| Dev Status | UNMAINTAINED |

| CI status | No Continuous Integration |

| Released | UNRELEASED |

| Tags | No category tags. |

| Contributing |

Help Wanted (0)

Good First Issues (0) Pull Requests to Review (0) |

Package Description

Additional Links

Maintainers

- Tom Panzarella

Authors

- Tom Panzarella

:warning: DEPRECATION NOTICE: The libo3d3xx libary has been superceded by ifm3d. Similarly, this package has been deprecated in favor of ifm3d-ros which is also available in the ROS distribution (starting with ROS Kinetic)

o3d3xx-ros

o3d3xx-ros is a wrapper around libo3d3xx enabling the usage of IFM Efector O3D3xx ToF cameras from within ROS software systems.

Software Compatibility Matrix

| o3d3xx-ros version | libo3d3xx version | ROS distribution(s) |

|---|---|---|

| 0.1.3 | 0.1.9 | Indigo |

| 0.1.4 | 0.1.9 | Indigo |

| 0.1.5 | 0.1.11 | Indigo |

| 0.1.6 | 0.1.11 | Indigo |

| 0.1.7 | 0.2.0 | Indigo |

| 0.1.8 | 0.2.0 | Indigo |

| 0.2.0 | 0.3.0 | Indigo |

| 0.2.1 | 0.4.0 | Indigo |

| 0.2.2 | 0.4.0, 0.4.1, 0.4.2 | Indigo |

| 0.2.3 | 0.4.3 | Indigo, Kinetic |

| 0.2.4 | 0.4.4 | Indigo, Kinetic |

| 0.2.5 | 0.4.5, 0.4.6, 0.4.8, 0.4.9, 0.5.0, 0.6.0, 0.7.0 | Indigo, Kinetic |

Prerequisites

Additionally, your compiler must support C++11. This package has been validated with:

- g++ 4.8.2 on Ubuntu 14.04 LTS, ROS Indigo

- g++ 5.3.x on Ubuntu 16.04 LTS, ROS Kinetic

Building and Installing the Software

NOTE: Since we are talking about ROS here, we assume you are on Ubuntu Linux.

You should first ensure that you have installed ROS by following

these instructions. The

desktop-full installation is highly recommended.

Next, you should be sure to install

libo3d3xx. This ROS package assumes

you have installed libo3d3xx via the supported debian installer. Step-by-step

instructions for that process are located

here. If you already

have libo3d3xx installed you can skip this step. However, it is important to

point out that the table above denotes a software compatibility matrix and you

should be sure to link o3d3xx-ros against a compatible version of

libo3d3xx.

We now move on to building o3d3xx-ros.

Building and installing o3d3xx-ros is accomplished by utilizing the ROS catkin tool. There are many tutorials and other pieces of advice available on-line advising how to most effectively utilize catkin. However, the basic idea is to provide a clean separation between your source code repository and your build and runtime environments. The instructions that now follow represent how we choose to use catkin to build and permanently install a ROS package from source.

First, we need to decide where we want our software to ultimately be

installed. For purposes of this document, we will assume that we will install

our ROS packages at ~/ros. For convenience, we add the following to our

~/.bash_profile:

if [ -f /opt/ros/indigo/setup.bash ]; then

source /opt/ros/indigo/setup.bash

fi

cd ${HOME}

export LPR_ROS=${HOME}/ros

if [ -d ${LPR_ROS} ]; then

for i in $(ls ${LPR_ROS}); do

if [ -d ${LPR_ROS}/${i} ]; then

if [ -f ${LPR_ROS}/${i}/setup.bash ]; then

source ${LPR_ROS}/${i}/setup.bash --extend

fi

fi

done

fi

Next, we need to get the code from github. We assume we keep all of our git

repositories in ~/dev.

$ cd ~/dev

$ git clone https://github.com/lovepark/o3d3xx-ros.git

We now have the code in ~/dev/o3d3xx-ros. Next, we want to create a catkin

workspace that we can use to build and install that code from. It is the

catkin philosophy that we do not do this directly in the source directory.

$ cd ~/dev

$ mkdir o3d3xx-catkin

$ cd o3d3xx-catkin

$ mkdir src

$ cd src

$ catkin_init_workspace

$ ln -s ~/dev/o3d3xx-ros o3d3xx

So, you should have a catkin workspace set up to build the o3d3xx-ros code that looks basically like:

[ ~/dev/o3d3xx-catkin/src ]

tpanzarella@jelly: $ pwd

/home/tpanzarella/dev/o3d3xx-catkin/src

[ ~/dev/o3d3xx-catkin/src ]

tpanzarella@jelly: $ ls -l

total 0

lrwxrwxrwx 1 tpanzarella tpanzarella 49 Dec 2 15:26 CMakeLists.txt -> /opt/ros/indigo/share/catkin/cmake/toplevel.cmake

lrwxrwxrwx 1 tpanzarella tpanzarella 32 Dec 2 15:24 o3d3xx -> /home/tpanzarella/dev/o3d3xx-ros

Now we are ready to build the code.

$ cd ~/dev/o3d3xx-catkin

$ catkin_make -DCATKIN_ENABLE_TESTING=ON

$ catkin_make run_tests

$ catkin_make -DCMAKE_INSTALL_PREFIX=${LPR_ROS}/o3d3xx install

The ROS package should now be installed in ~/ros/o3d3xx. To test everything

out you should open a fresh bash shell, and start up a ROS core:

$ roscore

Open another shell and start the primary camera node:

$ roslaunch o3d3xx camera.launch ip:=192.168.10.69

NOTE: The IP address of your camera may differ. If you are using the

factory default (192.168.0.69), you do not need to specify it on the above

roslaunch line.

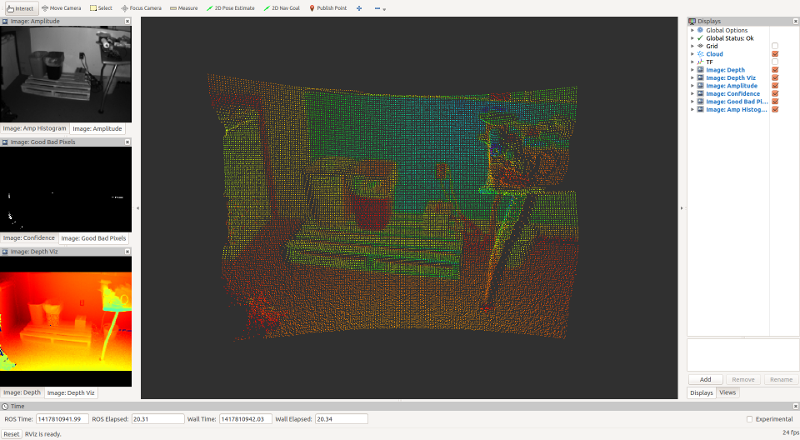

Open another shell and start the rviz node to visualize the data coming from the camera:

$ roslaunch o3d3xx rviz.launch

At this point, you should see an rviz window that looks something like:

Congratulations! You can now utilize o3d3xx-ros.

Nodes

/o3d3xx/camera

This node provides a real-time feed to the camera data. This node is started

from the primary camera.launch file:

$ roslaunch o3d3xx camera.launch

The naming of the camera can be customized via the ns (namespace) and nn

(node name) command line arguments passed to the camera.launch file. For

example, if you specify your roslaunch command as:

$ roslaunch o3d3xx camera.launch ns:=robot nn:=front_camera

The node will have the name /robot/front_camera in the ROS computation

graph.

Published Topics

| Topic | Message | Description |

|---|---|---|

| /o3d3xx/camera/amplitude | sensor_msgs/Image | 16-bit gray scale encoding of the sensor Amplitude image normalized wrt exposure time. |

| /o3d3xx/camera/raw_amplitude | sensor_msgs/Image | 16-bit gray scale encoding of the sensor Amplitude image non-normalized. |

| /o3d3xx/camera/cloud | sensor_msgs/PointCloud2 | A 3D PCL point cloud of point type `XYZI`. In this encoding the intensity channel is represented by the corresponding pixel's amplitude data. The units of this point cloud are in meters. |

| /o3d3xx/camera/confidence | sensor_msgs/Image | An 8-bit mono image encoding of the confidence image. The meaning of each bit of each pixel value is discussed in the official IFM documentation for the camera. |

| /o3d3xx/camera/depth | sensor_msgs/Image | A 16-bit mono image encoding of the radial depth map from the camera. The depth units are in millimeters. |

| /o3d3xx/camera/depth_viz | sensor_msgs/Image | A rendering of the depth image utilizing a colormap more human-friendly for visualization purposes. For performance reasons, messages are only published to this topic when the `publish_viz_images` parameter is set to true at launch time. |

| /o3d3xx/camera/good_bad_pixels | sensor_msgs/Image | A binary image showing good vs. bad pixels on the pixel array. Bad pixels can be caused by numerous reasons (e.g., motion blur over an integration/exposure timestep). Visualizing this data is useful for when you are tuning your imager parameters. For performance reasons, messages are only published to this topic when the `publish_viz_images` parameter is set to true at launch time. |

| /o3d3xx/camera/xyz_image | sensor_msgs/Image | An OpenCV image encoding (CV_16SC3) of the same point cloud that is published to `/o3d3xx/camera/cloud` where the three image planes are 0 = x, 1 = y, 2 = z. Units are in millimeters yet the coord frame is consistent with the point cloud. |

| /o3d3xx/camera/unit_vectors | sensor_msgs/Image | An OpenCV image encoding (CV_32FC3) of the rotated unit vectors that can be used together with the translation vector from the camera extrinsics and the radial distance image to compute the cartesian coordinates for each pixel in the imager array off-board the camera. This topic is latched. |

| /o3d3xx/camera/extrinsics | Extrinsics.msg | Extrinsics as reported by the camera. The translation vector here is used together with the unit vectors and the radial distance image to compute the Cartesian coordinates for each pixel in the imager array. It should be noted that for this message, the translational units are in mm and the rotational units are in degrees. This is to be consistent with the camera eventhough it is not necessarily consistent with ROS conventions. Usage of this message is really for very specialized use-cases. |

Advertised Services

| Service Name | Service Definition | Description |

|---|---|---|

| /o3d3xx/camera/Config | Config.srv | Mutates camera settings based upon an input JSON file. NOTE: Due to what appears to be limitations in the YAML parsing of the stock ROS `rosservice` command line tool (i.e., it does not handle JSON as string payload well) you will have to use the /o3d3xx/camera/config_node to configure the camera. This is explained in further detail below. |

| /o3d3xx/camera/Dump | Dump.srv | Dumps the current configuration of the camera to a JSON string. The output of this dump is suitable for editing and passing to the `Config` service for configuring the camera. |

| /o3d3xx/camera/GetVersion | GetVersion.srv | Returns the current version of the underlying libo3d3xx library that this ROS node is linked to. |

| /o3d3xx/camera/Rm | Rm.srv | Removes an application from the camera. This service will restrict removing the current active application. |

| /o3d3xx/camera/Trigger | Trigger.srv | Sends a software trigger signal to the camera. |

Parameters

| Name | Data Type | Description |

|---|---|---|

| ip | string | IP address of the camera |

| xmlrpc_port | int | TCP port the camera's XMLRPC server is listening on |

| password | string | Password to use to connect to the camera |

| schema_mask | uint16_t | Mask controlling which image types to stream back from the camera. This is useful for numerous reasons. It allows for conserving bandwidth between the host computer and the camera or lessens the CPU cycles required by the camera to compute image data which may result in an increased frame rate. See the o3d3xx-schema command line tool for generating custom masks. |

| timeout_millis | int | Time, in milliseconds, to block when waiting for a frame from the camera before timing out. |

| timeout_tolerance_secs | double | Time, in seconds, to wait before trying to restart the underlying framegrabber if it is currently experiencing timeouts while trying to capture image data. |

| publish_viz_images | bool | In general, for a runtime system, the core data a system will want from this camera include the `cloud`, `depth`, `amplitude`, and `confidence` images. This node will always publish those data. However, if you set this parameter to `true` a few additional images are published. These are `depth_viz` and `good_bad_pixels` (they are described above in the `Topics` section). These viz images are intended for human analysis and visualization in `rviz`. |

| assume_sw_trigger | bool | If your application intends to use software triggering (as opposed to a free-running framegrabber), this parameter does four things. First, it will not print out framegrabber timeout messages to the rosconsole. Second, it will set, a-priori, the `timeout_millis` parameter to 500 milliseconds (this is so that the node will release the framegrabber mutex in the main publishing loop). Third, this will set the `timeout_tolerance_secs` to 600 seconds so that the framegrabber is not constantly getting recycled unnecessarily. Fourth, it sets the `GLOG_minloglevel` environment variable to 3 (ERROR). This is so the underlying `libo3d3xx` library does not fill your log files with timeout messages which it emits at the WARNING level. |

| frame_id_base | string | tf frame prefix |

/o3d3xx/camera_tf

This node is of type tf/static_transform_publisher. It establishes a frame_id

for the camera in the global tf tree. This node is launched from the primary

camera.launch file:

$ roslaunch o3d3xx camera.launch

When run as above, the tf publishing node would be named /o3d3xx/camera_tf

and the camera coordinate frame would be o3d3xx/camera_link in the tf tree.

You can customize this naming via the frame_id_base arg or implicitly through

the ns (namespace) and nn (node name) command line arguments passed to the

camera.launch file. For example, if you specify your roslaunch command as:

$ roslaunch o3d3xx camera.launch ns:=robot nn:=front_camera

The node name will be /robot/front_camera_tf and the camera frame will

be robot/front_camera_link in the tf tree.

/o3d3xx/camera/config_node

This node is used as a proxy to simplify calling the /o3d3xx/camera/Config

service offered by the /o3d3xx/camera node. It was noted above that there

appears to be a limitation in the YAML parser of the ROS rosservice command

line tool. Specifically, it seems that it is not capable of assigning a JSON

string to a variable. This is the reason for this node. This is not a

long-running node but rather works like a typical command-line tool would: you

invoke it, it runs, and exits. The following command line will launch this

node:

$ roslaunch o3d3xx config.launch

Parameters

| Name | Data Type | Description |

|---|---|---|

| infile | string | By default, this node will read `stdin` for a JSON string to use to pass to the `/o3d3xx/camera/Config` service. However, if this parameter is specified it will read the JSON from this file. |

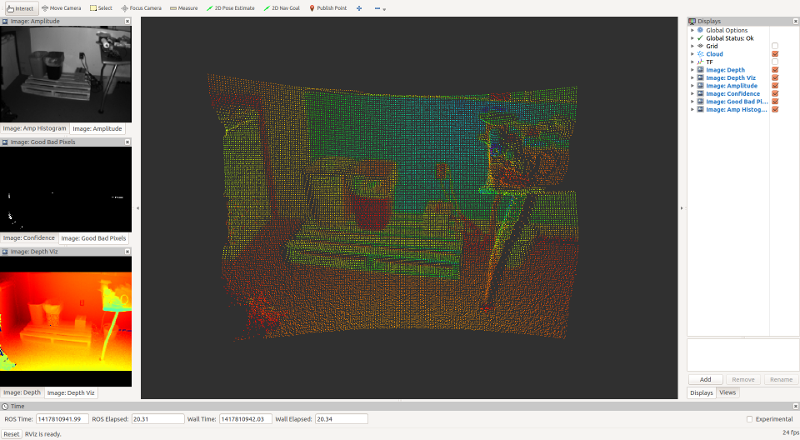

/rviz

This package offers a launch script that wraps the execution of rviz so that

the display will be conveniently configured for visualizing the

/o3d3xx/camera data. To launch this node:

$ roslaunch o3d3xx rviz.launch

Running the command as above will color the point cloud with the data from the normalized amplitude image (i.e., the intensity).

The rviz window should look something like (assuming you are coloring the point cloud with the intensity data):

/o3d3xx/camera/XXX_throttler

This package offers a launch script that wraps the

topic_tools/throttler node

so that it can throttle the core topics from the camera. Specifically, it will

throttle /o3d3xx/camera/cloud to /o3d3xx/camera/cloud_throttle,

/o3d3xx/camera/amplitude to /o3d3xx/camera/amplitude_throttle,

/o3d3xx/camera/raw_amplitude_throttle,

/o3d3xx/camera/depth to /o3d3xx/camera/depth_throttle,

/o3d3xx/camera/confidence to /o3d3xx/camera/confidence_throttle. To launch

this node:

$ roslaunch o3d3xx throttled.launch

By default, it will throttle the above named topics to 1 Hz. You can change the

frequency with the hz command line argument. For example, to send data at 2

Hz:

$ roslaunch o3d3xx throttled.launch hz:=2.0

Using this launch file to launch this set of nodes is strictly optional. We

have found use for it in two ways. First, to slow down the publishing frequency

of the topics when used in conjunction with the /o3d3xx/camera/file_writer

node for collecting data (i.e., in those instances when we really do not need

all the data but rather some subsampling of it). Second, if we are running the

camera on a drone (for example) that has a slower radio link down to a ground

control station running rviz where we want to see what the camera sees while

the drone is in flight. Clearly there are other uses for this, YMMV.

Configuring Camera Settings

Configuring the camera is accomplished by passing a JSON string to the

/o3d3xx/camera/config_node which will call the /o3d3xx/camera/Config

service to mutate the camera settings. Using a JSON string to configure the

camera has the following primary benefits:

- Configuration is declarative. The camera configuration will reflect that which is described by the JSON file.

- The JSON data is human-consumable and easily edited in a text editor. This makes it very convenient for headless embedded systems.

- The JSON data is machine parsable, so configuring the camera on the fly via programmable logic is also possible.

There are also a few downfalls to using JSON. Most notably the lack of comments

and an enforceable schema. One could argue that the latter keeps things

flexible. None-the-less, JSON is the format used by libo3d3xx and, by

extension, this ROS package.

An exemplary JSON file is shown here (this is the result

of calling the /o3d3xx/camera/Dump service on a development system). When

passing a JSON string (like the previously linked to file) to the

/o3d3xx/camera/Config service (or to the /o3d3xx/camera/config_node) the

following rules are used to configure the camera:

- The

Devicesection is processed and saved on the camera. - The

Appssection is processed. For each app:- If the

Indexkey is present, a current app at thatIndexis looked up. If present, it is edited to reflect the data in the JSON file. If an app at thatIndexis not present, a new app is created with the parameters from the JSON file. It is not guaranteed that the new app will have the specifiedIndex. - If the

Indexkey is not present, a new app is created with the parameters as specified in the JSON file.

- If the

- The active application is set by consulting the desired index of the

ActiveApplicationfrom theDevicesection of the JSON. If the specifiedIndexdoes not exist, the active application is not set. - The

Netsection is processed. A reboot of the camera may be necessary after changing the camera's network parameters. Additionally, you will likely need to restart the/o3d3xx/cameranode pointing it to the new IP address (if that is what you changed).

It should also be noted that any portion of the JSON tree can be specfied to configure only that part of the camera. The only rule to follow is that all keys should be fully qualified. For example, to simply set the active application, you can use a JSON snippet like this:

{

"o3d3xx":

{

"Device":

{

"ActiveApplication": "2"

}

}

}

The above snippet is provided as an example here. To apply this to your camera, you can:

$ roslaunch o3d3xx config.launch infile:=/path/to/ex_set_active.json

It was also noted above that the /o3d3xx/camera/config_node will read stdin

by default, so you could also:

$ echo '{"o3d3xx":{"Device":{"ActiveApplication":"2"}}}' | roslaunch o3d3xx config.launch

Here is another example JSON file. This one will add a

new application to the camera, using the default values for the high-dynamic

range imager. We note that this application is added to the camera because no

Index is specified for the application. If an Index were specfied, the

application at the specified Index, if present, would be edited to reflect

this configuration.

In general, a simple way to configure camera settings without having to memorize the JSON syntax would be to simply dump the current camera settings to a file:

$ rosservice call /o3d3xx/camera/Dump > /tmp/camera.json

Then, open /tmp/camera.json with a text editor to create a declarative JSON

configuration for your camera. You should be sure to delete the variable names

from the rosservice output if you are following this example

word-for-word. Additionally, you can delete any unnecessary keys if you would

like, however it is not strictly necessary as the /o3d3xx/camera/Config

service will leave unedited values unchanged on the camera. Once you have a

configuration that you like, you can:

$ roslaunch o3d3xx config.launch infile:=/tmp/camera.json

You can check that your configuration is active by calling the

/o3d3xx/camera/Dump service again.

TODO

Please see the Github Issues.

LICENSE

Please see the file called LICENSE.

AUTHORS

Tom Panzarella tom@loveparkrobotics.com

Changes between o3d3xx-ros 0.2.4 and 0.2.5

- Addressed bug in unit tests (services test)

Changes between o3d3xx-ros 0.2.3 and 0.2.4

- Implemented a new

/Triggerservice for cameras configured in software-triggered mode. - Added a

assume_sw_triggerparameter to the camera launch file to optimize some settings when running in software triggered mode.

Changes between o3d3xx-ros 0.2.2 and 0.2.3

- Tweaks to support Kinetic Kame on Ubuntu 16.04 (Xenial)

Changes between o3d3xx-ros 0.2.1 and 0.2.2

- Loosened up the libo3d3xx version checking in CMakeLists.txt

Changes between o3d3xx-ros 0.2.0 and 0.2.1

Frame id is configurable and topic names are relative

- See PR17

libo3d3xx 0.4.0

- The new modularized

libo3d3xxis now used as the underlying driver.

test/test_services

- New test to ensure calling the

/Dumpand/Configservices work.

cmake/FindXXX

- Copied the following FindXXX cmake scripts from

libo3d3xx:- Findo3d3xx_camera

- Findo3d3xx_framegrabber

- Findo3d3xx_image

Deprecations and Eliminations

- FileWriteNode has been removed from project. Use

rosbag.

Changes between o3d3xx-ros 0.1.8 and 0.2.0

o3d3xx_node

- Aware of pluggable pcic schema masks

- Publishes unit vectors (on a latched topic)

- Publishes camera extrinsics

- Now that we are publishing a proper transform between the camera frame and the optical frame (see below) we are tagging our published topics with the appropriate frame_id. Specfically, the cartesian data are marked to be in the camera frame (using ROS conventions) and the other data are marked as in the optical frame (O3D conventions).

launch/camera.launch

You can now pass a schema mask parameter to the camera to selectively choose which images are streamed back from the camera to the host.

Now that we are providing the ability to compute the Cartesian data off-board the camera, the tf2 static_transform_publisher is now publishing out a proper transform between the camera frame and the optical frame. If you compute the Cartesian data off-board the camera by utilizing the extrinsics, unit vectors, and radial distance image, those computed Cartesian values will be expressed in the camera optical frame. To get them into a standard ROS coord frame, the data will need to be transformed. By publishing this transform via tf2, users can use the tf2 API to compute the transformation.

launch/o3d3xx.rviz

- Added an Axes marker to the display (not selected by default). This is necessary if you want to inspect the difference between the camera frame and its optical frame. Since there is no translation, only a rotation, flipping the coord on an axes marker seems easier to grok than how the tf display is rendering.

cmake/Findlibo3d3xx.cmake

- Aware of new

libo3d3xxdefault install location into/usr

test/test_camera.py

- Added new unit test that tests:

- Getting data from the camera

- Computing the Cartesian data and comparing it to ground truth

- To do the comparison to ground truther, the computed cartesian data must also be transformed, to do that we use the tf2 API and hence The transform from the optical frame to the camera frame that we are publishing is also tested.

NOTE: The unit test(s) currently require the hardware to be present as it works with live data.

Deprecations and Eliminations

- The amplitude histogram is no longer being published.

- The

rvizconfig that colors the point cloud pixels with the x-depth has been eliminated. Pixels will be colored with the normalized amplitude. - FileWriteNode has been deprecated. It is slated to disappear in the next

minor release and definitely will be vaporized by 1.0.0. Data collection

pipelines should be updated to use

rosbagor some other tool.

Changes between o3d3xx-ros 0.1.7 and 0.1.8

o3d3xx_node

- A new parameter,

timeout_tolerance_secshas been added. This is a timeout period to wait before attempting to restart the underlying frame grabber if it is currently timing out while asking for image data. This has been added to add a level of robustness to things like network cables getting yanked out or allowing the camera to undergo a power cycle without having to restart the ROS processes. Bascially, a way of autonomously recovering from anything that would break the TCP connection between the underlying ASIO event loop inlibo3d3xxand the O3D camera's PCIC interface. Good for robots.

Changes between o3d3xx-ros 0.1.6 and 0.1.7

o3d3xx_node

- Publishes the raw amplitude image

rviz

- The rviz configuration files now stack in the raw amplitude as a new pane along with the normalized amplitude and the amplitude histogram.

launch/throttled.launch

- throttles the

raw_amplitudetopic

file_writer_node

- The

raw_amplitudeimage is now serialized to the file system as a (losslessly compressed) PNG.

Changes between o3d3xx-ros 0.1.5 and 0.1.6

launch/throttled.launch

- throttles the

xyz_imagetopic

launch/camera.launch

- Moved to tf2 for the static_transform_publisher. The transform from /o3d3xx/camera_link to /o3d3xx/camera_optical_link is now published on /tf_static (a latched topic) for efficiency.

file_writer_node

- The

xyz_imageis now serialized to the file system in OpenCV FileStorage format (YAML).

Changes between o3d3xx-ros 0.1.4 and 0.1.5

o3d3xx_node

- The

xyzi_imagehas been replaced by anxyz_image. Thexyz_imageis a representation of the point cloud encoded as an opencv image (CV_16SC3) where the 3 image planes are: 0 = x, 1 = y, 2 = z. The data in this image are signed 16-bit integers and the units are in mm. This is a change from the original implementation of thexyzi_imageintroduced in version 0.1.4. The intensity data can still be referenced via theamplitudeimage. Keeping the units in mm allows for using the more efficient 16-bit signed int type as opposed to 32-bit floats. Also note that since the image is being constructed by the underlyinglibo3d3xxdriver, there is no longer a flag in the launch file to turn on/off the publishing of this data.

launch/camera.launch

- Fixed the parent-child relationship between the tf frames

Changes between o3d3xx-ros 0.1.3 and 0.1.4

o3d3xx_node

- All published topics now have timestamps in the message header

- The main camera node can now publish an

xyzi_image. This is a representation of the point cloud (same exact data) encoded as an opencv image (CV_32FC4) where the 4 image planes are: 0 = x, 1 = y, 2 = z, 3 = intensity. This has been introduced to enable easier interop between PCL and numpy for doing point cloud analysis. While you can iterate over point cloud messages in python with existing ROS-provided tools. It is dreadfully slow. By providing an OpenCV image encoding of the point cloud, a numpy array can be created at C++ speed. Publishing this image can be turned on/off via thecamera.launchfile so that no additional overhead will be realized by users who do not wish to exploit this feature.

file_writer_node

- A dump of the camera configuration (JSON) is now written to the output directory upon launching the node, prior to data capture.

The initial release of o3d3xx-ros was 0.1.3

Wiki Tutorials

Source Tutorials

Launch files

- launch/throttled.launch

-

- ns [default: o3d3xx]

- nn [default: camera]

- hz [default: 1.0]

- launch/camera.launch

-

- ns [default: o3d3xx]

- nn [default: camera]

- ip [default: 192.168.0.69]

- xmlrpc_port [default: 80]

- password [default: ]

- schema_mask [default: 15]

- timeout_millis [default: 500]

- timeout_tolerance_secs [default: 5.0]

- publish_viz_images [default: true]

- frame_id_base [default: $(arg ns)/$(arg nn)]

- respawn [default: false]

- assume_sw_triggered [default: false]

- launch/config.launch

-

- ns [default: o3d3xx]

- nn [default: camera]

- infile [default: -]

- launch/rviz.launch