Repository Summary

| Checkout URI | https://github.com/visiotec/vtec_ros.git |
| VCS Type | git |
| VCS Version | master |
| Last Updated | 2023-03-16 |
| Dev Status | UNMAINTAINED |
| Released | UNRELEASED |
| Tags | No category tags. |
| Contributing | Help Wanted (-), Good First Issues (-), Pull Requests to Review (-) |

Packages

| Name | Version |
|---|---|
| vtec_msgs | 0.1.0 |
| vtec_ros | 0.1.0 |
| vtec_tracker | 0.1.0 |

README

VisioTec ROS Packages

ROS (Kinetic and Melodic) packages developed by the VisioTec research group at CTI Renato Archer, Brazil. Further information about this group can be found here.

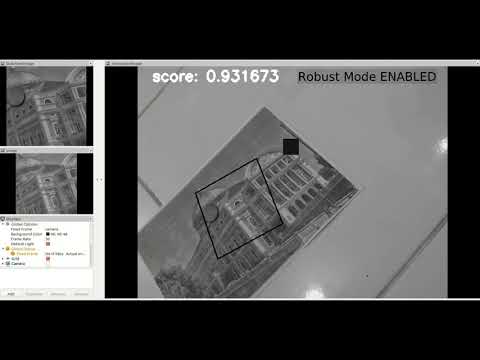

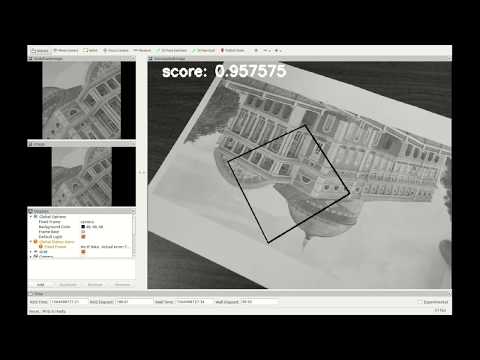

Video Examples

Click on the thumbnails to watch the videos on YouTube.

- Intensity-based visual tracking with full 8-DoF homography

- Robust intensity-based visual tracking with full 8-DoF homography and occlusion handling

- Unified intensity- and feature-based visual tracking with full 8-DoF homography

Documentation and Citing

If you use this work, please cite this paper:

@inproceedings{Nogueira2020TowardsAU,
  title={Towards a Unified Approach to Homography Estimation Using Image Features and Pixel Intensities},
  author={Lucas Nogueira and Ely Carneiro de Paiva and Geraldo F. Silveira},
  booktitle={International Conference on Autonomic and Autonomous Systems},
  pages={110-115},
  year={2020}
}

A technical report, available here, describes the underlying algorithm and its working principles.

@TechReport{nogueira2019,
  author = {Lucas Nogueira and Ely de Paiva and Geraldo Silveira},
  title = {Visio{T}ec robust intensity-based homography optimization software},
  number = {CTI-VTEC-TR-01-19},
  institution = {CTI},
  year = {2019},
  address = {Brazil}
}

Installation

These packages were tested on:

- version (tag) 2.1 - ROS Noetic with Ubuntu 20.04 and OpenCV 4.2

- version (tag) 2.0 - ROS Melodic with Ubuntu 18.04

- version (tag) 1 - ROS Kinetic with Ubuntu 16.04

Dependencies

OpenCV

This package depends on the OpenCV module xfeatures2d. This module is neither compiled by default nor included in the OpenCV packages of the Ubuntu distribution, so you must build OpenCV from source and configure the build to include it.

(Exception: in ROS Kinetic/Ubuntu 16.04, this module is installed by default with the ROS packages, and therefore no further steps are needed.)

Instructions for installing OpenCV on ROS Melodic can be found here:

- https://answers.ros.org/question/312669/ros-melodic-opencv-xfeatures2d/

- https://linuxize.com/post/how-to-install-opencv-on-ubuntu-18-04/

For convenience, here are direct URLs for the 4.2.0 releases of the opencv and opencv_contrib repositories:

- https://github.com/opencv/opencv/archive/refs/tags/4.2.0.tar.gz

- https://github.com/opencv/opencv_contrib/archive/refs/tags/4.2.0.tar.gz
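As a minimal sketch of fetching those two archives, the helper names `opencv_tarball_url` and `fetch_opencv_sources` below are hypothetical, and the `~/opencv_build` default is an assumption taken from the path used in the cmake command. The script only defines the functions; nothing is downloaded until `fetch_opencv_sources` is called.

```shell
#!/bin/sh
# Hypothetical helper: print the GitHub tag-archive URL for an OpenCV repo.
# Arguments: repo name (opencv or opencv_contrib) and version tag (e.g. 4.2.0).
opencv_tarball_url() {
  printf 'https://github.com/opencv/%s/archive/refs/tags/%s.tar.gz\n' "$1" "$2"
}

# Hypothetical helper: download and unpack both archives into a build directory.
# Assumes wget and tar are installed; the default directory is an assumption.
fetch_opencv_sources() {
  version="${1:-4.2.0}"
  build_dir="${2:-$HOME/opencv_build}"
  mkdir -p "$build_dir" || return 1
  for repo in opencv opencv_contrib; do
    archive="$build_dir/$repo-$version.tar.gz"
    wget -O "$archive" "$(opencv_tarball_url "$repo" "$version")" || return 1
    tar -xzf "$archive" -C "$build_dir" || return 1
  done
}
```

Calling `fetch_opencv_sources` with no arguments should leave `opencv-4.2.0` and `opencv_contrib-4.2.0` directories under `~/opencv_build`, since GitHub tag archives unpack into a directory named after the repository and tag.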

The following cmake command was used to compile OpenCV. The OPENCV_ENABLE_NONFREE option enables compilation of the feature detectors, and the path given in the OPENCV_EXTRA_MODULES_PATH option must point to the modules directory of your opencv_contrib sources.

cmake -D CMAKE_BUILD_TYPE=RELEASE \
    -D CMAKE_INSTALL_PREFIX=/usr/local \
    -D INSTALL_C_EXAMPLES=ON \
    -D INSTALL_PYTHON_EXAMPLES=ON \
    -D OPENCV_GENERATE_PKGCONFIG=ON \
    -D OPENCV_EXTRA_MODULES_PATH=~/opencv_build/opencv_contrib-4.2.0/modules \
    -D OPENCV_ENABLE_NONFREE=ON \
    -D BUILD_EXAMPLES=ON ..

The dependency on xfeatures2d comes from the feature detection algorithm. If you only want to use the intensity-based algorithms, you may use version 1 of this repository, although it is dated.
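One rough way to confirm that an OpenCV installation includes xfeatures2d is to look for the module's header. The sketch below is not part of this repository: the function name `has_xfeatures2d` is hypothetical, and `/usr/local/include/opencv4` is an assumption about where an OpenCV 4 build lands when `CMAKE_INSTALL_PREFIX=/usr/local`. Adjust the directory for other versions or prefixes.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the xfeatures2d header exists under the
# given OpenCV include directory (the directory that contains "opencv2/").
has_xfeatures2d() {
  include_dir="$1"
  [ -f "$include_dir/opencv2/xfeatures2d.hpp" ]
}

# Assumed include directory for an OpenCV 4 install under /usr/local.
if has_xfeatures2d /usr/local/include/opencv4; then
  echo "xfeatures2d header found"
else
  echo "xfeatures2d header not found in /usr/local/include/opencv4"
fi
```

A present header only shows that the module was installed; it does not prove that the non-free detectors were enabled at build time.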

usb_cam

Install the usb_cam driver from the ROS repositories, choosing the package that matches your ROS distribution.

sudo apt-get install ros-[kinetic|melodic]-usb-cam
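The `[kinetic|melodic]` part of the command above is a placeholder rather than literal shell syntax. As a small sketch, the hypothetical helper `usb_cam_pkg` below builds the package name from an explicit distribution name or, failing that, from the `ROS_DISTRO` environment variable that a sourced ROS setup file normally exports.

```shell
#!/bin/sh
# Hypothetical helper: print the usb_cam package name for a ROS distribution.
# Uses the first argument if given, otherwise falls back to $ROS_DISTRO.
usb_cam_pkg() {
  distro="${1:-${ROS_DISTRO:-}}"
  if [ -z "$distro" ]; then
    echo "ROS_DISTRO is not set; source your ROS setup file or pass a distro name" >&2
    return 1
  fi
  printf 'ros-%s-usb-cam\n' "$distro"
}
```

With a ROS environment sourced, the install step could then be written as `sudo apt-get install "$(usb_cam_pkg)"`.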

Build

Set up a ROS workspace.

File truncated at 100 lines see the full file
