mh5_anomaly_detector package from mh5_anomaly_detector repo

Package Summary

| Version | 0.1.6 |
| License | BSD |
| Build type | CATKIN |
| Use | RECOMMENDED |

Repository Summary

| Checkout URI | https://github.com/narayave/mh5_anomaly_detector.git |
| VCS Type | git |
| VCS Version | master |
| Last Updated | 2018-08-19 |
| Dev Status | MAINTAINED |
| Released | UNRELEASED |


Maintainers

- Vedanth Narayanan

Authors

- Vedanth Narayanan

ROS Anomaly Detector package

Overview

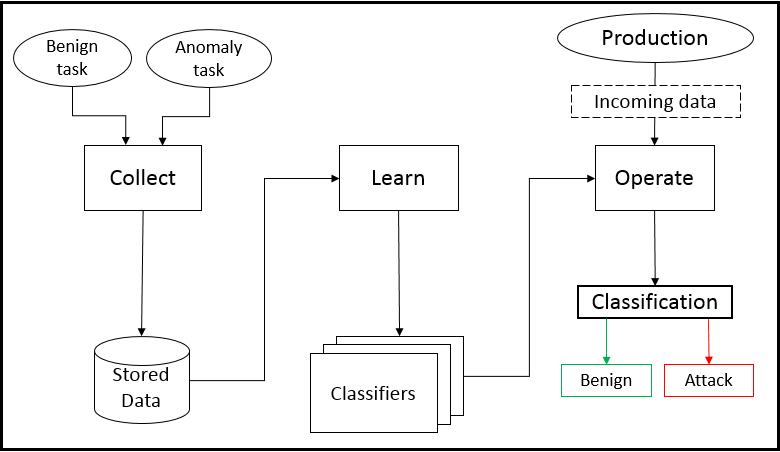

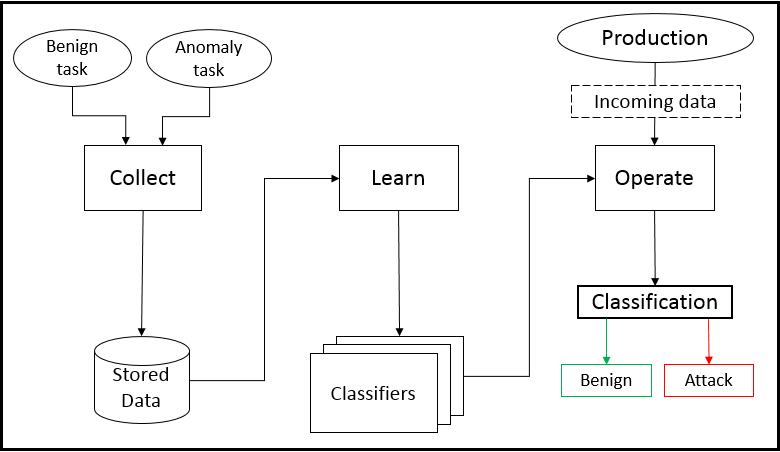

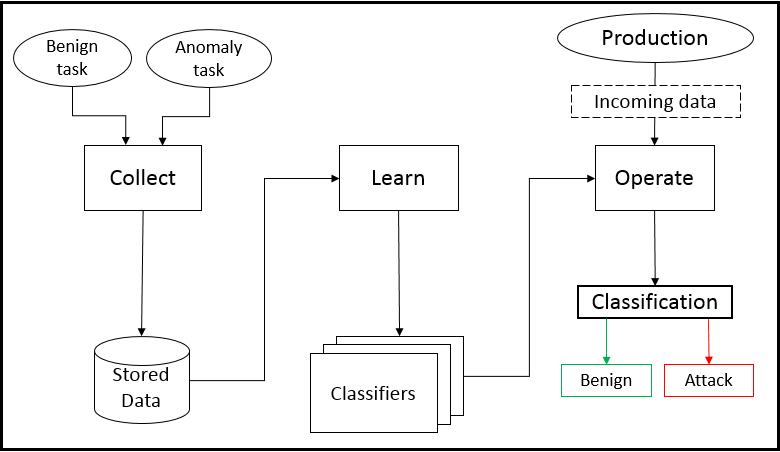

The ROS Anomaly Detector Module (ADM) is designed to run alongside industrial robotic arm tasks and detect unintended deviations at the application level. It uses a learning-based technique to do so. The ADM is built as a ROS package so that it fits naturally into the ROS ecosystem. While this module is specific to anomaly detection for an industrial arm, it is extensible to other projects with similar goals and is meant to be a starting point for them.

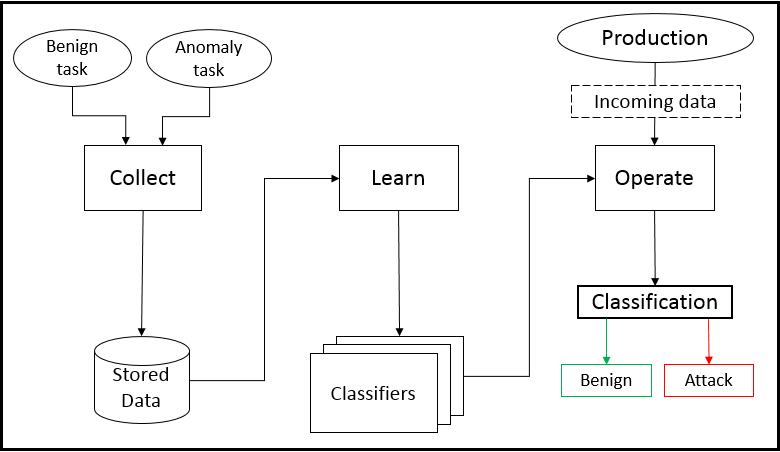

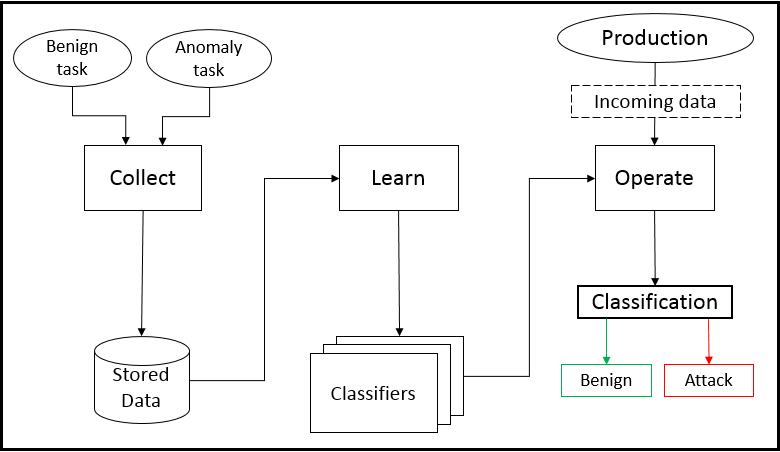

Anomaly detection within this module relies on a three-step process: creating datasets from the messages published within ROS, training learning models on those datasets, and deploying the trained models in production. Accordingly, the ROS ADM can be executed in three modes: Collect, Learn, and Operate. The following image presents the high-level workflow that is meant to be followed.

Collect Mode

The ADM is first run in Collect mode after the task has been programmed and assessed in the commissioning phase of the robot. In this mode, the ADM runs as a node and collects data by subscribing to the `/joint_states` topic, which carries messages of type `sensor_msgs/JointState`.
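As a rough illustration of what collecting from `/joint_states` can look like, the sketch below flattens each message into one CSV row. It is not the package's own collector: the function names, the node name `adm_collect_sketch`, the CSV layout, and the `FakeJointState` stand-in are all assumptions made for this example. Only the topic name and message type come from the text above.

```python
import csv
from collections import namedtuple

# Hypothetical stand-in with the same field names as sensor_msgs/JointState,
# so the conversion can be tried without a ROS installation.
FakeJointState = namedtuple(
    "FakeJointState", ["name", "position", "velocity", "effort"]
)


def joint_state_to_row(msg):
    """Flatten one JointState-like message into a single list of values."""
    return list(msg.position) + list(msg.velocity) + list(msg.effort)


def collect(csv_path):
    """Subscribe to /joint_states and write one CSV row per message."""
    # Imported lazily: these are only available on a machine with ROS.
    import rospy
    from sensor_msgs.msg import JointState

    rospy.init_node("adm_collect_sketch")  # assumed node name
    with open(csv_path, "w", newline="") as handle:
        writer = csv.writer(handle)
        rospy.Subscriber(
            "/joint_states",
            JointState,
            lambda msg: writer.writerow(joint_state_to_row(msg)),
        )
        rospy.spin()  # block until shutdown so the file stays open
```

Keeping the row conversion separate from the subscriber wiring means the dataset layout can be checked offline with recorded or hand-built messages.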

Learn Mode

The goal of this mode is to provide the user with trained classifiers. To accomplish this, the user must supply both the training datasets and the classifiers of interest. These are supplied to an internal library via a script, and the library semi-automates the learning process. In particular, the user only needs to supply their script to the Interface component. The Interface in turn pre-processes the data (applying dimensionality reduction if specified) and trains each classifier on its appropriate dataset. After training, the classifiers are saved to storage.
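The flow described above (optional pre-processing, training every supplied classifier, then saving) can be sketched in a few lines. This is an assumption-laden illustration, not the internal library: `train_all`, `save_all`, and the toy `RangeModel` are hypothetical names, and pickle is only one plausible storage format. Any object with `fit` and `predict` methods would work in place of the toy model.

```python
import os
import pickle


class RangeModel(object):
    """Hypothetical toy classifier: flags values outside the training range."""

    def fit(self, samples):
        self.low, self.high = min(samples), max(samples)
        return self

    def predict(self, samples):
        # 1 = resembles the training data, -1 = anomaly
        return [1 if self.low <= s <= self.high else -1 for s in samples]


def train_all(models, samples, preprocess=None):
    """Fit every (name, model) pair on the (optionally pre-processed) samples."""
    if preprocess is not None:
        samples = preprocess(samples)  # e.g. a dimensionality-reduction step
    fitted = {}
    for name, model in models:
        fitted[name] = model.fit(samples)
    return fitted


def save_all(fitted, out_dir):
    """Pickle each fitted model to <out_dir>/<name>.pkl and return the paths."""
    paths = {}
    for name, model in fitted.items():
        paths[name] = os.path.join(out_dir, name + ".pkl")
        with open(paths[name], "wb") as handle:
            pickle.dump(model, handle)
    return paths
```

Passing the models as `(name, model)` tuples mirrors the lists used in the package's example script, so each saved file can be named after its classifier.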

At a high level, the user's responsibilities include writing a simple script and supplying the names of the datasets in a structured manner. With a small set of function calls to the Interface class, the classifiers are trained and prepared for deployment. There are four key programmatic steps to get training predictions and their associated accuracies. First, the Interface class is instantiated by passing a YAML input file as an argument. YAML is a human-friendly data serialization format that is interoperable between programming languages and systems. Next, the user creates instances of the classifiers they want trained. The user then passes the classifiers to the Interface class for training by calling the `genmodel_train()` function. Lastly, testing predictions can be gathered for all classifiers by calling `get_testing_predictions()`.

The following Python script shows an example that uses the learning library.

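```python
import sys

from sklearn import svm

# Interface comes from this package's learning library. Its import path is
# not shown in this excerpt, so add the appropriate import here.

if __name__ == "__main__":
    unsupervised_models = []
    supervised_models = []

    # Step 1: instantiate the Interface with the YAML input file
    input_file = sys.argv[1]  # YAML input
    interface = Interface(input_file)

    # Step 2: create the classifiers to train, as (name, model) pairs
    ocsvm = svm.OneClassSVM(nu=0.5, kernel="rbf", gamma=1000)
    unsupervised_models.append(('ocsvm', ocsvm))

    svm1 = svm.SVC(kernel='rbf', gamma=5, C=10)
    supervised_models.append(('rbfsvm1', svm1))

    svm2 = svm.SVC(kernel='rbf', gamma=50, C=100)
    supervised_models.append(('rbfsvm2', svm2))

    # Step 3: train all classifiers
    interface.genmodel_train(unsupervised_models, supervised_models)

    # Step 4: gather testing predictions for all classifiers
    unsup, sup = interface.get_testing_predictions()
```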

Learning library

File truncated at 100 lines see the full file


Launch files

- launch/module.launch
  - mode_arg
  - other_args
  - file_name
  - pkg_name

- launch/run.launch
  - THIS IS AN EXAMPLE FILE


|

mh5_anomaly_detector package from mh5_anomaly_detector repomh5_anomaly_detector |

ROS Distro

|

Package Summary

| Version | 0.1.6 |

| License | BSD |

| Build type | CATKIN |

| Use | RECOMMENDED |

Repository Summary

| Checkout URI | https://github.com/narayave/mh5_anomaly_detector.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2018-08-19 |

| Dev Status | MAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Additional Links

Maintainers

- Vedanth Narayanan

Authors

- Vedanth Narayanan

ROS Anomaly Detector package

Overview

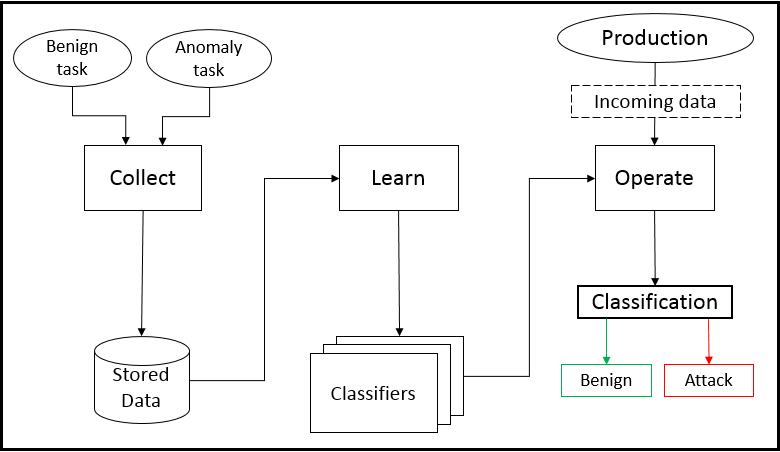

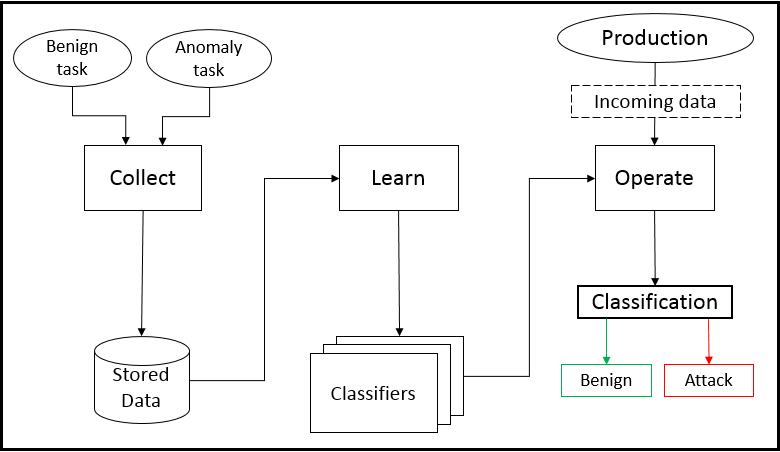

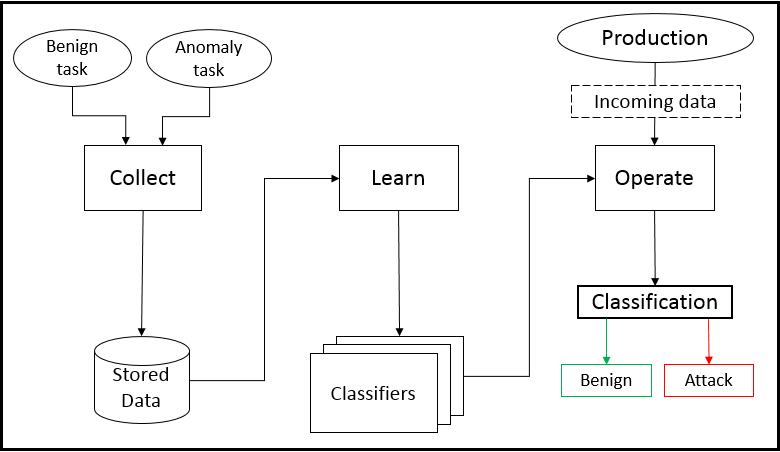

The ROS Anomaly Detector Module (ADM) is designed to execute alongside industrial robotic arm tasks to detect unintended deviations at the application level. The ADM utilizes a learning based technique to achieve this. The process has been made efficient by building the ADM as a ROS package that aptly fits in the ROS ecosystem. While this module is specific to anomaly detection for an industrial arm, it is extensible to other projects with similar goals. This is meant to be a starting point for other such projects.

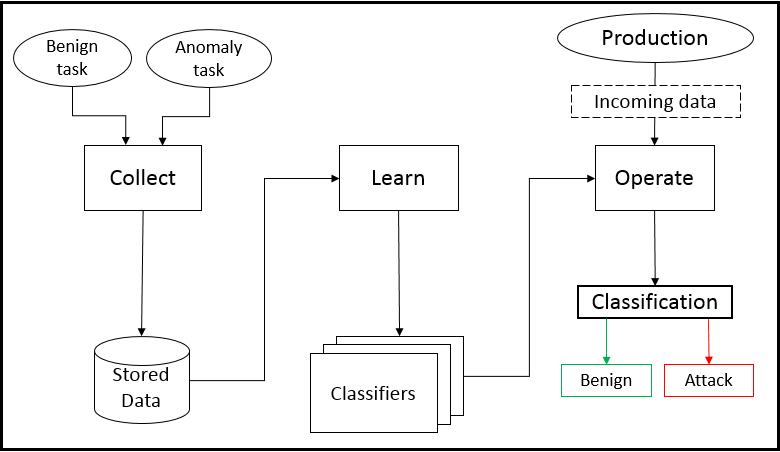

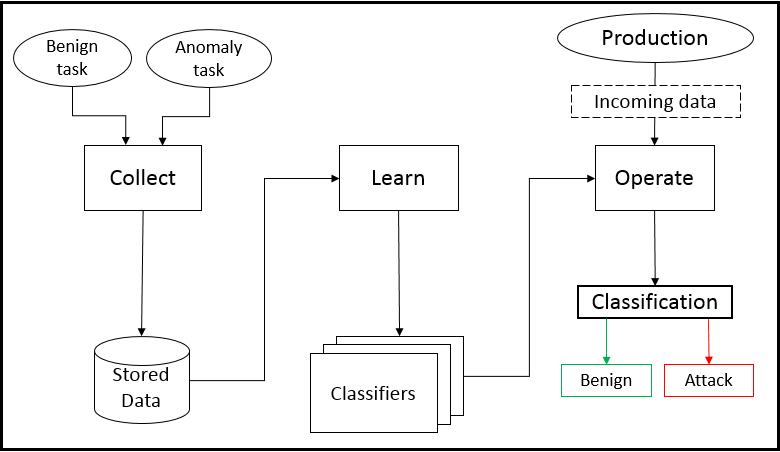

The crux of anomaly detection within this module relies on a three step process. The steps includes creating datasets out of the published messages within ROS, training learning models from those datasets, and deploying it in production. Appropriately, the three modes that the ROS ADM can be executed in are Collect, Learn, and Operate. The following image presents the high level workflow that is meant to be followed.

Collect Mode

The ADM is first run in the Collect mode after the task has been programmed and assessed in the commissioning phase of the robot. This mode runs AND collects data as a node by subscribing to the ‘/joint_states’ topic, of message type ‘sensor_msgs/JointState,’ within ROS.

Learn Mode

The goal of this mode is submitting the user with trained classifiers. In order to accomplish this the user is depended on to supply not only the datasets for training, but also the classifiers of interest. These are supplied to an internal library via a script. The internal library semi-automates the learning process. Particularly, the user is only required to supply their script to the Interface component. It in turn, pre-processes the data (dimensionality reduction if specified), and the set of classifiers are all trained with their appropriate datasets. After training, the classifiers are saved in storage.

At a high level, the user’s responsibilities includes writing a simple script, and supplying the names of datasets in a structured manner. From a small set of functions calls to the Interface class, the classifiers are quickly trained and are efficiently prepared for deployment. There are four key, programmatic steps to accomplish in order to get training predictions and its associated accuracies. First, the Interface class needs to be instantiated by passing a YAML input file as an argument. YAML is a human-friendly data serialization method, that is interoperable between programming languages and systems. Next, the user creates instances of the classifiers they want trained. The user then passes off the classifiers to the Interface class for training, by calling the

``` function. Lastly, testing predictions can be gathered for all classifiers, by calling

```get_testing_predictions()

```.

The following python script presents an example of a script utilizing the learning library.

```python

if __name__ == "__main__":

unsupervised_models = []

supervised_models = []

# Step 1

input_file = sys.argv[1] # YAML input

interface = Interface(input_file)

# Step 2

ocsvm = svm.OneClassSVM(nu=0.5, kernel="rbf", gamma=1000)

unsupervised_models.append(('ocsvm', clf_ocsvm))

svm1 = svm.SVC(kernel='rbf', gamma=5, C=10)

supervised_models.append(('rbfsvm1', svm1))

svm2 = svm.SVC(kernel='rbf', gamma=50, C=100)

supervised_models.append(('rbfsvm2', svm2))

# Step 3

interface.genmodel_train(unsupervised_models, supervised_models)

# Step 4

unsup, sup = interface.get_testing_predictions()

Learning library

File truncated at 100 lines see the full file

Dependant Packages

Launch files

- launch/module.launch

-

- mode_arg

- other_args

- file_name

- pkg_name

- launch/run.launch

- THIS IS AN EXAMPLE FILE

-

Messages

Services

Plugins

Recent questions tagged mh5_anomaly_detector at Robotics Stack Exchange

|

mh5_anomaly_detector package from mh5_anomaly_detector repomh5_anomaly_detector |

ROS Distro

|

Package Summary

| Version | 0.1.6 |

| License | BSD |

| Build type | CATKIN |

| Use | RECOMMENDED |

Repository Summary

| Checkout URI | https://github.com/narayave/mh5_anomaly_detector.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2018-08-19 |

| Dev Status | MAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Additional Links

Maintainers

- Vedanth Narayanan

Authors

- Vedanth Narayanan

ROS Anomaly Detector package

Overview

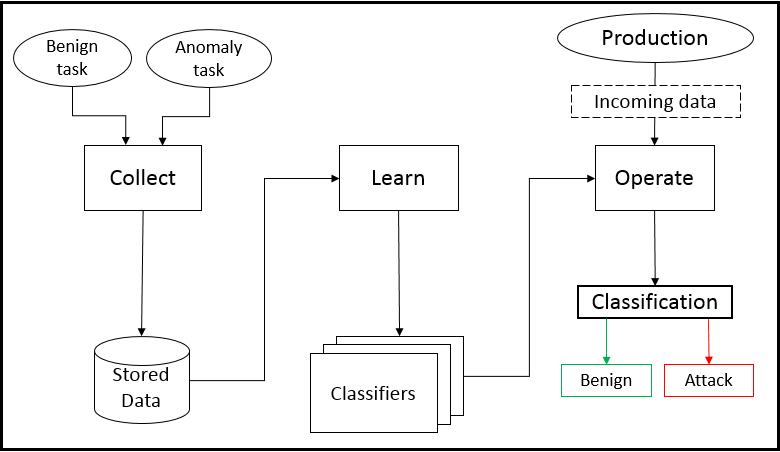

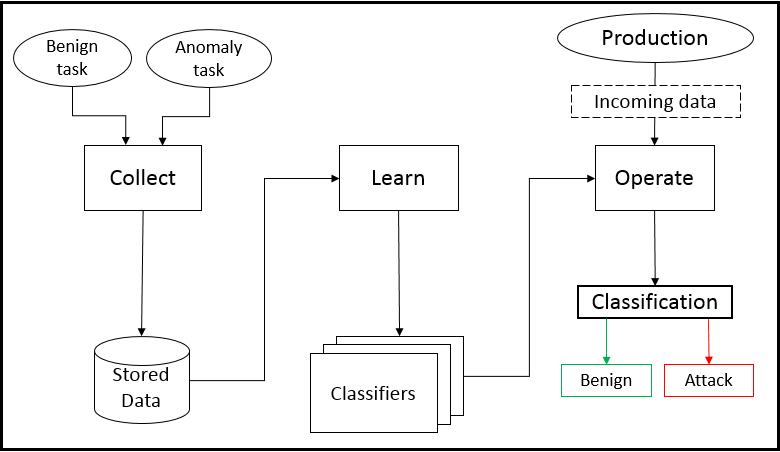

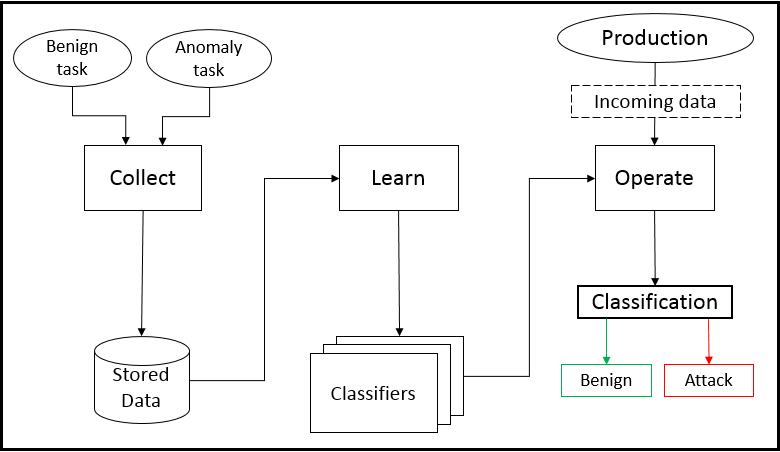

The ROS Anomaly Detector Module (ADM) is designed to execute alongside industrial robotic arm tasks to detect unintended deviations at the application level. The ADM utilizes a learning based technique to achieve this. The process has been made efficient by building the ADM as a ROS package that aptly fits in the ROS ecosystem. While this module is specific to anomaly detection for an industrial arm, it is extensible to other projects with similar goals. This is meant to be a starting point for other such projects.

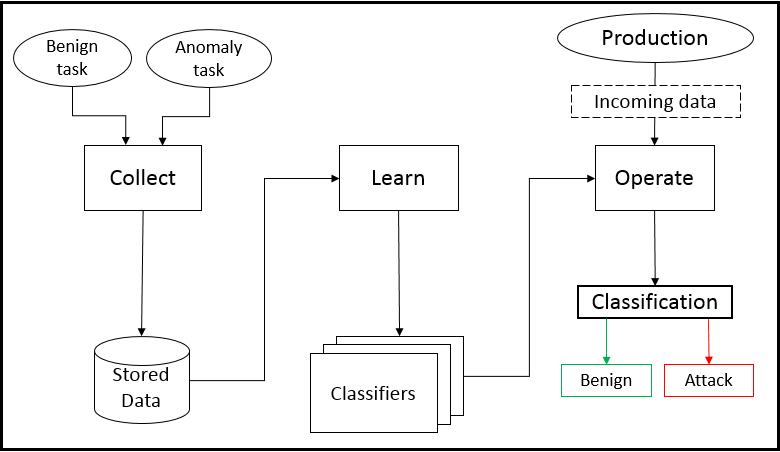

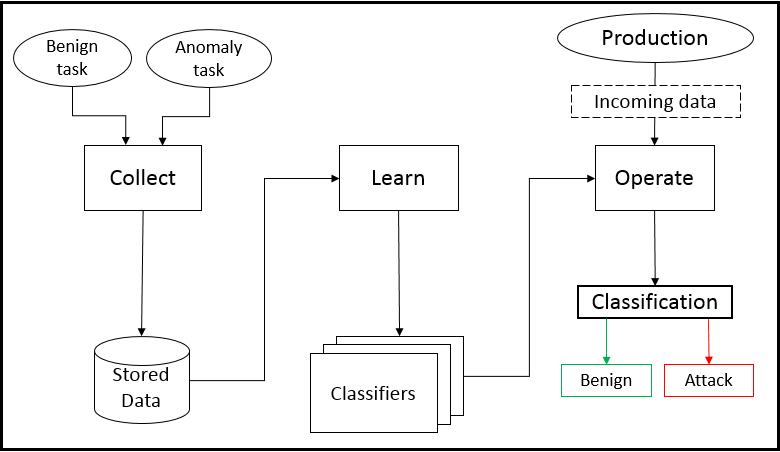

The crux of anomaly detection within this module relies on a three step process. The steps includes creating datasets out of the published messages within ROS, training learning models from those datasets, and deploying it in production. Appropriately, the three modes that the ROS ADM can be executed in are Collect, Learn, and Operate. The following image presents the high level workflow that is meant to be followed.

Collect Mode

The ADM is first run in the Collect mode after the task has been programmed and assessed in the commissioning phase of the robot. This mode runs AND collects data as a node by subscribing to the ‘/joint_states’ topic, of message type ‘sensor_msgs/JointState,’ within ROS.

Learn Mode

The goal of this mode is submitting the user with trained classifiers. In order to accomplish this the user is depended on to supply not only the datasets for training, but also the classifiers of interest. These are supplied to an internal library via a script. The internal library semi-automates the learning process. Particularly, the user is only required to supply their script to the Interface component. It in turn, pre-processes the data (dimensionality reduction if specified), and the set of classifiers are all trained with their appropriate datasets. After training, the classifiers are saved in storage.

At a high level, the user’s responsibilities includes writing a simple script, and supplying the names of datasets in a structured manner. From a small set of functions calls to the Interface class, the classifiers are quickly trained and are efficiently prepared for deployment. There are four key, programmatic steps to accomplish in order to get training predictions and its associated accuracies. First, the Interface class needs to be instantiated by passing a YAML input file as an argument. YAML is a human-friendly data serialization method, that is interoperable between programming languages and systems. Next, the user creates instances of the classifiers they want trained. The user then passes off the classifiers to the Interface class for training, by calling the

``` function. Lastly, testing predictions can be gathered for all classifiers, by calling

```get_testing_predictions()

```.

The following python script presents an example of a script utilizing the learning library.

```python

if __name__ == "__main__":

unsupervised_models = []

supervised_models = []

# Step 1

input_file = sys.argv[1] # YAML input

interface = Interface(input_file)

# Step 2

ocsvm = svm.OneClassSVM(nu=0.5, kernel="rbf", gamma=1000)

unsupervised_models.append(('ocsvm', clf_ocsvm))

svm1 = svm.SVC(kernel='rbf', gamma=5, C=10)

supervised_models.append(('rbfsvm1', svm1))

svm2 = svm.SVC(kernel='rbf', gamma=50, C=100)

supervised_models.append(('rbfsvm2', svm2))

# Step 3

interface.genmodel_train(unsupervised_models, supervised_models)

# Step 4

unsup, sup = interface.get_testing_predictions()

Learning library

File truncated at 100 lines see the full file

Dependant Packages

Launch files

- launch/module.launch

-

- mode_arg

- other_args

- file_name

- pkg_name

- launch/run.launch

- THIS IS AN EXAMPLE FILE

-

Messages

Services

Plugins

Recent questions tagged mh5_anomaly_detector at Robotics Stack Exchange

|

mh5_anomaly_detector package from mh5_anomaly_detector repomh5_anomaly_detector |

ROS Distro

|

Package Summary

| Version | 0.1.6 |

| License | BSD |

| Build type | CATKIN |

| Use | RECOMMENDED |

Repository Summary

| Checkout URI | https://github.com/narayave/mh5_anomaly_detector.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2018-08-19 |

| Dev Status | MAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Package Description

Additional Links

Maintainers

- Vedanth Narayanan

Authors

- Vedanth Narayanan

ROS Anomaly Detector package

Overview

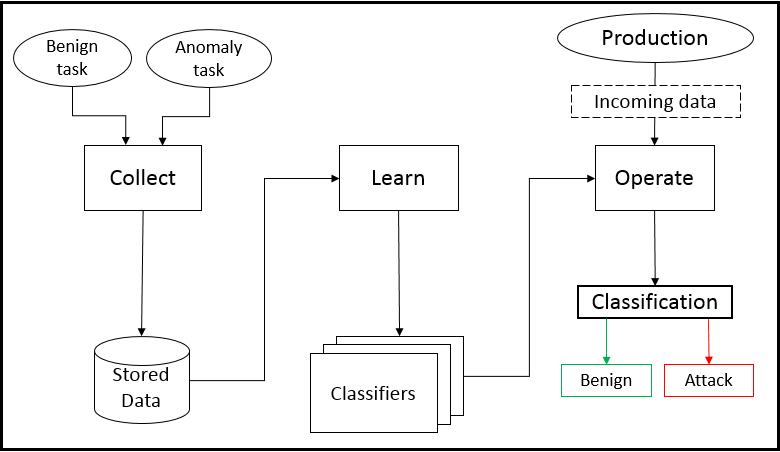

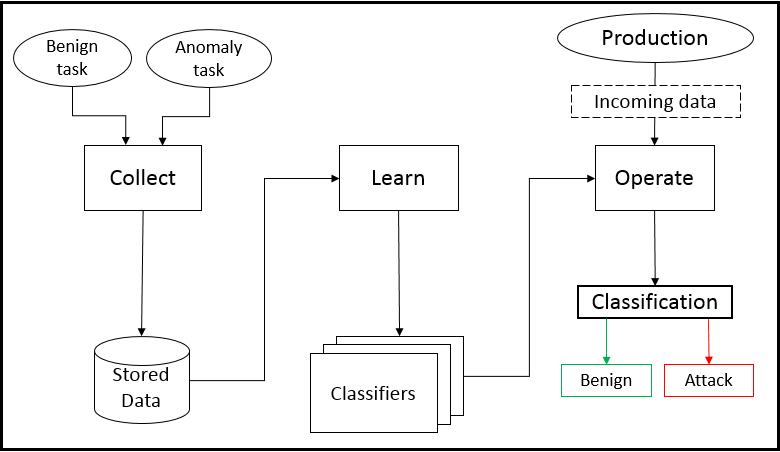

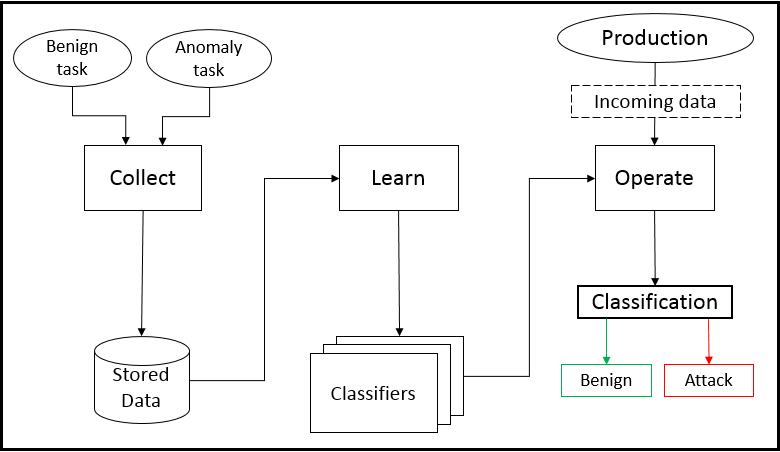

The ROS Anomaly Detector Module (ADM) is designed to execute alongside industrial robotic arm tasks to detect unintended deviations at the application level. The ADM utilizes a learning based technique to achieve this. The process has been made efficient by building the ADM as a ROS package that aptly fits in the ROS ecosystem. While this module is specific to anomaly detection for an industrial arm, it is extensible to other projects with similar goals. This is meant to be a starting point for other such projects.

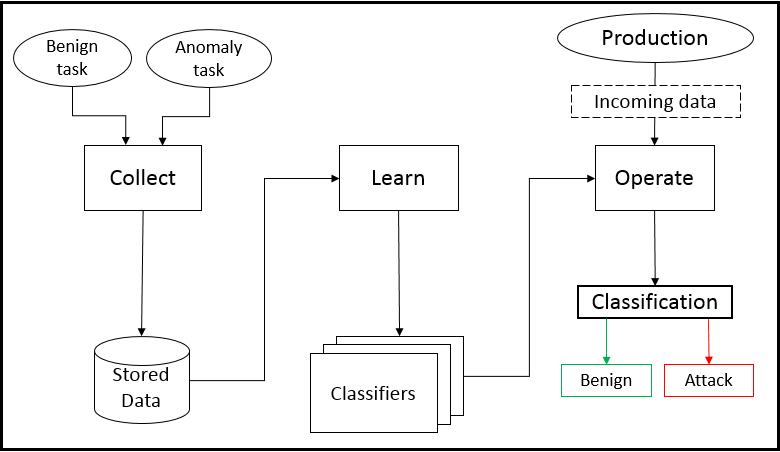

The crux of anomaly detection within this module relies on a three step process. The steps includes creating datasets out of the published messages within ROS, training learning models from those datasets, and deploying it in production. Appropriately, the three modes that the ROS ADM can be executed in are Collect, Learn, and Operate. The following image presents the high level workflow that is meant to be followed.

Collect Mode

The ADM is first run in the Collect mode after the task has been programmed and assessed in the commissioning phase of the robot. This mode runs AND collects data as a node by subscribing to the ‘/joint_states’ topic, of message type ‘sensor_msgs/JointState,’ within ROS.

Learn Mode

The goal of this mode is submitting the user with trained classifiers. In order to accomplish this the user is depended on to supply not only the datasets for training, but also the classifiers of interest. These are supplied to an internal library via a script. The internal library semi-automates the learning process. Particularly, the user is only required to supply their script to the Interface component. It in turn, pre-processes the data (dimensionality reduction if specified), and the set of classifiers are all trained with their appropriate datasets. After training, the classifiers are saved in storage.

At a high level, the user’s responsibilities includes writing a simple script, and supplying the names of datasets in a structured manner. From a small set of functions calls to the Interface class, the classifiers are quickly trained and are efficiently prepared for deployment. There are four key, programmatic steps to accomplish in order to get training predictions and its associated accuracies. First, the Interface class needs to be instantiated by passing a YAML input file as an argument. YAML is a human-friendly data serialization method, that is interoperable between programming languages and systems. Next, the user creates instances of the classifiers they want trained. The user then passes off the classifiers to the Interface class for training, by calling the

``` function. Lastly, testing predictions can be gathered for all classifiers, by calling

```get_testing_predictions()

```.

The following python script presents an example of a script utilizing the learning library.

```python

if __name__ == "__main__":

unsupervised_models = []

supervised_models = []

# Step 1

input_file = sys.argv[1] # YAML input

interface = Interface(input_file)

# Step 2

ocsvm = svm.OneClassSVM(nu=0.5, kernel="rbf", gamma=1000)

unsupervised_models.append(('ocsvm', clf_ocsvm))

svm1 = svm.SVC(kernel='rbf', gamma=5, C=10)

supervised_models.append(('rbfsvm1', svm1))

svm2 = svm.SVC(kernel='rbf', gamma=50, C=100)

supervised_models.append(('rbfsvm2', svm2))

# Step 3

interface.genmodel_train(unsupervised_models, supervised_models)

# Step 4

unsup, sup = interface.get_testing_predictions()

Learning library

File truncated at 100 lines see the full file

Dependant Packages

Launch files

- launch/module.launch

-

- mode_arg

- other_args

- file_name

- pkg_name

- launch/run.launch

- THIS IS AN EXAMPLE FILE

-

Messages

Services

Plugins

Recent questions tagged mh5_anomaly_detector at Robotics Stack Exchange


At a high level, the user’s responsibilities includes writing a simple script, and supplying the names of datasets in a structured manner. From a small set of functions calls to the Interface class, the classifiers are quickly trained and are efficiently prepared for deployment. There are four key, programmatic steps to accomplish in order to get training predictions and its associated accuracies. First, the Interface class needs to be instantiated by passing a YAML input file as an argument. YAML is a human-friendly data serialization method, that is interoperable between programming languages and systems. Next, the user creates instances of the classifiers they want trained. The user then passes off the classifiers to the Interface class for training, by calling the

``` function. Lastly, testing predictions can be gathered for all classifiers, by calling

```get_testing_predictions()

```.

The following python script presents an example of a script utilizing the learning library.

```python

if __name__ == "__main__":

unsupervised_models = []

supervised_models = []

# Step 1

input_file = sys.argv[1] # YAML input

interface = Interface(input_file)

# Step 2

ocsvm = svm.OneClassSVM(nu=0.5, kernel="rbf", gamma=1000)

unsupervised_models.append(('ocsvm', clf_ocsvm))

svm1 = svm.SVC(kernel='rbf', gamma=5, C=10)

supervised_models.append(('rbfsvm1', svm1))

svm2 = svm.SVC(kernel='rbf', gamma=50, C=100)

supervised_models.append(('rbfsvm2', svm2))

# Step 3

interface.genmodel_train(unsupervised_models, supervised_models)

# Step 4

unsup, sup = interface.get_testing_predictions()

Learning library

File truncated at 100 lines see the full file

Dependant Packages

Launch files

- launch/module.launch

-

- mode_arg

- other_args

- file_name

- pkg_name

- launch/run.launch

- THIS IS AN EXAMPLE FILE

-

Messages

Services

Plugins

Recent questions tagged mh5_anomaly_detector at Robotics Stack Exchange







Learn Mode