Repository Summary

| Field | Value |
|---|---|
| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |
| VCS Type | git |
| VCS Version | master |
| Last Updated | 2025-11-12 |
| Dev Status | MAINTAINED |
| Released | RELEASED |
| Contributing | Help Wanted (-), Good First Issues (-), Pull Requests to Review (-) |

Packages

| Name | Version |
|---|---|
| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package makes the Arduino Nicla Vision board ready to use in the ROS world! :boom:

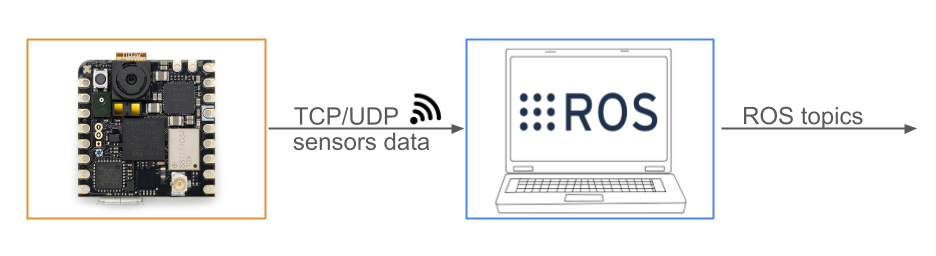

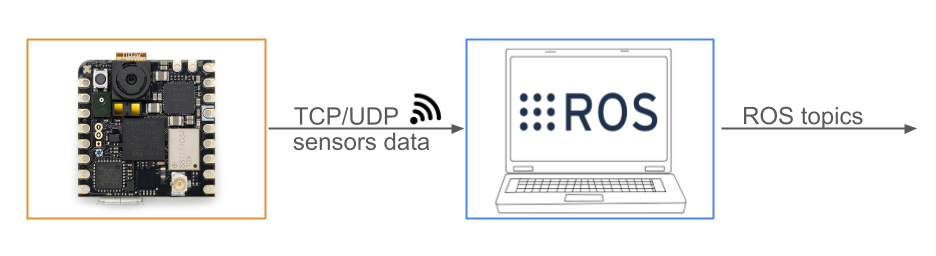

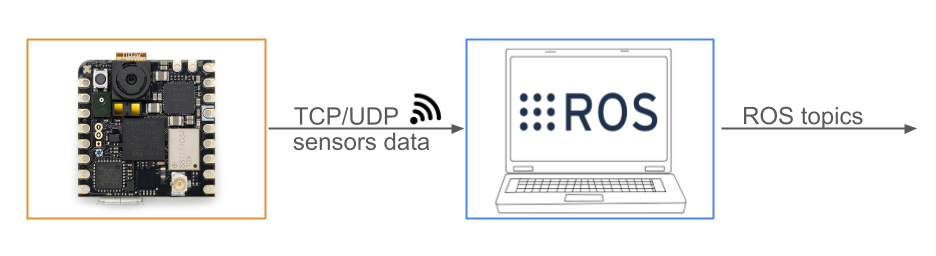

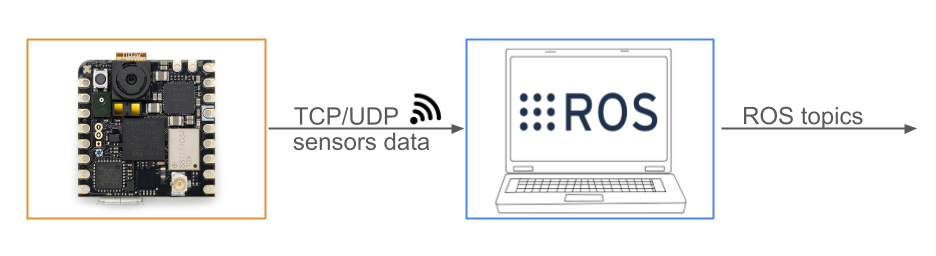

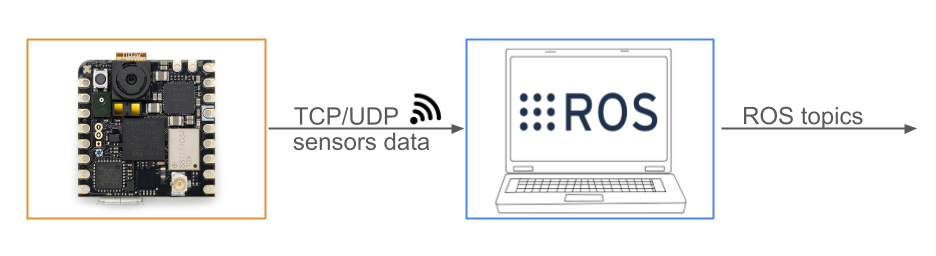

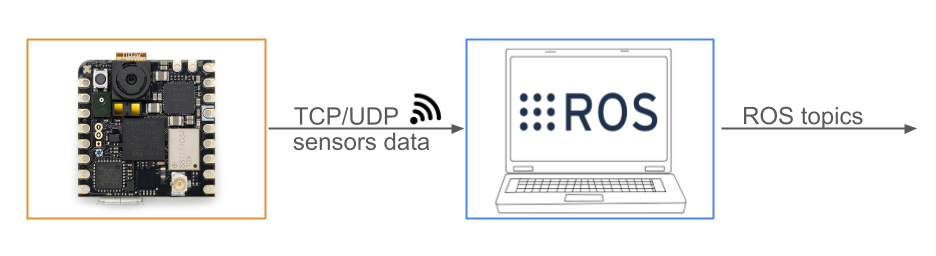

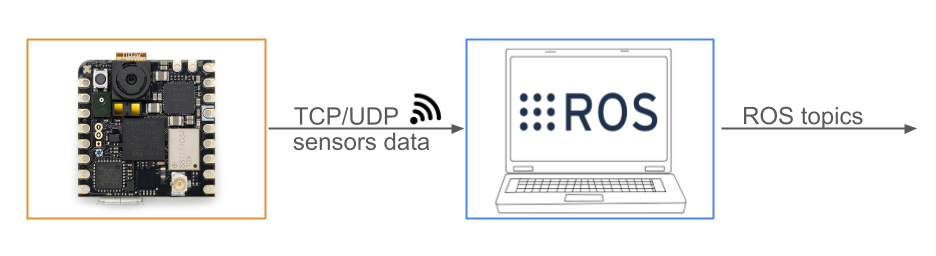

The implemented architecture is shown in the image above: the Arduino Nicla Vision board streams its sensor data to a ROS-running machine over a TCP/UDP socket or over Serial, i.e. UART over the USB cable. This package runs on the ROS-running machine, where it deserializes the received data and publishes it on the corresponding ROS topics.

Here is a list of the available sensors with their respective ROS topics:

- 2MP color camera streams on:
  - `/nicla/camera/camera_info`
  - `/nicla/camera/image_raw`
  - `/nicla/camera/image_raw/compressed`
- Time-of-Flight (distance) sensor streams on:
  - `/nicla/tof`
- Microphone streams on:
  - `/nicla/audio`
  - `/nicla/audio_info`
  - `/nicla/audio_stamped`
  - `/nicla/audio_recognized`
- IMU streams on:
  - `/nicla/imu`

You can configure this package through launch parameters to receive sensor data over either a UDP or a TCP socket connection, also specifying the socket IP address. You can also choose which sensors are streamed into the ROS environment. This repository contains the Python code, optimised for receiving the data from the board and then publishing it on ROS topics.

Installation

Step-by-step instructions on how to get the ROS package running

Binaries are available for Noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build:

$ cd <your_workspace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workspace>

$ catkin build

$ source <your_workspace>/devel/setup.bash

Arduino Nicla Vision setup

After completing the setup steps in the Nicla Vision Drivers repository, just power on your Arduino Nicla Vision. It will automatically connect to the network and start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

This module can optionally run speech recognition and publish the recognized words on the `/nicla/audio_recognized` topic. At the moment, VOSK is used. Only the Arduino version is supported.

VOSK setup

- `pip install vosk`

- Download a VOSK model: https://alphacephei.com/vosk/models

- Check the `recognition` arguments in the `nicla_receiver.launch` file
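For orientation, the sketch below shows how VOSK is typically driven from Python. It is not this package's implementation: the function names `extract_text` and `recognize`, the 16 kHz default sample rate, and the model path are assumptions to adapt to your own setup.

```python
import json


def extract_text(result_json):
    """Pull the recognized text out of a VOSK JSON result string."""
    return json.loads(result_json).get("text", "")


def recognize(pcm_chunks, model_path, sample_rate=16000):
    """Feed 16-bit mono PCM chunks to VOSK and yield recognized phrases.

    Assumptions: model_path points to an unpacked VOSK model directory,
    and sample_rate matches the audio that is being fed in.
    """
    # Imported lazily so the helper above works without vosk installed.
    from vosk import Model, KaldiRecognizer

    recognizer = KaldiRecognizer(Model(model_path), sample_rate)
    for chunk in pcm_chunks:
        # AcceptWaveform returns a truthy value once an utterance is complete.
        if recognizer.AcceptWaveform(chunk):
            text = extract_text(recognizer.Result())
            if text:
                yield text
    # Flush whatever is still buffered at the end of the stream.
    text = extract_text(recognizer.FinalResult())
    if text:
        yield text
```

`recognize` is a generator, so phrases become available as soon as VOSK finalizes each utterance rather than only at the end of the stream.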

Usage

Follow the steps below to enjoy your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the connection type to use: TCP, UDP, or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set `receiver_ip` to the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address listed under the interface whose name starts with "enp". *Note*: this argument is ignored if `connection_type:="serial"`.
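If `ifconfig` is not available, the address can also be looked up from Python. This is a minimal sketch using only the standard library; the function name `guess_local_ip` and the probe address are illustrative assumptions, and on machines with several network interfaces the result may not be the address the board can reach.

```python
import socket


def guess_local_ip(probe_host="192.0.2.1", probe_port=80):
    """Return the IPv4 address the OS would use for outgoing traffic.

    Connecting a UDP socket sends no packets; it only makes the OS pick
    a source address for the given destination. The default probe host
    is a documentation-only address (TEST-NET-1), chosen as an assumption.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        # No usable route (e.g. no network): fall back to loopback.
        return "127.0.0.1"
    finally:
        sock.close()


if __name__ == "__main__":
    print(guess_local_ip())
```

Compare the printed value with the `ifconfig` output before passing it as `receiver_ip`.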

Furthermore, using the `<optional arguments>`, you can decide:

- which sensors are streamed in ROS, e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with a Serial connection, how images are received: set `camera_receive_compressed` according to how the Nicla is sending images (COMPRESS_IMAGE)

- which socket port to use (default `receiver_port:=8002`). With a Serial connection, the `receiver_port` argument specifies the port used by the board, e.g. `receiver_port:=/dev/ttyACM0`

Once the package is running, you are ready to receive the sensor data.
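When the receiver is started from a script, the launch line can be assembled programmatically. The following is a minimal sketch, not part of the package: the helper name `build_roslaunch_command` is hypothetical, it only formats the launch arguments named in this README, and it assumes that `receiver_ip` is not needed for a Serial connection.

```python
import shlex

VALID_CONNECTION_TYPES = ("tcp", "udp", "serial")


def build_roslaunch_command(connection_type, receiver_ip=None,
                            receiver_port=None, **flags):
    """Return the roslaunch argv list for nicla_receiver.launch.

    Hypothetical helper: it only formats arguments mentioned in the README
    and does not check them against the actual launch file.
    """
    if connection_type not in VALID_CONNECTION_TYPES:
        raise ValueError(f"connection_type must be one of {VALID_CONNECTION_TYPES}")
    if connection_type != "serial" and receiver_ip is None:
        raise ValueError("receiver_ip is required for tcp and udp connections")

    argv = ["roslaunch", "nicla_vision_ros", "nicla_receiver.launch",
            f"connection_type:={connection_type}"]
    # Assumption: the IP address is only meaningful for socket connections.
    if connection_type != "serial":
        argv.append(f"receiver_ip:={receiver_ip}")
    if receiver_port is not None:
        argv.append(f"receiver_port:={receiver_port}")
    # Remaining keyword arguments (e.g. enable_imu=True) become name:=value,
    # sorted by name so the output is deterministic.
    for name, value in sorted(flags.items()):
        if isinstance(value, bool):
            value = "true" if value else "false"
        argv.append(f"{name}:={value}")
    return argv


if __name__ == "__main__":
    cmd = build_roslaunch_command("serial", receiver_port="/dev/ttyACM0",
                                  enable_imu=True, enable_audio=False)
    print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run`, which avoids shell quoting issues.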

CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions.

Repository Summary

| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-12 |

| Dev Status | MAINTAINED |

| Released | RELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package enables the Arduino Nicla Vision board to be ready-to-use in the ROS world! :boom:

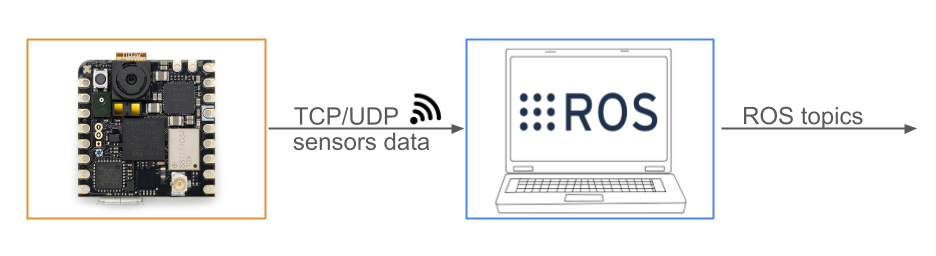

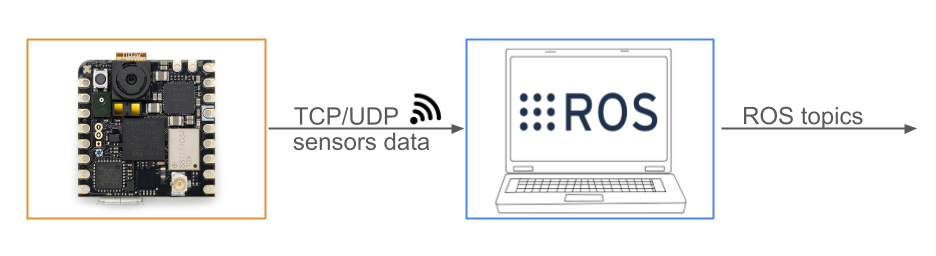

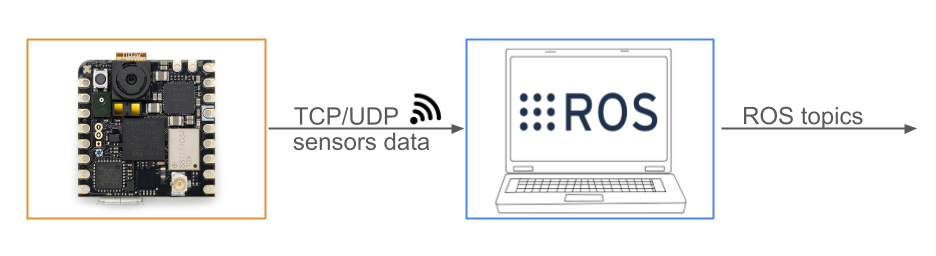

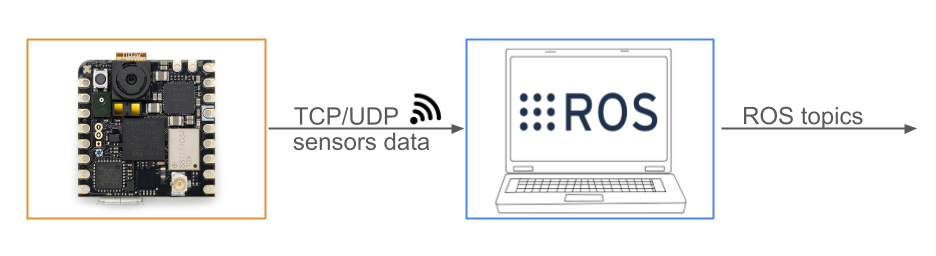

The implemented architecture is described in the above image: the Arduino Nicla Vision board streams the sensors data to a ROS-running machine through TCP/UDP socket or Serial, i.e. UART over the USB cable. This package will be running on the ROS-running machine, allowing to deserialize the received info, and stream it in the corresponding ROS topics

Here a list of the available sensors with their respective ROS topics:

-

2MP color camera streams on

/nicla/camera/camera_info/nicla/camera/image_raw/nicla/camera/image_raw/compressed

-

Time-of-Flight (distance) sensor streams on:

/nicla/tof

-

Microphone streams on:

/nicla/audio/nicla/audio_info/nicla/audio_stamped/nicla/audio_recognized

-

Imu streams on:

/nicla/imu

The user can easily configure this package, by launch parameters, to receive sensors data via either UDP or TCP socket connections, specifying also the socket IP address. Moreover, the user can decide which sensor to be streamed within the ROS environment. In this repository you can find the Python code optimised for receiving the data by the board, and subsequently publishing it through ROS topics.

Table of Contents

Installation

Step-by-step instructions on how to get the ROS package running

Binaries available for noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build :

$ cd <your_workpace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workpace>

$ catkin build

$ source <your_workpace>/devel/setup.bash

Arduino Nicla Vision setup

After having completed the setup steps in the Nicla Vision Drivers repository, just turn on your Arduino Nicla Vision. When you power on your Arduino Nicla Vision, it will automatically connect to the network and it will start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

It is possible to run a speech recognition feature directly on this module, that will then publish the recognized words on the /nicla/audio_recognized topic. At the moment, VOSK is utilized. Only Arduino version is supported.

VOSK setup

pip install vosk- Download a VOSK model https://alphacephei.com/vosk/models

- Check the

recognitionarguments in thenicla_receiver.launchfile

Usage

Follow the below steps for enjoying your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the socket type to be used, either TCP, UDP or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set the `receiver_ip` with the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address under the "enp" voice. *Note* argument is ignored if `connection_type:="tcp/udp/serial`

Furthermore, using the `<optional arguments>`, you can decide:

- which sensor to be streamed in ROS

e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with Serial connection, set `camera_receive_compressed` according to how nicla is sending images (COMPRESS_IMAGE)

- on which socket port (default `receiver_port:=8002`). For Serial connection, `receiver_port` argument is used to specify the port used by the board

e.g. `receiver_port:=/dev/ttyACM0`

Once you run it, you will be ready for receiving the sensors data

File truncated at 100 lines see the full file

CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise,

any Contribution intentionally submitted for inclusion in the Work

by You to the Licensor shall be under the terms and conditions of

this License, without any additional terms or conditions.

Notwithstanding the above, nothing herein shall supersede or modify

the terms of any separate license agreement you may have executed

with Licensor regarding such Contributions.

Repository Summary

| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-12 |

| Dev Status | MAINTAINED |

| Released | RELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package enables the Arduino Nicla Vision board to be ready-to-use in the ROS world! :boom:

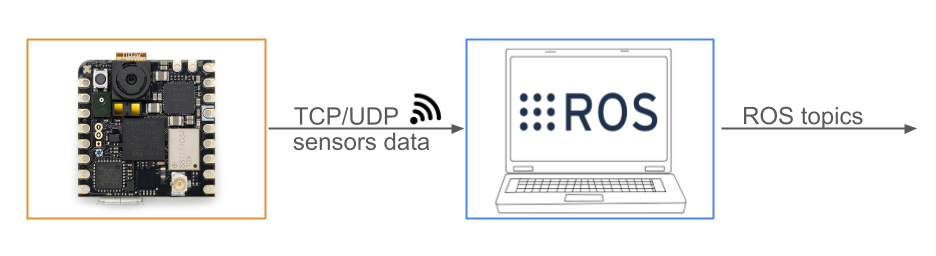

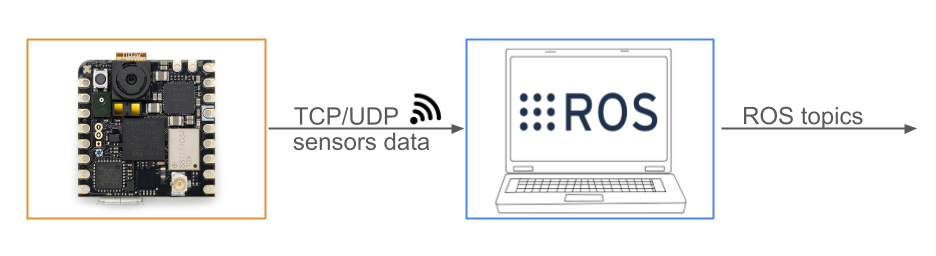

The implemented architecture is described in the above image: the Arduino Nicla Vision board streams the sensors data to a ROS-running machine through TCP/UDP socket or Serial, i.e. UART over the USB cable. This package will be running on the ROS-running machine, allowing to deserialize the received info, and stream it in the corresponding ROS topics

Here a list of the available sensors with their respective ROS topics:

-

2MP color camera streams on

/nicla/camera/camera_info/nicla/camera/image_raw/nicla/camera/image_raw/compressed

-

Time-of-Flight (distance) sensor streams on:

/nicla/tof

-

Microphone streams on:

/nicla/audio/nicla/audio_info/nicla/audio_stamped/nicla/audio_recognized

-

Imu streams on:

/nicla/imu

The user can easily configure this package, by launch parameters, to receive sensors data via either UDP or TCP socket connections, specifying also the socket IP address. Moreover, the user can decide which sensor to be streamed within the ROS environment. In this repository you can find the Python code optimised for receiving the data by the board, and subsequently publishing it through ROS topics.

Table of Contents

Installation

Step-by-step instructions on how to get the ROS package running

Binaries available for noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build :

$ cd <your_workpace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workpace>

$ catkin build

$ source <your_workpace>/devel/setup.bash

Arduino Nicla Vision setup

After having completed the setup steps in the Nicla Vision Drivers repository, just turn on your Arduino Nicla Vision. When you power on your Arduino Nicla Vision, it will automatically connect to the network and it will start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

It is possible to run a speech recognition feature directly on this module, that will then publish the recognized words on the /nicla/audio_recognized topic. At the moment, VOSK is utilized. Only Arduino version is supported.

VOSK setup

pip install vosk- Download a VOSK model https://alphacephei.com/vosk/models

- Check the

recognitionarguments in thenicla_receiver.launchfile

Usage

Follow the below steps for enjoying your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the socket type to be used, either TCP, UDP or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set the `receiver_ip` with the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address under the "enp" voice. *Note* argument is ignored if `connection_type:="tcp/udp/serial`

Furthermore, using the `<optional arguments>`, you can decide:

- which sensor to be streamed in ROS

e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with Serial connection, set `camera_receive_compressed` according to how nicla is sending images (COMPRESS_IMAGE)

- on which socket port (default `receiver_port:=8002`). For Serial connection, `receiver_port` argument is used to specify the port used by the board

e.g. `receiver_port:=/dev/ttyACM0`

Once you run it, you will be ready for receiving the sensors data

File truncated at 100 lines see the full file

CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise,

any Contribution intentionally submitted for inclusion in the Work

by You to the Licensor shall be under the terms and conditions of

this License, without any additional terms or conditions.

Notwithstanding the above, nothing herein shall supersede or modify

the terms of any separate license agreement you may have executed

with Licensor regarding such Contributions.

Repository Summary

| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-12 |

| Dev Status | MAINTAINED |

| Released | RELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package enables the Arduino Nicla Vision board to be ready-to-use in the ROS world! :boom:

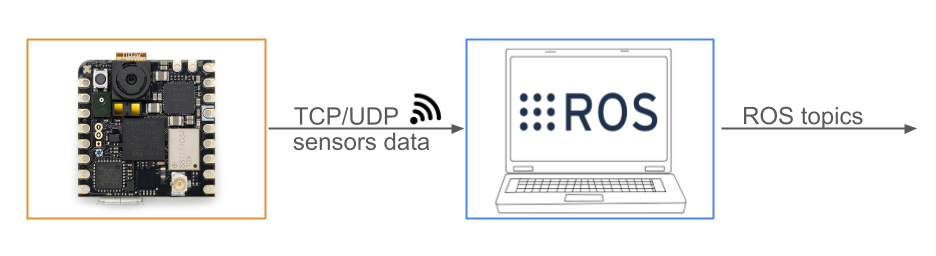

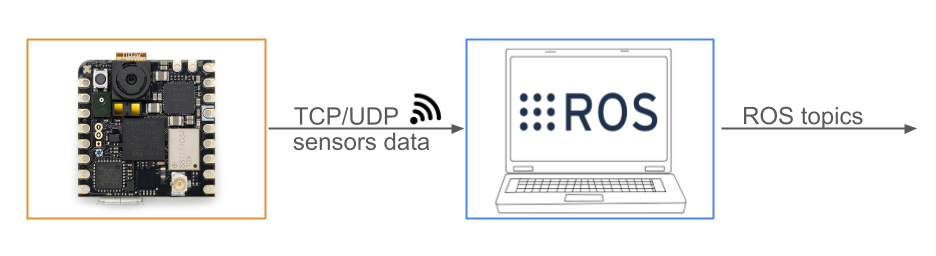

The implemented architecture is described in the above image: the Arduino Nicla Vision board streams the sensors data to a ROS-running machine through TCP/UDP socket or Serial, i.e. UART over the USB cable. This package will be running on the ROS-running machine, allowing to deserialize the received info, and stream it in the corresponding ROS topics

Here a list of the available sensors with their respective ROS topics:

-

2MP color camera streams on

/nicla/camera/camera_info/nicla/camera/image_raw/nicla/camera/image_raw/compressed

-

Time-of-Flight (distance) sensor streams on:

/nicla/tof

-

Microphone streams on:

/nicla/audio/nicla/audio_info/nicla/audio_stamped/nicla/audio_recognized

-

Imu streams on:

/nicla/imu

The user can easily configure this package, by launch parameters, to receive sensors data via either UDP or TCP socket connections, specifying also the socket IP address. Moreover, the user can decide which sensor to be streamed within the ROS environment. In this repository you can find the Python code optimised for receiving the data by the board, and subsequently publishing it through ROS topics.

Table of Contents

Installation

Step-by-step instructions on how to get the ROS package running

Binaries available for noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build :

$ cd <your_workpace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workpace>

$ catkin build

$ source <your_workpace>/devel/setup.bash

Arduino Nicla Vision setup

After having completed the setup steps in the Nicla Vision Drivers repository, just turn on your Arduino Nicla Vision. When you power on your Arduino Nicla Vision, it will automatically connect to the network and it will start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

It is possible to run a speech recognition feature directly on this module, that will then publish the recognized words on the /nicla/audio_recognized topic. At the moment, VOSK is utilized. Only Arduino version is supported.

VOSK setup

pip install vosk- Download a VOSK model https://alphacephei.com/vosk/models

- Check the

recognitionarguments in thenicla_receiver.launchfile

Usage

Follow the below steps for enjoying your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the socket type to be used, either TCP, UDP or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set the `receiver_ip` with the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address under the "enp" voice. *Note* argument is ignored if `connection_type:="tcp/udp/serial`

Furthermore, using the `<optional arguments>`, you can decide:

- which sensor to be streamed in ROS

e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with Serial connection, set `camera_receive_compressed` according to how nicla is sending images (COMPRESS_IMAGE)

- on which socket port (default `receiver_port:=8002`). For Serial connection, `receiver_port` argument is used to specify the port used by the board

e.g. `receiver_port:=/dev/ttyACM0`

Once you run it, you will be ready for receiving the sensors data

File truncated at 100 lines see the full file

CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise,

any Contribution intentionally submitted for inclusion in the Work

by You to the Licensor shall be under the terms and conditions of

this License, without any additional terms or conditions.

Notwithstanding the above, nothing herein shall supersede or modify

the terms of any separate license agreement you may have executed

with Licensor regarding such Contributions.

Repository Summary

| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-12 |

| Dev Status | MAINTAINED |

| Released | RELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package enables the Arduino Nicla Vision board to be ready-to-use in the ROS world! :boom:

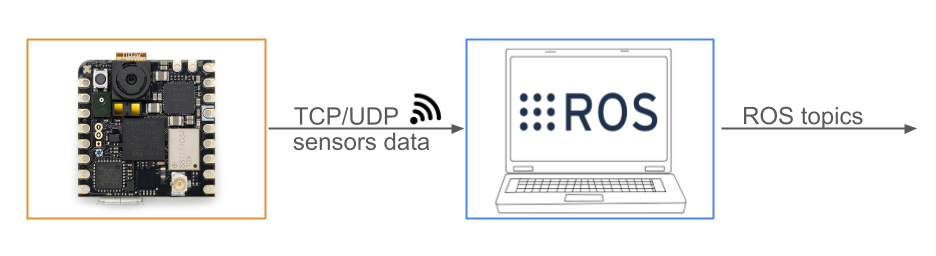

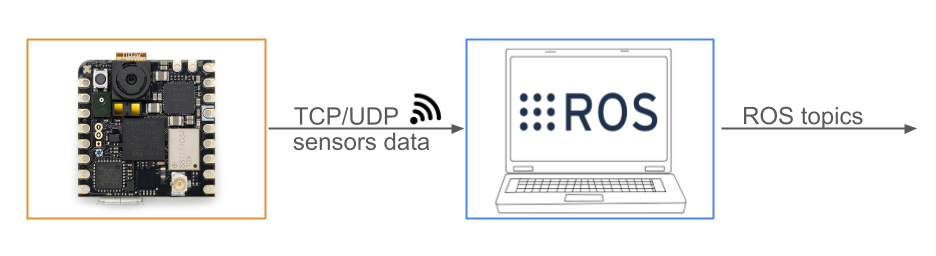

The implemented architecture is described in the above image: the Arduino Nicla Vision board streams the sensors data to a ROS-running machine through TCP/UDP socket or Serial, i.e. UART over the USB cable. This package will be running on the ROS-running machine, allowing to deserialize the received info, and stream it in the corresponding ROS topics

Here a list of the available sensors with their respective ROS topics:

-

2MP color camera streams on

/nicla/camera/camera_info/nicla/camera/image_raw/nicla/camera/image_raw/compressed

-

Time-of-Flight (distance) sensor streams on:

/nicla/tof

-

Microphone streams on:

/nicla/audio/nicla/audio_info/nicla/audio_stamped/nicla/audio_recognized

-

Imu streams on:

/nicla/imu

The user can easily configure this package, by launch parameters, to receive sensors data via either UDP or TCP socket connections, specifying also the socket IP address. Moreover, the user can decide which sensor to be streamed within the ROS environment. In this repository you can find the Python code optimised for receiving the data by the board, and subsequently publishing it through ROS topics.

Table of Contents

Installation

Step-by-step instructions on how to get the ROS package running

Binaries available for noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build :

$ cd <your_workpace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workpace>

$ catkin build

$ source <your_workpace>/devel/setup.bash

Arduino Nicla Vision setup

After having completed the setup steps in the Nicla Vision Drivers repository, just turn on your Arduino Nicla Vision. When you power on your Arduino Nicla Vision, it will automatically connect to the network and it will start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

It is possible to run a speech recognition feature directly on this module, that will then publish the recognized words on the /nicla/audio_recognized topic. At the moment, VOSK is utilized. Only Arduino version is supported.

VOSK setup

pip install vosk- Download a VOSK model https://alphacephei.com/vosk/models

- Check the

recognitionarguments in thenicla_receiver.launchfile

Usage

Follow the below steps for enjoying your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the socket type to be used, either TCP, UDP or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set the `receiver_ip` with the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address under the "enp" voice. *Note* argument is ignored if `connection_type:="tcp/udp/serial`

Furthermore, using the `<optional arguments>`, you can decide:

- which sensor to be streamed in ROS

e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with Serial connection, set `camera_receive_compressed` according to how nicla is sending images (COMPRESS_IMAGE)

- on which socket port (default `receiver_port:=8002`). For Serial connection, `receiver_port` argument is used to specify the port used by the board

e.g. `receiver_port:=/dev/ttyACM0`

Once you run it, you will be ready for receiving the sensors data

File truncated at 100 lines see the full file

CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise,

any Contribution intentionally submitted for inclusion in the Work

by You to the Licensor shall be under the terms and conditions of

this License, without any additional terms or conditions.

Notwithstanding the above, nothing herein shall supersede or modify

the terms of any separate license agreement you may have executed

with Licensor regarding such Contributions.

Repository Summary

| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-12 |

| Dev Status | MAINTAINED |

| Released | RELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package enables the Arduino Nicla Vision board to be ready-to-use in the ROS world! :boom:

The implemented architecture is described in the above image: the Arduino Nicla Vision board streams the sensors data to a ROS-running machine through TCP/UDP socket or Serial, i.e. UART over the USB cable. This package will be running on the ROS-running machine, allowing to deserialize the received info, and stream it in the corresponding ROS topics

Here a list of the available sensors with their respective ROS topics:

-

2MP color camera streams on

/nicla/camera/camera_info/nicla/camera/image_raw/nicla/camera/image_raw/compressed

-

Time-of-Flight (distance) sensor streams on:

/nicla/tof

-

Microphone streams on:

/nicla/audio/nicla/audio_info/nicla/audio_stamped/nicla/audio_recognized

-

Imu streams on:

/nicla/imu

The user can easily configure this package, by launch parameters, to receive sensors data via either UDP or TCP socket connections, specifying also the socket IP address. Moreover, the user can decide which sensor to be streamed within the ROS environment. In this repository you can find the Python code optimised for receiving the data by the board, and subsequently publishing it through ROS topics.

Table of Contents

Installation

Step-by-step instructions on how to get the ROS package running

Binaries available for noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build :

$ cd <your_workpace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workpace>

$ catkin build

$ source <your_workpace>/devel/setup.bash

Arduino Nicla Vision setup

After having completed the setup steps in the Nicla Vision Drivers repository, just turn on your Arduino Nicla Vision. When you power on your Arduino Nicla Vision, it will automatically connect to the network and it will start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

It is possible to run a speech recognition feature directly on this module, that will then publish the recognized words on the /nicla/audio_recognized topic. At the moment, VOSK is utilized. Only Arduino version is supported.

VOSK setup

- `pip install vosk`

- Download a VOSK model: https://alphacephei.com/vosk/models

- Check the `recognition` arguments in the `nicla_receiver.launch` file
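Before enabling recognition, a quick preflight check can catch the two most common setup gaps: the `vosk` package not being installed and the model directory being missing or empty. This is a minimal sketch under assumptions: the function name `check_vosk_setup` and the model path are hypothetical, and the check does not validate the model contents or read the launch file.

```python
import importlib.util
from pathlib import Path


def check_vosk_setup(model_dir):
    """Return a list of problems found; an empty list means the basics look fine."""
    problems = []
    # find_spec returns None when the package cannot be imported.
    if importlib.util.find_spec("vosk") is None:
        problems.append("Python package 'vosk' is not installed (pip install vosk)")
    path = Path(model_dir).expanduser()
    if not path.is_dir():
        problems.append(f"model directory not found: {path}")
    elif not any(path.iterdir()):
        problems.append(f"model directory is empty: {path}")
    return problems


# Hypothetical location; use the folder where you unpacked the downloaded model.
for problem in check_vosk_setup("~/vosk-models/example-model"):
    print("WARNING:", problem)
```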

Usage

Follow the steps below to use your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the connection type to be used, either TCP, UDP or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set the `receiver_ip` with the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address listed under the "enp" interface entry. *Note*: this argument is ignored if `connection_type:="serial"`.
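If you prefer to look up the address programmatically, the sketch below asks the operating system which local IPv4 address it would use for outbound traffic. It is an illustration only: the function name `guess_local_ip` and the probe address (`192.0.2.1`, a documentation-only address) are assumptions, and on a machine with several network interfaces the result may not be the interface the board is connected to, so compare it with the `ifconfig` output.

```python
import ipaddress
import socket


def guess_local_ip(probe_host="192.0.2.1", probe_port=9):
    """Return the IPv4 address the OS would use as source for outbound traffic.

    Connecting a UDP socket sends no packets; it only makes the OS select a
    source address. Falls back to 127.0.0.1 when no route is available.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        return "127.0.0.1"
    finally:
        sock.close()


ip = guess_local_ip()
ipaddress.IPv4Address(ip)  # raises ValueError if ip is not a valid IPv4 string
print(f'receiver_ip:="{ip}"')
```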

Furthermore, using the `<optional arguments>`, you can decide:

- which sensors are streamed in ROS

e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with a Serial connection, how images are received: set `camera_receive_compressed` to match how the Nicla is sending images (COMPRESS_IMAGE)

- which socket port to use (default `receiver_port:=8002`). With a Serial connection, the `receiver_port` argument specifies the serial port used by the board

e.g. `receiver_port:=/dev/ttyACM0`

Once it is running, you are ready to receive the sensor data.
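The launch arguments described above can also be assembled from a script. The helper below is a sketch, not part of the package: the function name `build_roslaunch_command` is hypothetical, and it only uses the argument names that appear in this README (`connection_type`, `receiver_ip`, `receiver_port` and the `enable_*` flags). It builds the command line and leaves running it to you.

```python
import shlex


def build_roslaunch_command(connection_type, receiver_ip=None,
                            receiver_port=None, **flags):
    """Assemble the roslaunch argument list for nicla_receiver.launch."""
    if connection_type not in ("tcp", "udp", "serial"):
        raise ValueError(f"unsupported connection_type: {connection_type!r}")
    cmd = ["roslaunch", "nicla_vision_ros", "nicla_receiver.launch",
           f"connection_type:={connection_type}"]
    if receiver_ip is not None:
        cmd.append(f"receiver_ip:={receiver_ip}")
    if receiver_port is not None:
        # A socket port number for tcp/udp, a device path for serial.
        cmd.append(f"receiver_port:={receiver_port}")
    # Boolean flags such as enable_imu=True become enable_imu:=true.
    for name, enabled in sorted(flags.items()):
        cmd.append(f"{name}:={'true' if enabled else 'false'}")
    return cmd


cmd = build_roslaunch_command("serial", receiver_port="/dev/ttyACM0",
                              enable_imu=True, enable_camera_raw=False)
print(shlex.join(cmd))
```

Returning a list rather than a single string keeps the result safe to pass to `subprocess.run` without shell quoting issues.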

CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

Repository Summary

| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-12 |

| Dev Status | MAINTAINED |

| Released | RELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package enables the Arduino Nicla Vision board to be ready-to-use in the ROS world! :boom:

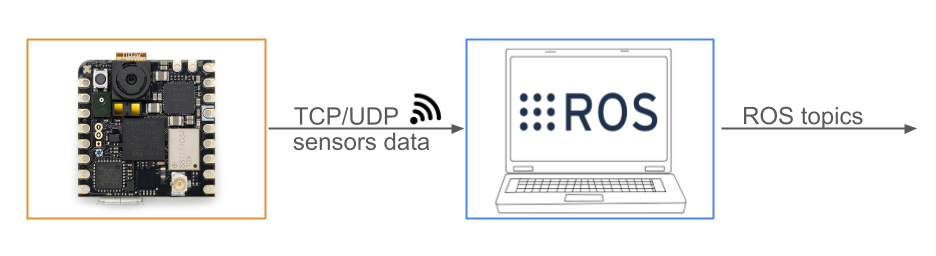

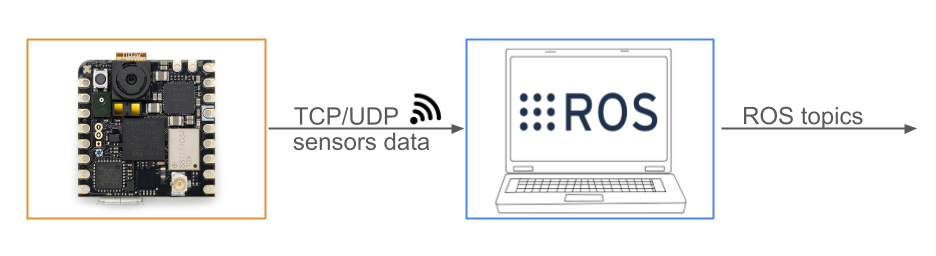

The implemented architecture is described in the above image: the Arduino Nicla Vision board streams the sensors data to a ROS-running machine through TCP/UDP socket or Serial, i.e. UART over the USB cable. This package will be running on the ROS-running machine, allowing to deserialize the received info, and stream it in the corresponding ROS topics

Here a list of the available sensors with their respective ROS topics:

-

2MP color camera streams on

/nicla/camera/camera_info/nicla/camera/image_raw/nicla/camera/image_raw/compressed

-

Time-of-Flight (distance) sensor streams on:

/nicla/tof

-

Microphone streams on:

/nicla/audio/nicla/audio_info/nicla/audio_stamped/nicla/audio_recognized

-

Imu streams on:

/nicla/imu

The user can easily configure this package, by launch parameters, to receive sensors data via either UDP or TCP socket connections, specifying also the socket IP address. Moreover, the user can decide which sensor to be streamed within the ROS environment. In this repository you can find the Python code optimised for receiving the data by the board, and subsequently publishing it through ROS topics.

Table of Contents

Installation

Step-by-step instructions on how to get the ROS package running

Binaries available for noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build :

$ cd <your_workpace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workpace>

$ catkin build

$ source <your_workpace>/devel/setup.bash

Arduino Nicla Vision setup

After having completed the setup steps in the Nicla Vision Drivers repository, just turn on your Arduino Nicla Vision. When you power on your Arduino Nicla Vision, it will automatically connect to the network and it will start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

It is possible to run a speech recognition feature directly on this module, that will then publish the recognized words on the /nicla/audio_recognized topic. At the moment, VOSK is utilized. Only Arduino version is supported.

VOSK setup

pip install vosk- Download a VOSK model https://alphacephei.com/vosk/models

- Check the

recognitionarguments in thenicla_receiver.launchfile

Usage

Follow the below steps for enjoying your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the socket type to be used, either TCP, UDP or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set the `receiver_ip` with the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address under the "enp" voice. *Note* argument is ignored if `connection_type:="tcp/udp/serial`

Furthermore, using the `<optional arguments>`, you can decide:

- which sensor to be streamed in ROS

e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with Serial connection, set `camera_receive_compressed` according to how nicla is sending images (COMPRESS_IMAGE)

- on which socket port (default `receiver_port:=8002`). For Serial connection, `receiver_port` argument is used to specify the port used by the board

e.g. `receiver_port:=/dev/ttyACM0`

Once you run it, you will be ready for receiving the sensors data

File truncated at 100 lines see the full file

CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise,

any Contribution intentionally submitted for inclusion in the Work

by You to the Licensor shall be under the terms and conditions of

this License, without any additional terms or conditions.

Notwithstanding the above, nothing herein shall supersede or modify

the terms of any separate license agreement you may have executed

with Licensor regarding such Contributions.

Repository Summary

| Checkout URI | https://github.com/ADVRHumanoids/nicla_vision_ros.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2025-11-12 |

| Dev Status | MAINTAINED |

| Released | RELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| nicla_vision_ros | 1.0.2 |

README

Nicla Vision ROS package

:rocket: Check out the ROS2 version: Nicla Vision ROS2 repository :rocket:

Description

This ROS package enables the Arduino Nicla Vision board to be ready-to-use in the ROS world! :boom:

The implemented architecture is described in the above image: the Arduino Nicla Vision board streams the sensors data to a ROS-running machine through TCP/UDP socket or Serial, i.e. UART over the USB cable. This package will be running on the ROS-running machine, allowing to deserialize the received info, and stream it in the corresponding ROS topics

Here is a list of the available sensors with their respective ROS topics:

- 2MP color camera streams on:
  - `/nicla/camera/camera_info`
  - `/nicla/camera/image_raw`
  - `/nicla/camera/image_raw/compressed`

- Time-of-Flight (distance) sensor streams on:
  - `/nicla/tof`

- Microphone streams on:
  - `/nicla/audio`
  - `/nicla/audio_info`
  - `/nicla/audio_stamped`
  - `/nicla/audio_recognized`

- IMU streams on:
  - `/nicla/imu`
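The sensor-to-topic mapping listed here can be kept in a small lookup table, for example to check which topics to expect for a given set of enabled sensors. The following is a minimal sketch: the topic names come from the list, while the dictionary layout, the sensor keys, and the `topics_for` helper are illustrative assumptions and not part of the package.

```python
# Topic names are taken from the README list; the sensor keys and the helper
# below are hypothetical names chosen for this example.
NICLA_TOPICS = {
    "camera": [
        "/nicla/camera/camera_info",
        "/nicla/camera/image_raw",
        "/nicla/camera/image_raw/compressed",
    ],
    "tof": ["/nicla/tof"],
    "microphone": [
        "/nicla/audio",
        "/nicla/audio_info",
        "/nicla/audio_stamped",
        "/nicla/audio_recognized",
    ],
    "imu": ["/nicla/imu"],
}


def topics_for(sensors):
    """Return the sorted topic names expected for the requested sensors."""
    unknown = [s for s in sensors if s not in NICLA_TOPICS]
    if unknown:
        raise KeyError(f"unknown sensor(s): {unknown}")
    return sorted(t for s in sensors for t in NICLA_TOPICS[s])
```

The returned names can be compared against the output of `rostopic list` once the receiver is running.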

The package is configured through launch parameters: you can choose to receive the sensor data over either a UDP or a TCP socket connection, specify the socket IP address, and select which sensors are streamed within the ROS environment. This repository contains the Python code, optimised for receiving the data from the board and then publishing it on ROS topics.


Installation

Step-by-step instructions on how to get the ROS package running

Binaries available for noetic:

sudo apt install ros-$ROS_DISTRO-nicla-vision-ros

For ROS2, check https://github.com/ADVRHumanoids/nicla_vision_ros2.git

Source installation

Usual catkin build:

$ cd <your_workspace>/src

$ git clone https://github.com/ADVRHumanoids/nicla_vision_ros.git

$ cd <your_workspace>

$ catkin build

$ source <your_workspace>/devel/setup.bash

Arduino Nicla Vision setup

After completing the setup steps in the Nicla Vision Drivers repository, just power on your Arduino Nicla Vision. It will automatically connect to the network and start streaming to your ROS-running machine.

Optional Audio Recognition with VOSK

A speech recognition feature can be run directly in this module; it publishes the recognized words on the `/nicla/audio_recognized` topic. At the moment, VOSK is used. Only the Arduino version is supported.

VOSK setup

- `pip install vosk`

- Download a VOSK model from https://alphacephei.com/vosk/models

- Check the `recognition` arguments in the `nicla_receiver.launch` file

Usage

Follow the steps below to use your Arduino Nicla Vision board with ROS!

Run the ROS package

- Launch the package:

$ roslaunch nicla_vision_ros nicla_receiver.launch receiver_ip:="x.x.x.x" connection_type:="tcp/udp/serial" <optional arguments>

- Set the connection type to be used, either TCP, UDP or Serial (`connection_type:=tcp`, `udp`, or `serial`).

- Set `receiver_ip` to the IP address of your ROS-running machine.

You can get this IP address by executing the following command:

$ ifconfig

and taking the "inet" address listed under the "enp" entry. *Note*: this argument is ignored if `connection_type:=serial`.
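As an alternative to reading the `ifconfig` output by hand, the address can be guessed from Python. This is a minimal sketch using only the standard library; the function name `guess_local_ip` and the probe address are assumptions made for this example (192.0.2.1 is a reserved documentation address, and no packet is actually sent). On machines with several network interfaces the result may differ from the "enp" entry, so treat it as a hint and verify it.

```python
import ipaddress
import socket


def guess_local_ip(probe_host="192.0.2.1", probe_port=80):
    """Best-effort guess of the IPv4 address used for outgoing traffic."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends nothing; it only makes the OS
        # pick the local address it would route through.
        sock.connect((probe_host, probe_port))
        address = sock.getsockname()[0]
    except OSError:
        # No usable route (e.g. offline machine): fall back to loopback.
        address = "127.0.0.1"
    finally:
        sock.close()
    # Validate and normalise the result before returning it.
    return str(ipaddress.IPv4Address(address))
```

The returned string can then be passed as `receiver_ip:=<address>` when launching the package.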

Furthermore, using the `<optional arguments>`, you can decide:

- which sensors are streamed in ROS

e.g. `enable_imu:=true enable_range:=true enable_audio:=true enable_audio_stamped:=false enable_camera_compressed:=true enable_camera_raw:=true`

- with a Serial connection, set `camera_receive_compressed` according to how the Nicla is sending images (COMPRESS_IMAGE)

- which socket port to use (default `receiver_port:=8002`). For a Serial connection, the `receiver_port` argument specifies the serial port used by the board

e.g. `receiver_port:=/dev/ttyACM0`

Once it is running, you are ready to receive the sensor data.
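When the receiver is started from a script, the launch arguments described in this section can be assembled programmatically. The sketch below only builds the command line and does not run it; the helper names `build_launch_args` and `launch_command_line` are hypothetical, while the package name, launch file name, and argument names are the ones used in this README.

```python
import shlex

VALID_CONNECTIONS = ("tcp", "udp", "serial")


def build_launch_args(connection_type, receiver_ip=None, receiver_port=None, **flags):
    """Build the roslaunch argument list for nicla_receiver.launch."""
    if connection_type not in VALID_CONNECTIONS:
        raise ValueError(f"connection_type must be one of {VALID_CONNECTIONS}")
    args = [
        "roslaunch",
        "nicla_vision_ros",
        "nicla_receiver.launch",
        f"connection_type:={connection_type}",
    ]
    if receiver_ip is not None:
        args.append(f"receiver_ip:={receiver_ip}")
    if receiver_port is not None:
        # A socket port number, or a device path for a Serial connection.
        args.append(f"receiver_port:={receiver_port}")
    for name, value in sorted(flags.items()):
        # roslaunch expects lowercase booleans: true / false.
        text = str(value).lower() if isinstance(value, bool) else str(value)
        args.append(f"{name}:={text}")
    return args


def launch_command_line(*args, **kwargs):
    """Return the same command as a single shell-safe string."""
    return shlex.join(build_launch_args(*args, **kwargs))
```

For example, `launch_command_line("serial", receiver_port="/dev/ttyACM0", enable_imu=True)` yields a command that can be pasted into a terminal or passed to `subprocess.run` as a list via `build_launch_args`.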


CONTRIBUTING

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions.

