Repository Summary

| Field | Value |
|---|---|
| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |
| VCS Type | git |
| VCS Version | master |
| Last Updated | 2023-04-04 |
| Dev Status | UNMAINTAINED |
| Released | UNRELEASED |
| Contributing | Help Wanted (-), Good First Issues (-), Pull Requests to Review (-) |

Packages

| Name | Version |
|---|---|
| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful, and would you like to contribute? :smile:

See the Contributing section to learn how you can make this package better for future users. :hugs:

ROS package to calibrate a camera and a LiDAR.

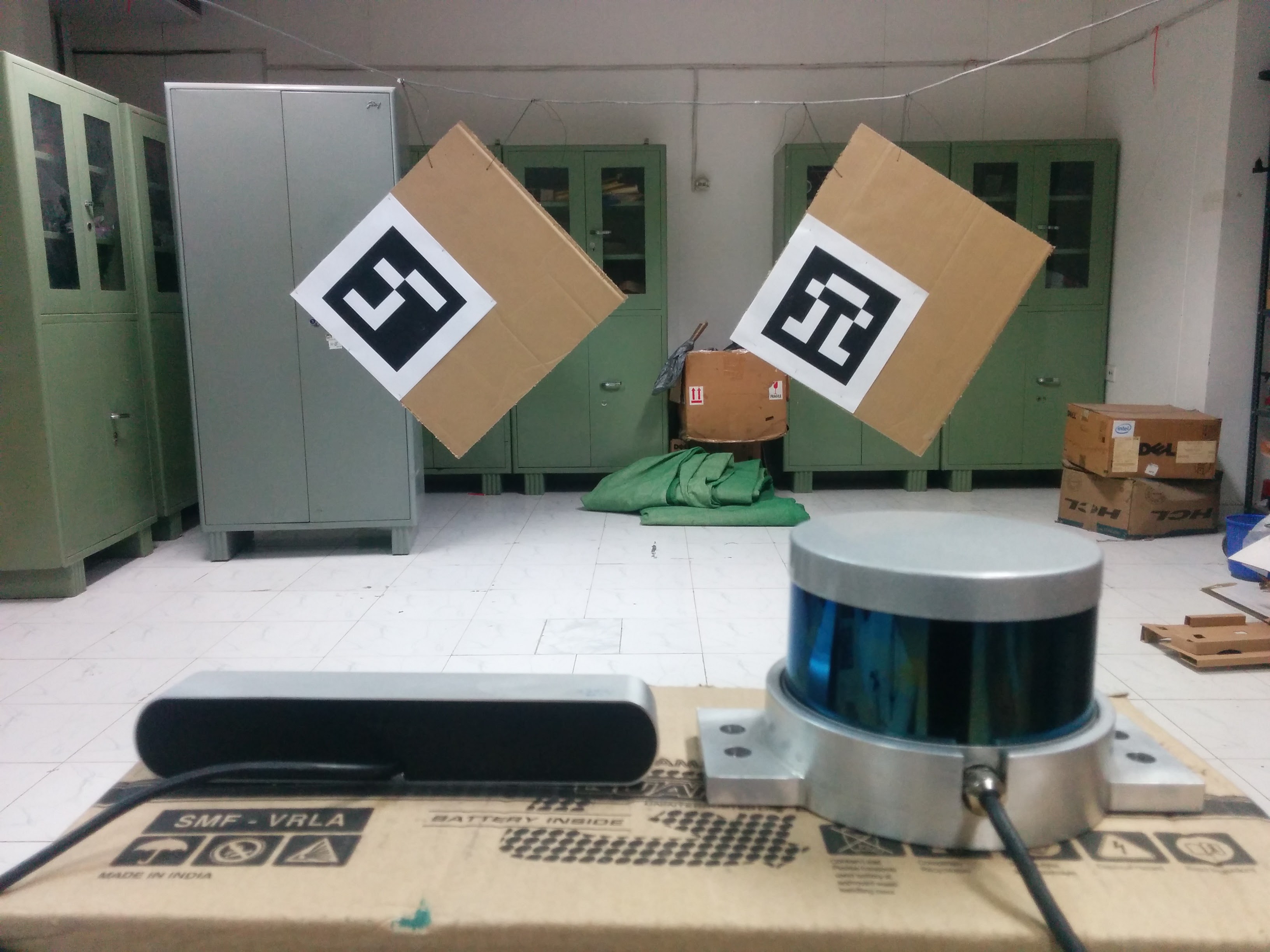

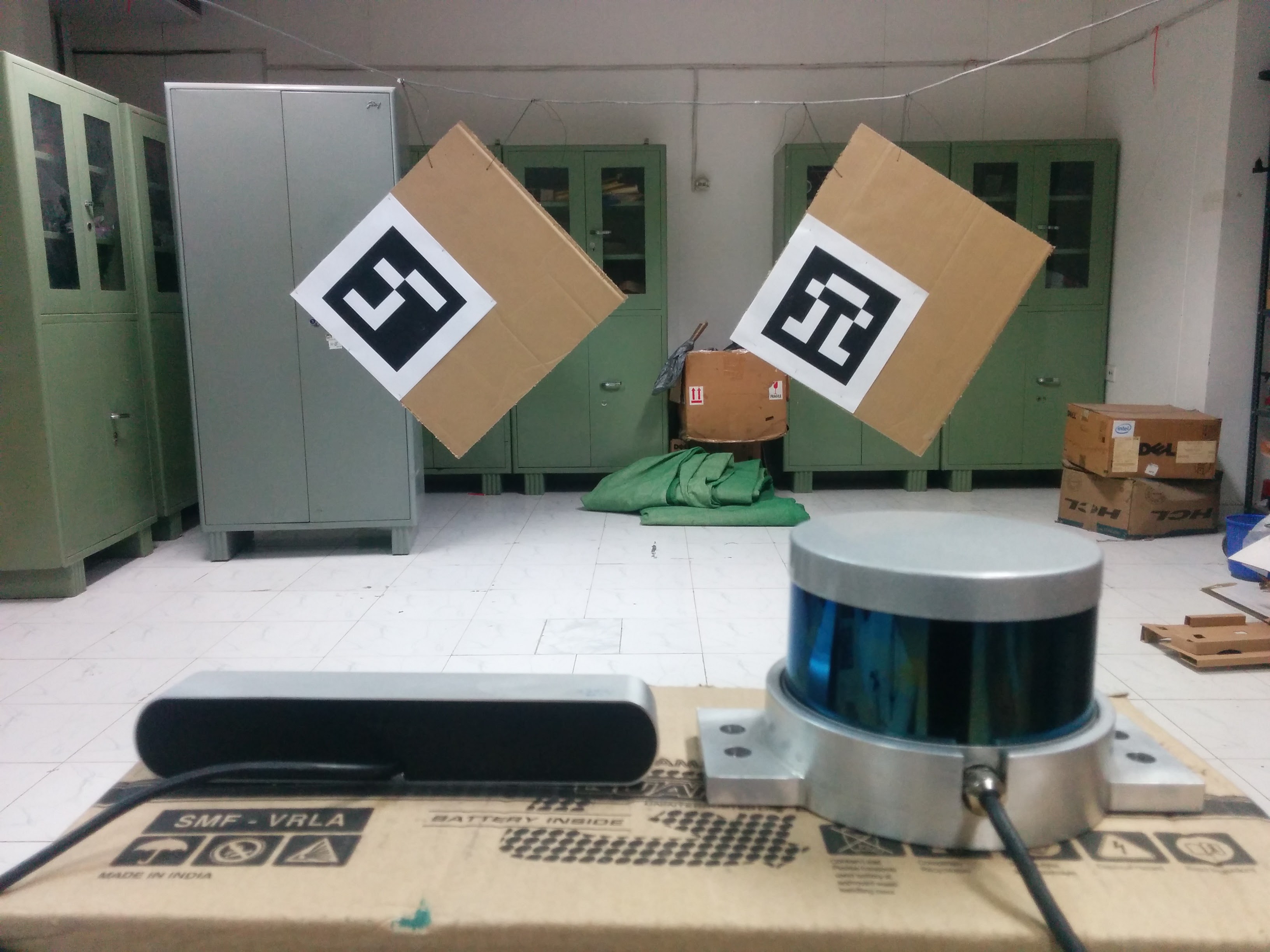

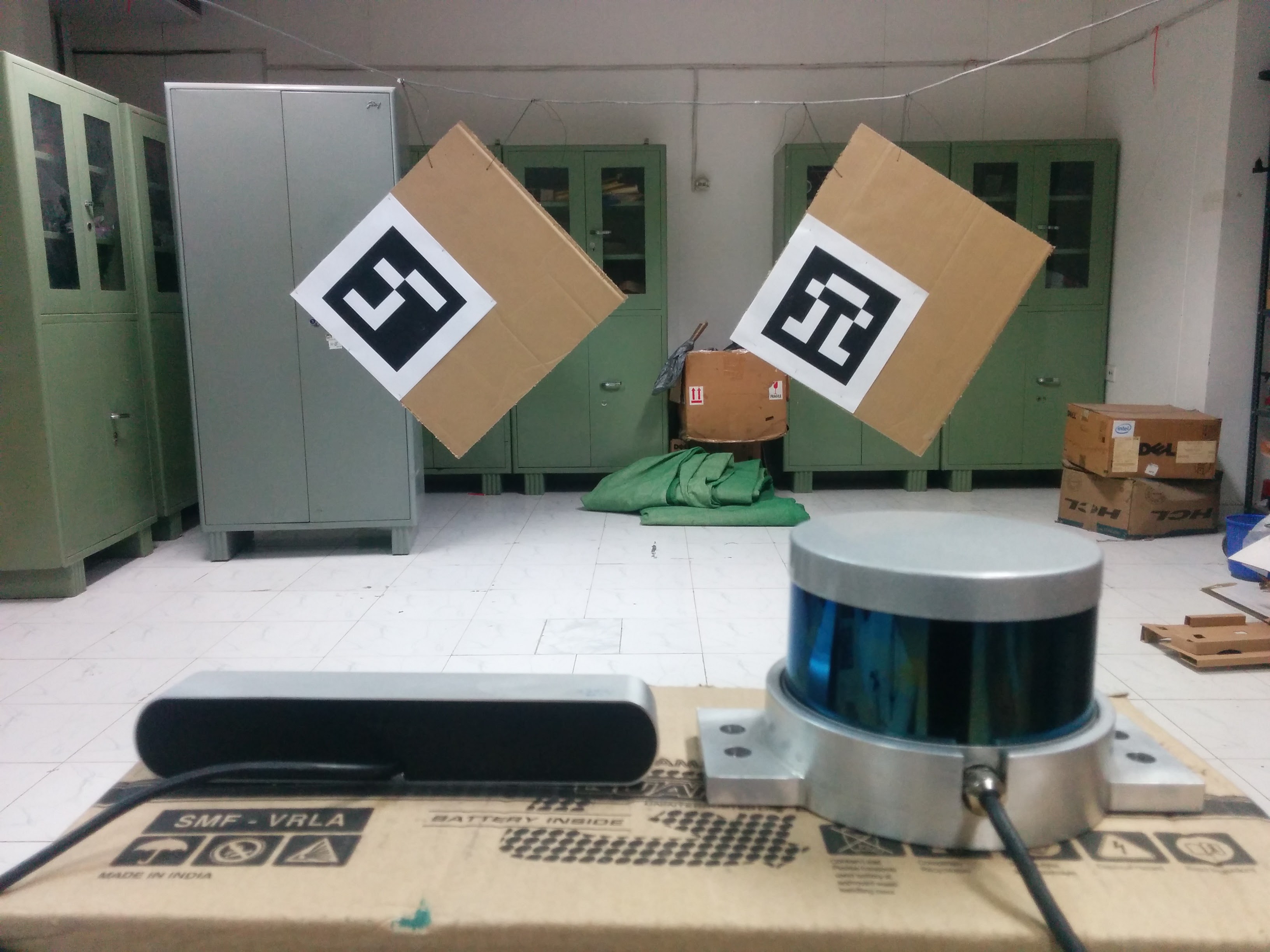

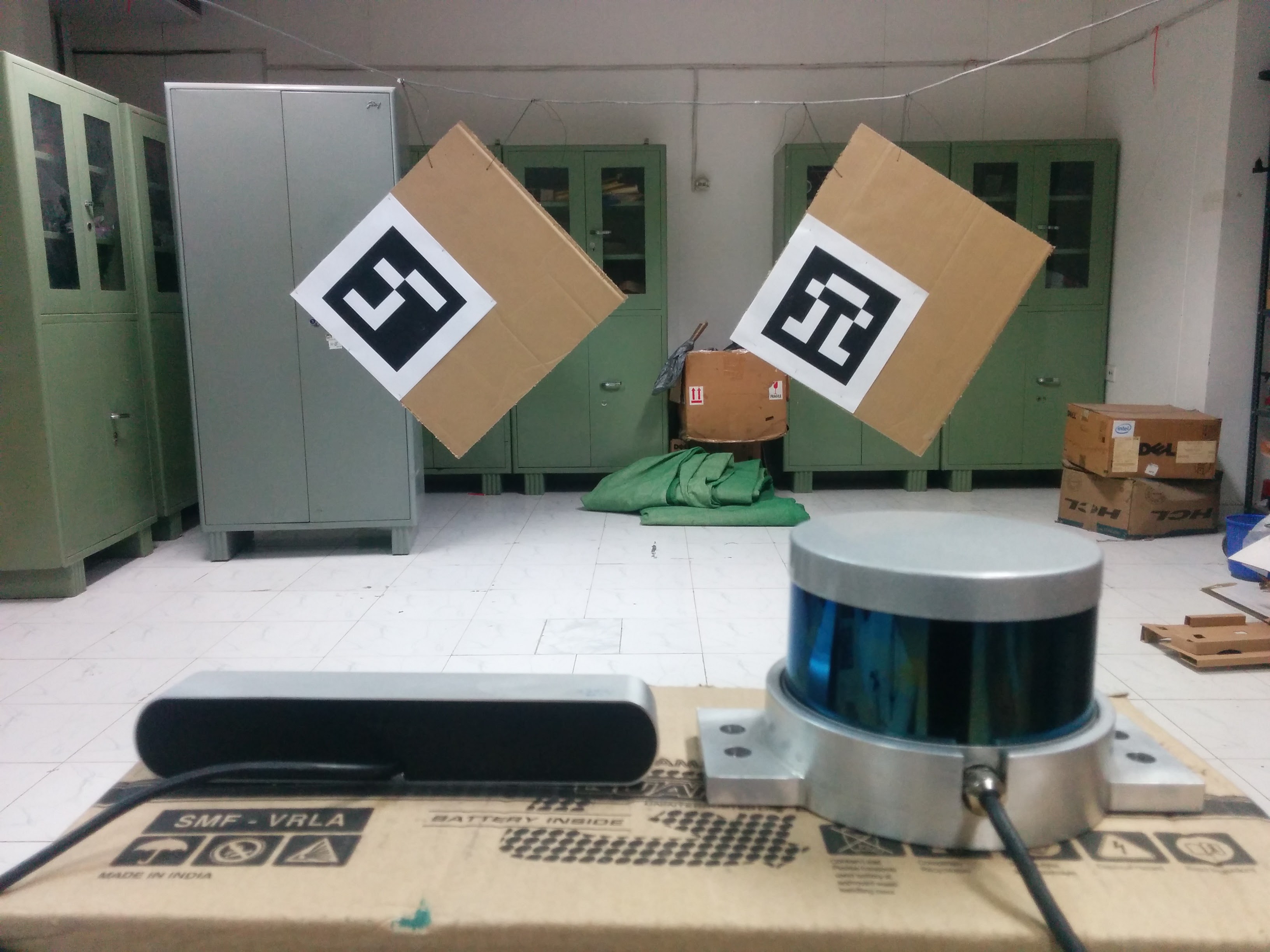

The package calibrates a LiDAR (configurations are provided for Hesai and Velodyne hardware) with a camera (monocular or stereo).

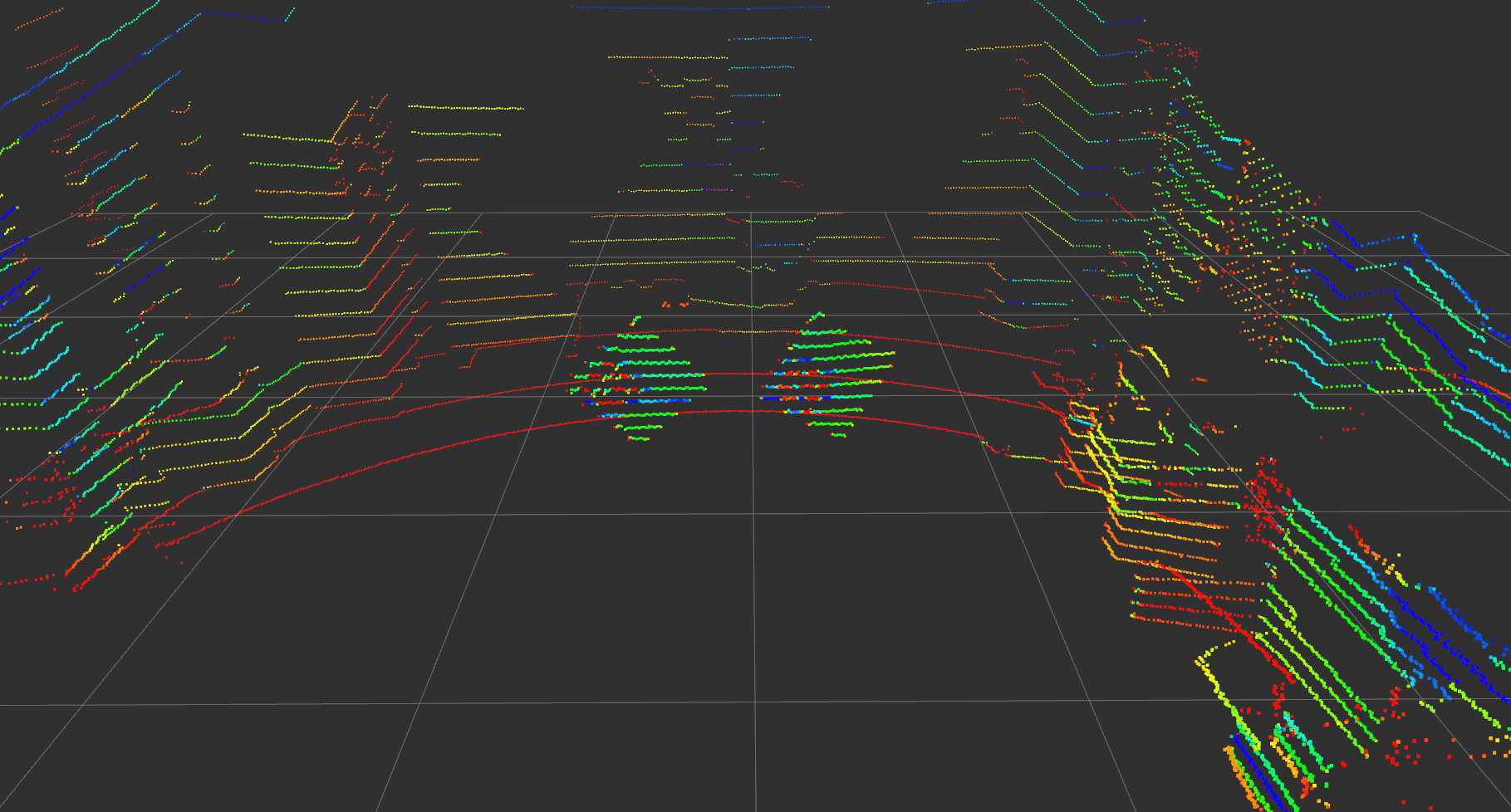

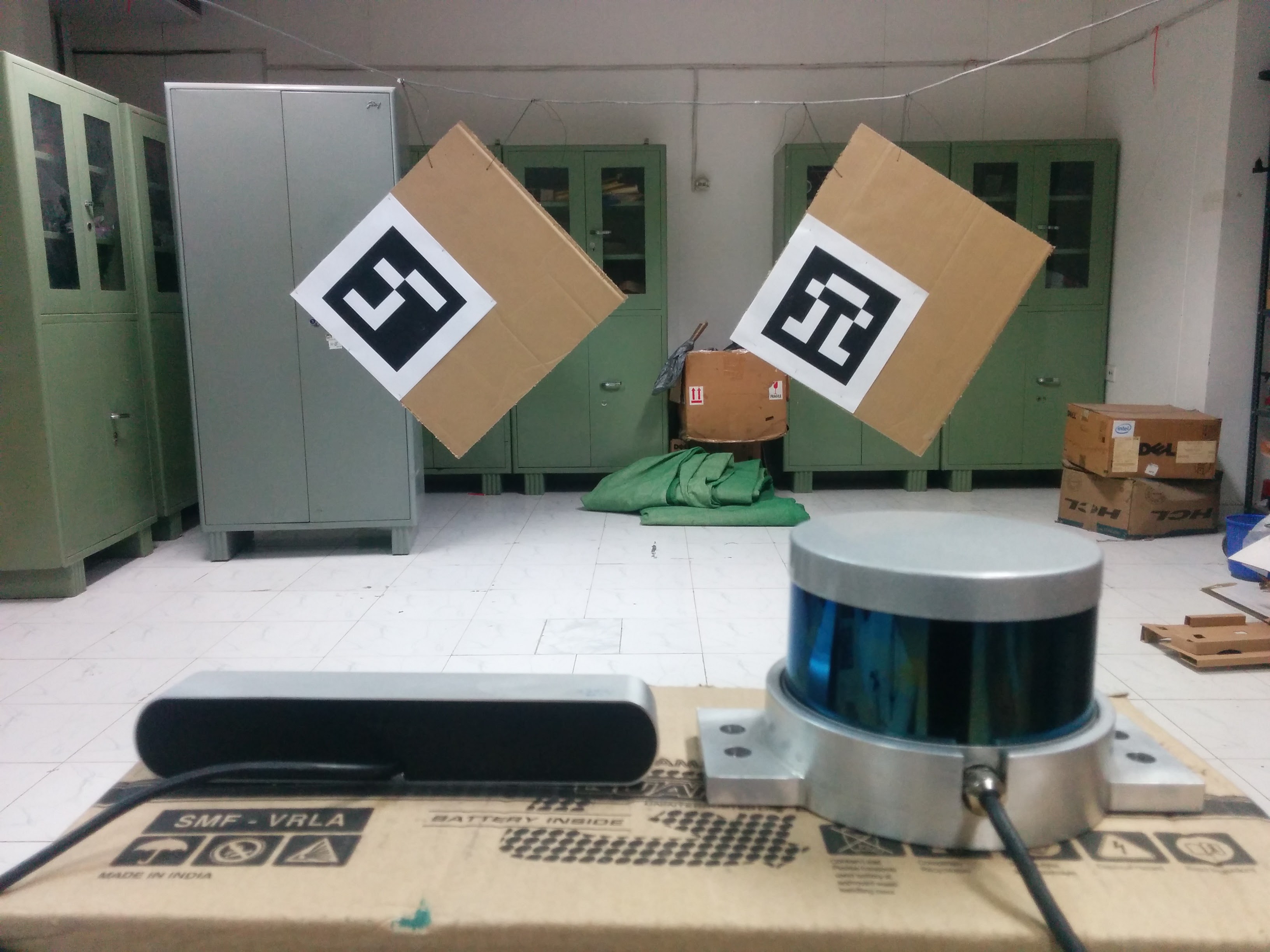

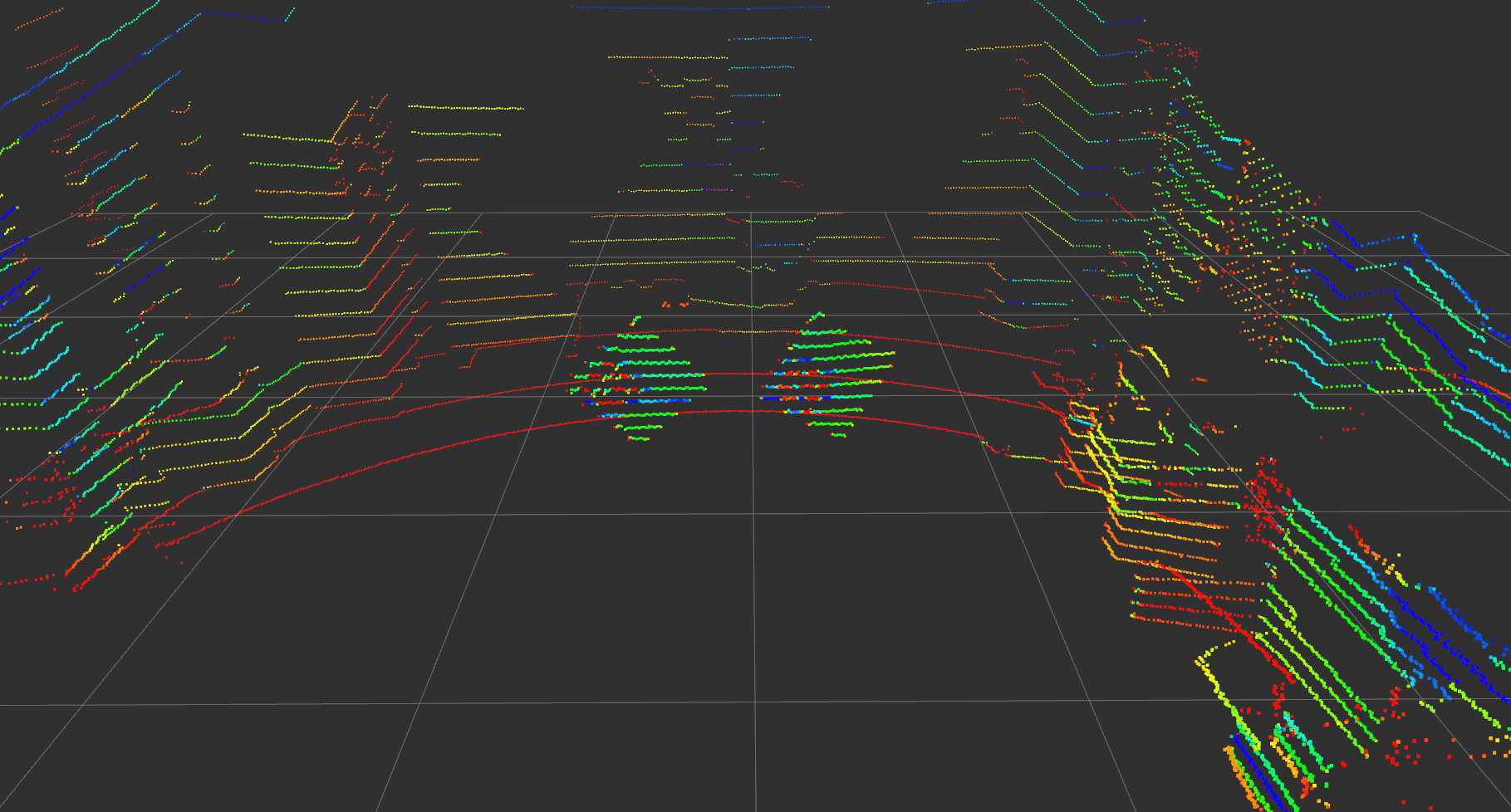

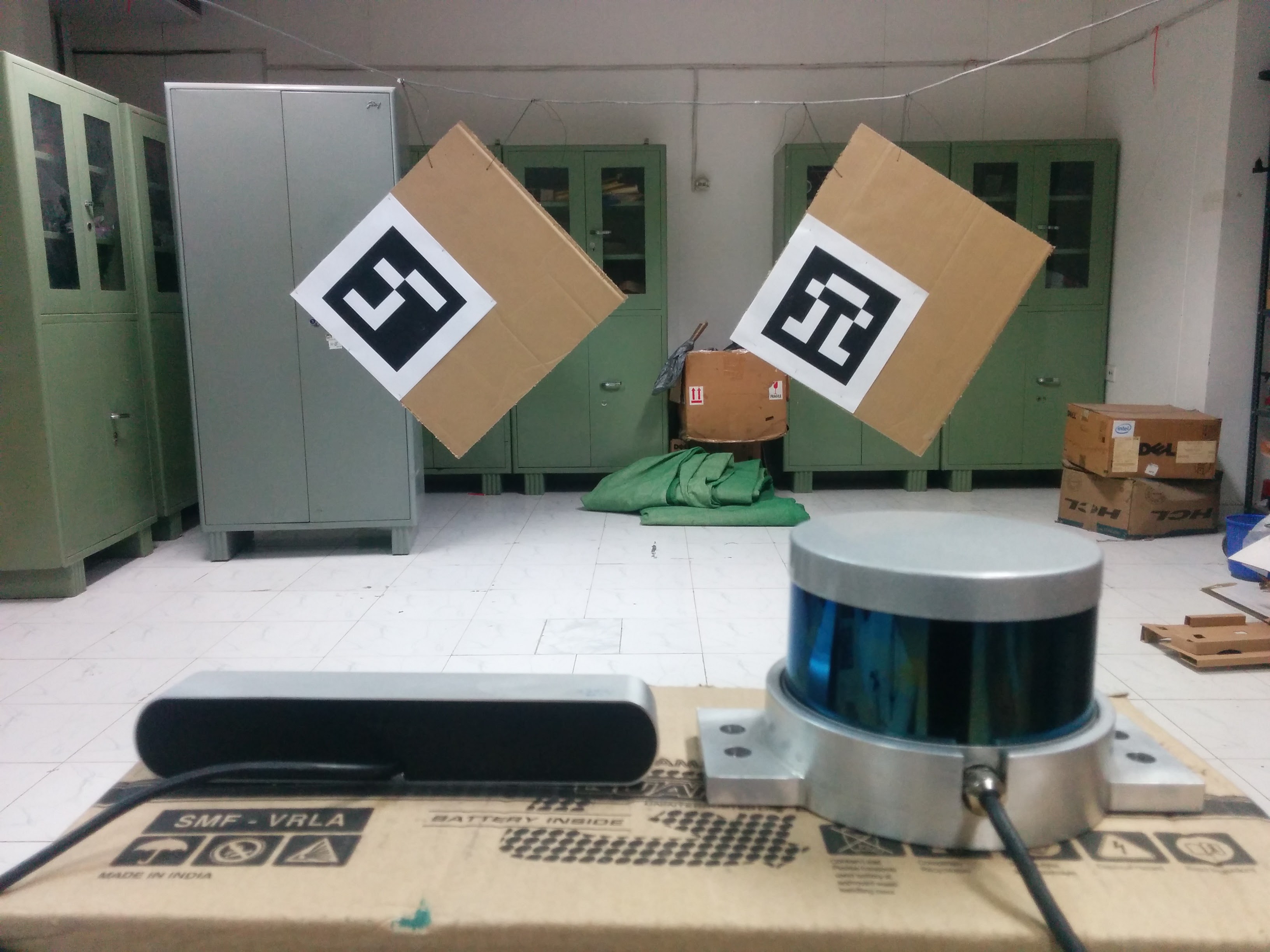

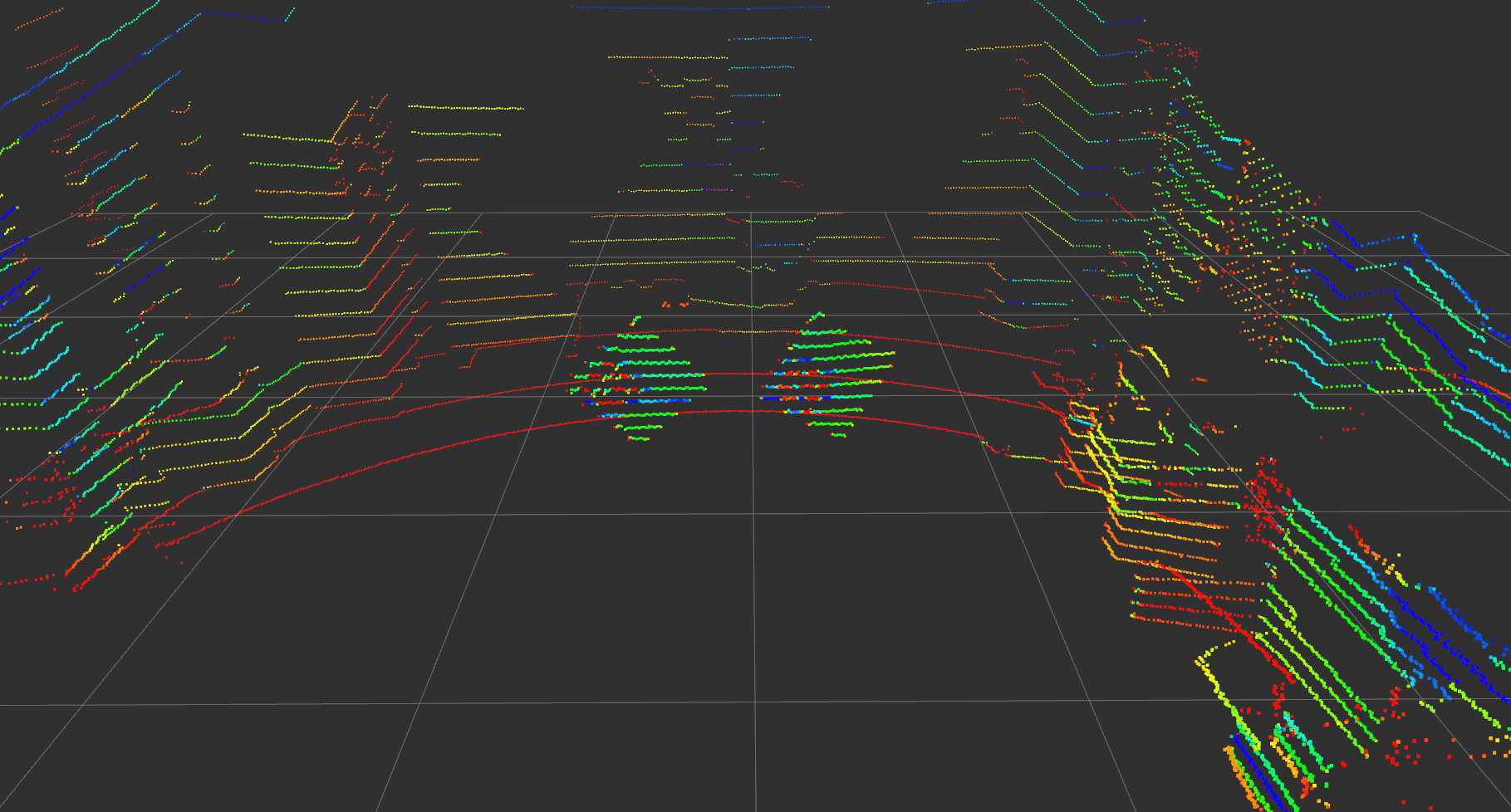

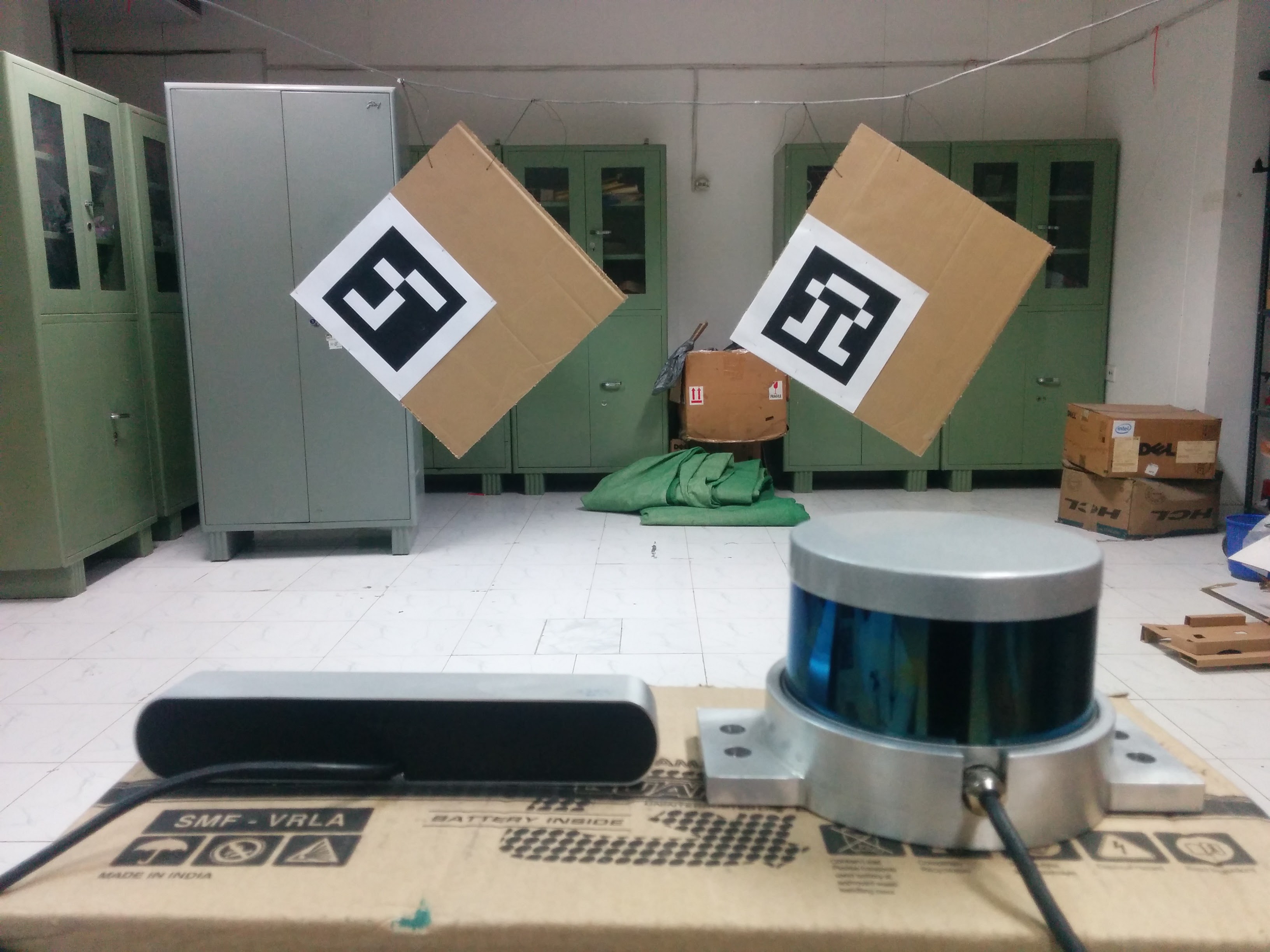

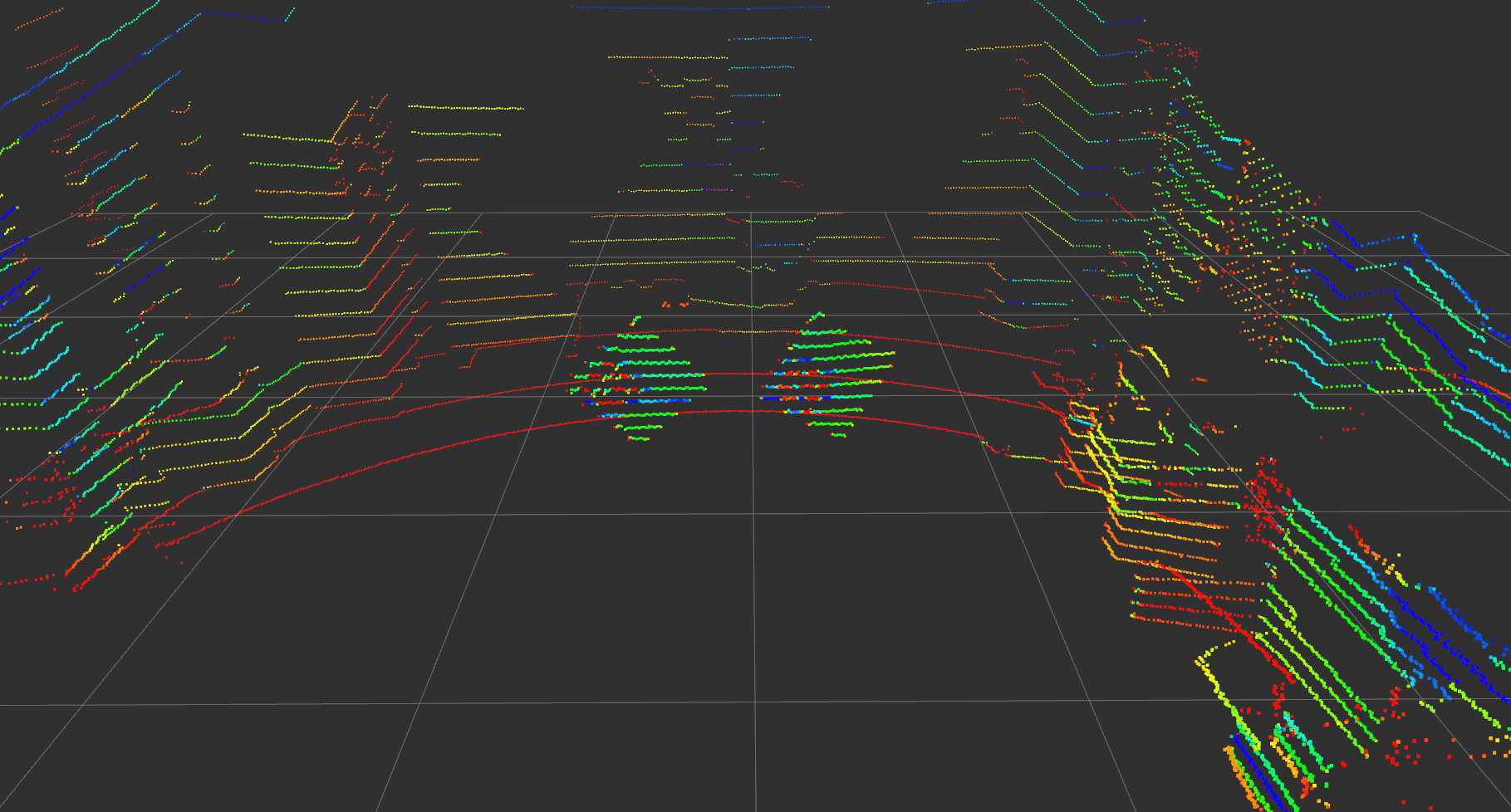

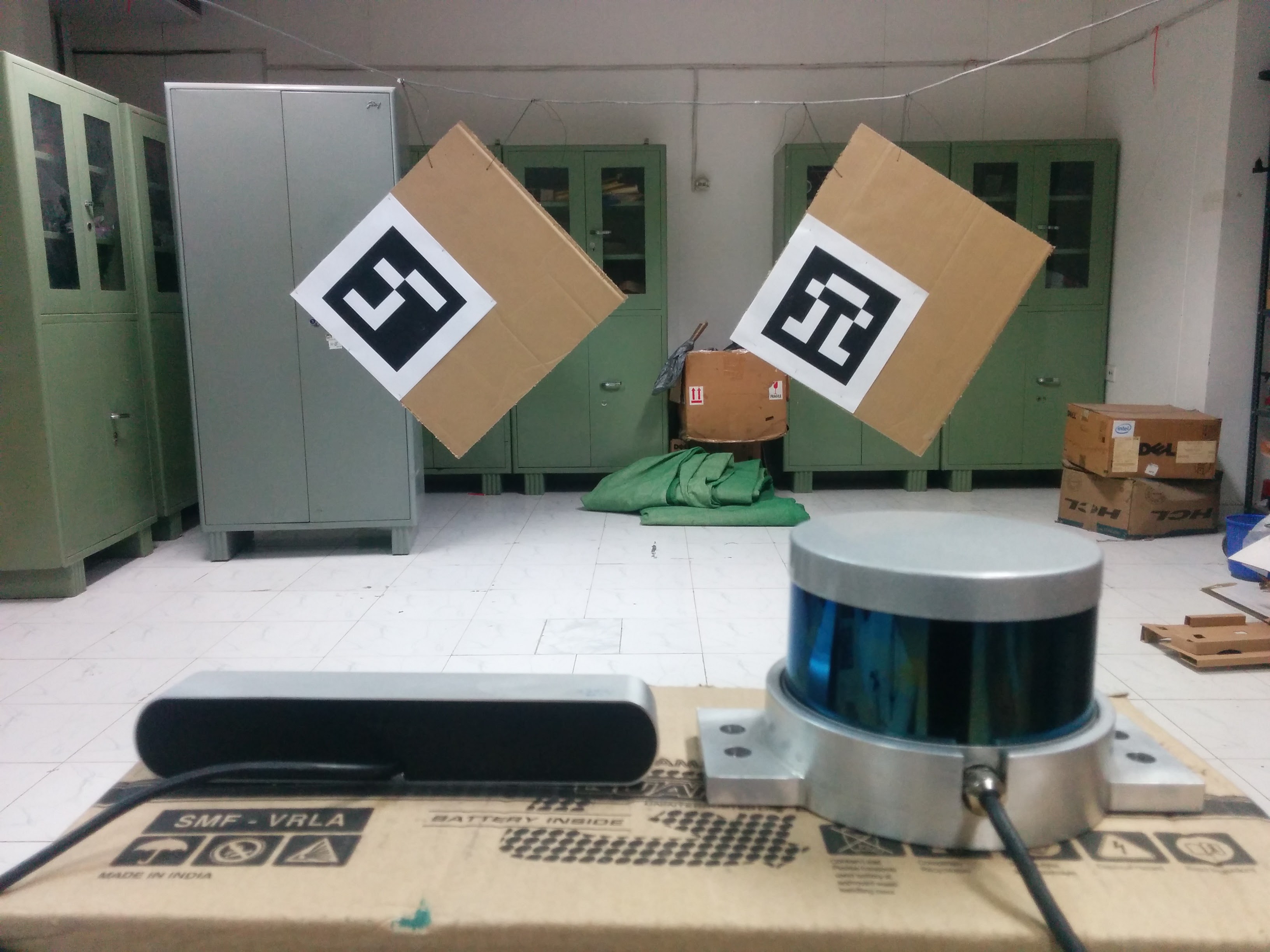

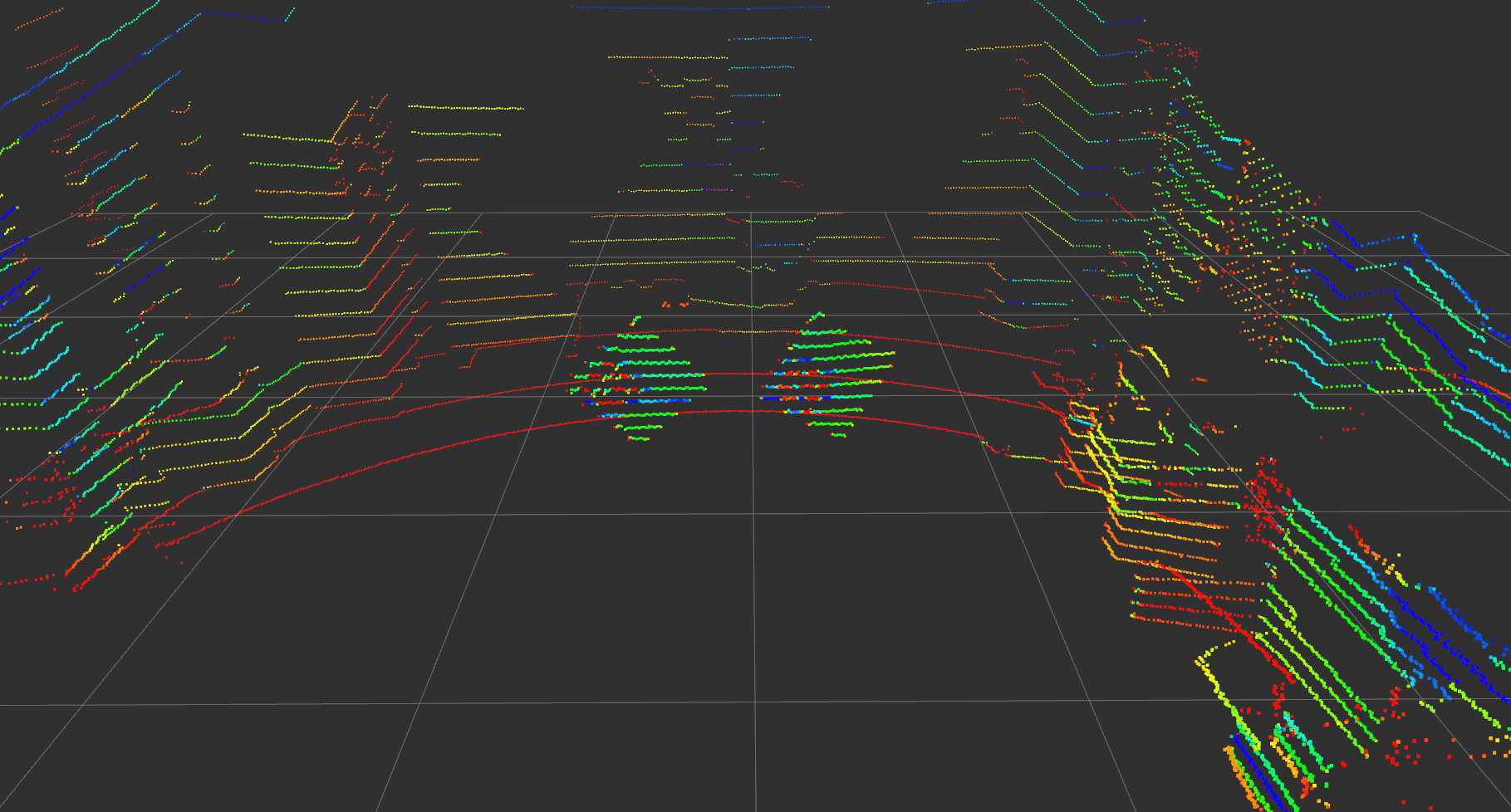

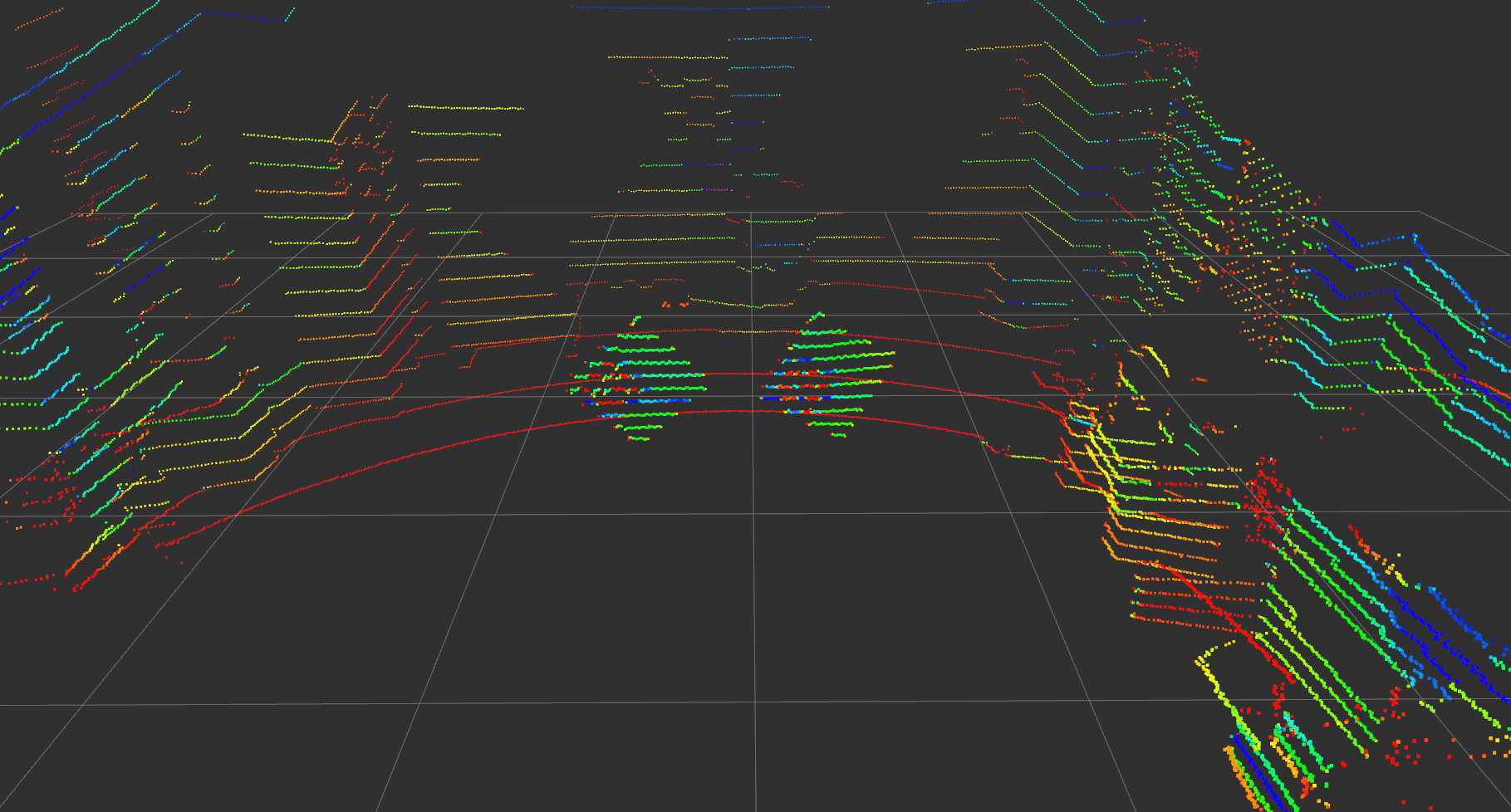

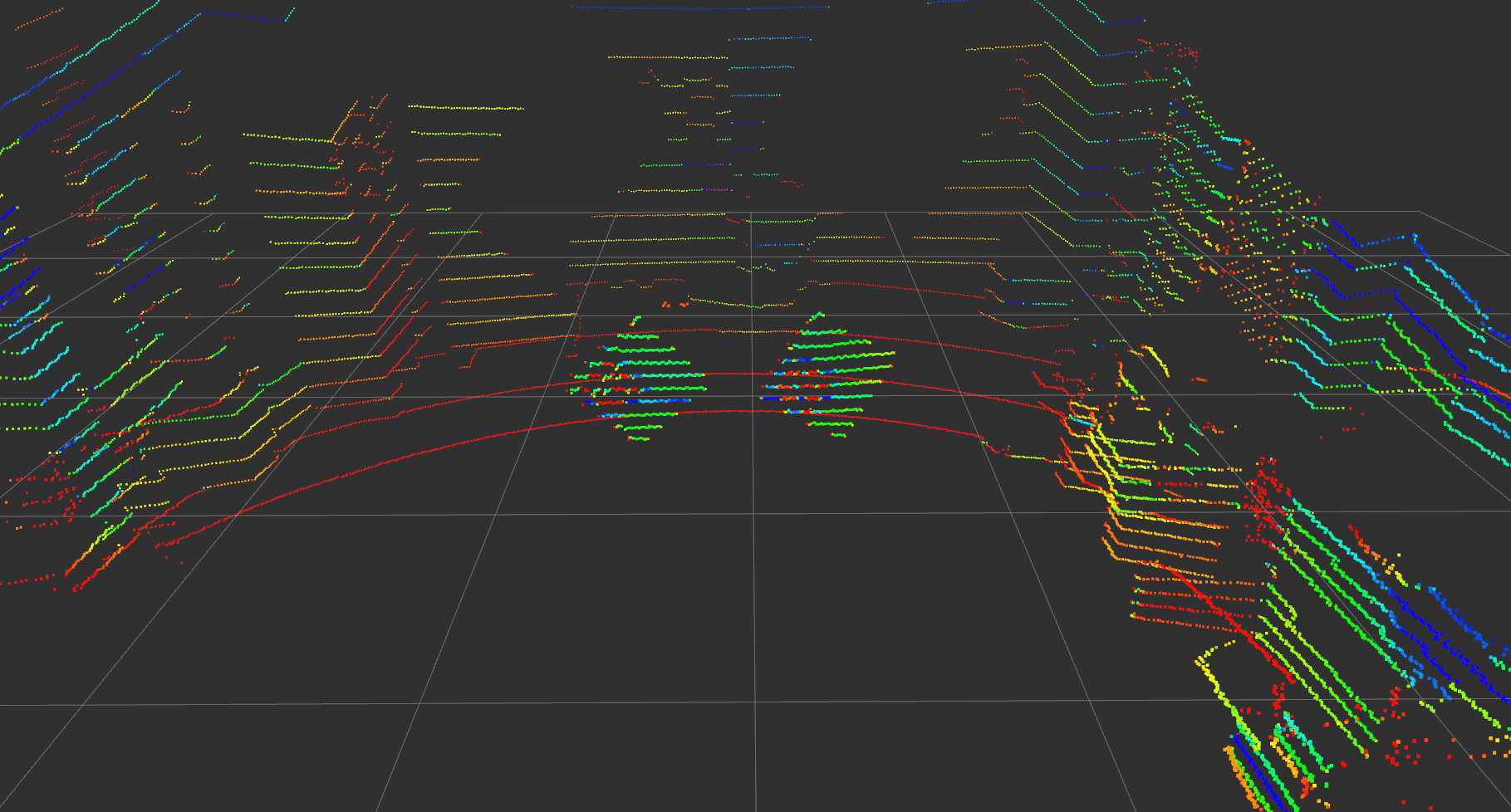

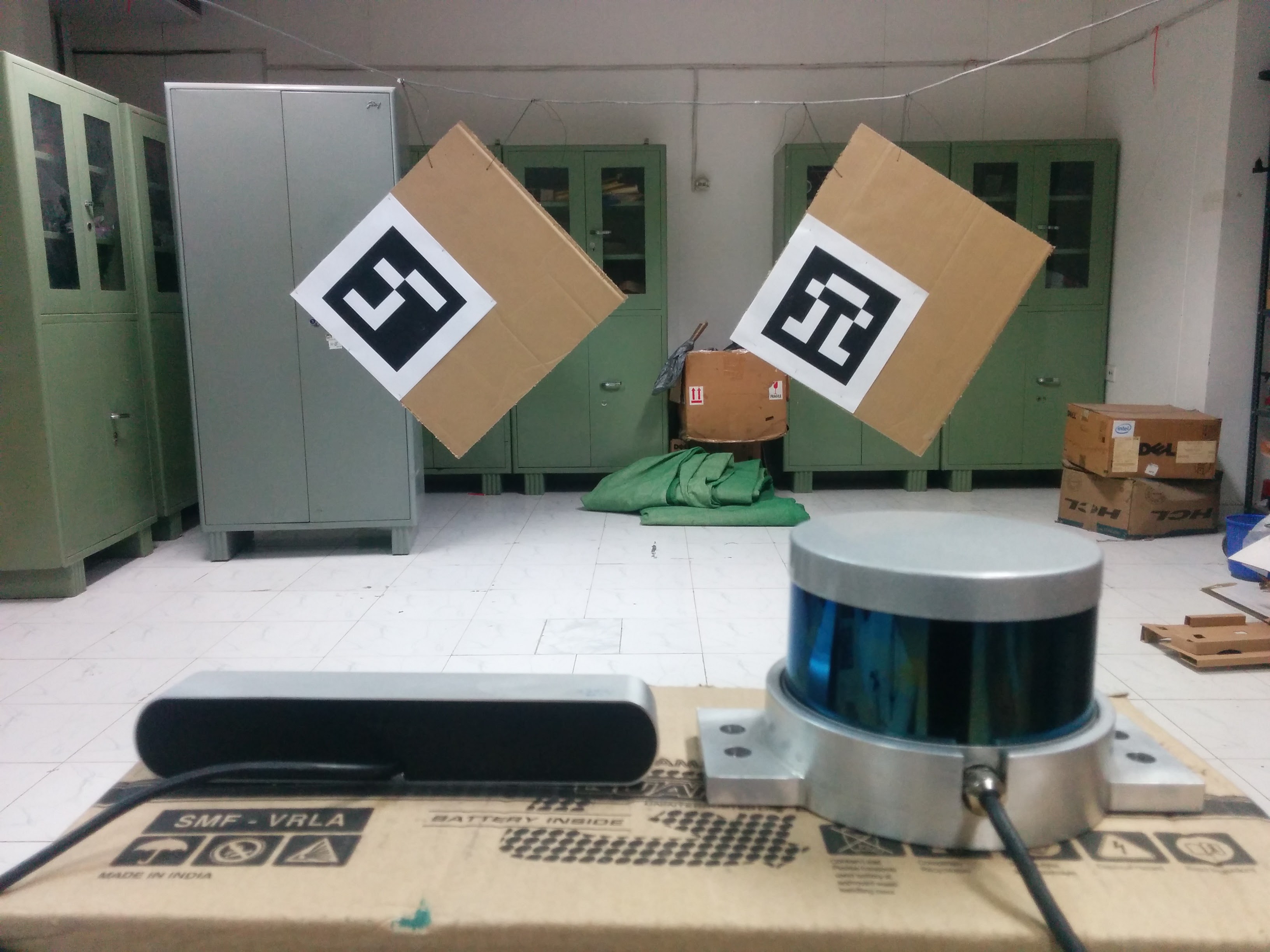

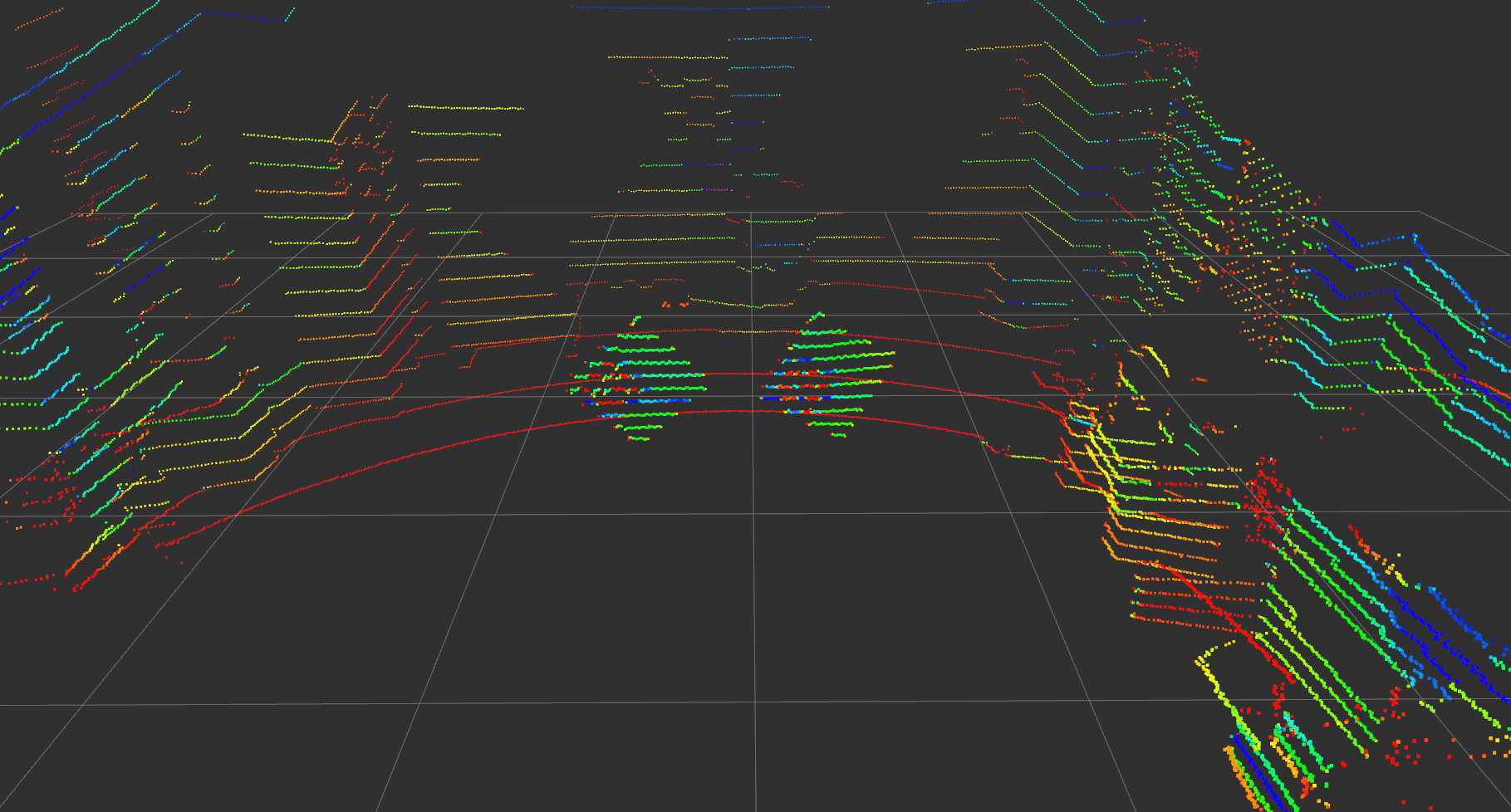

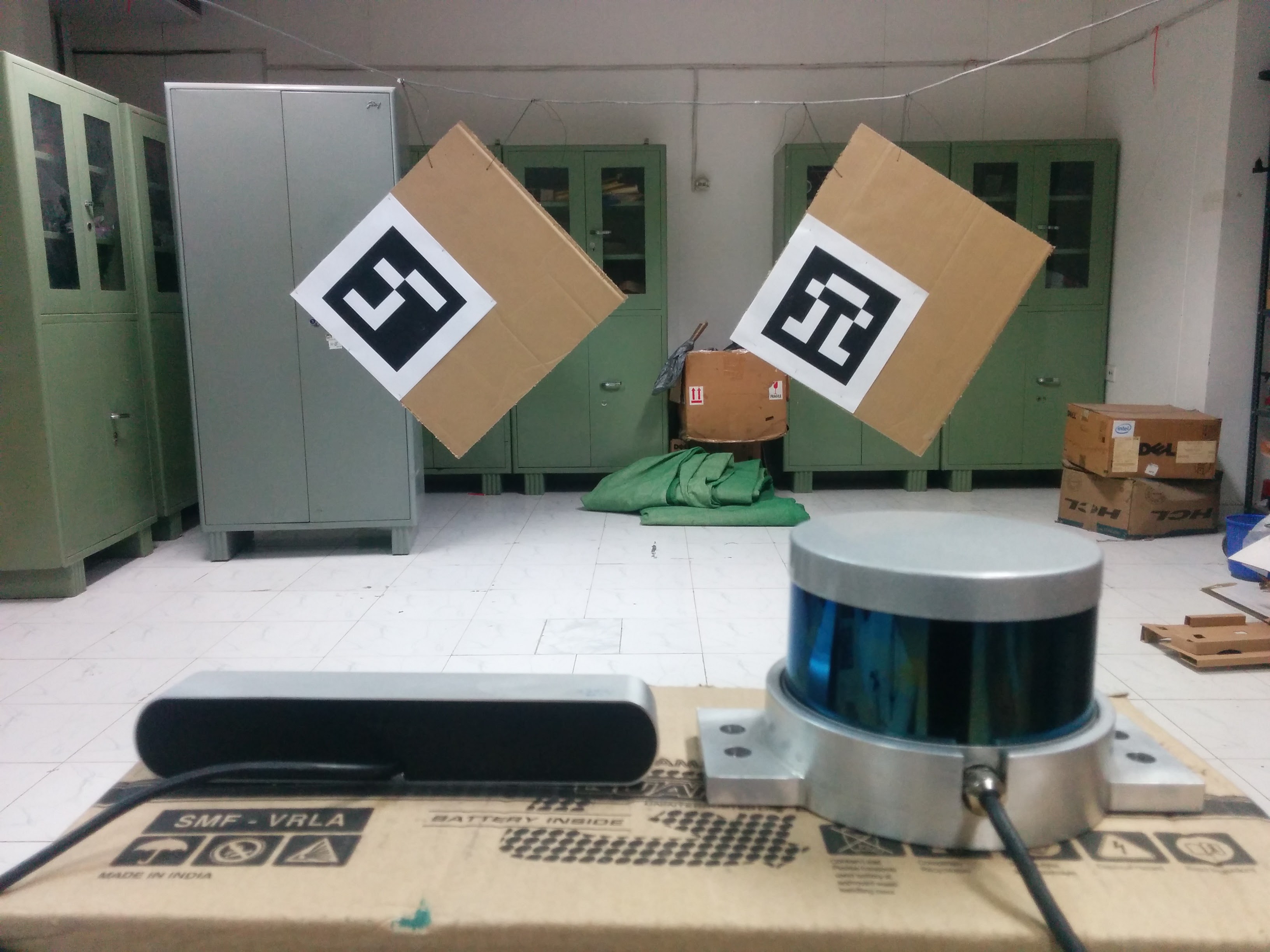

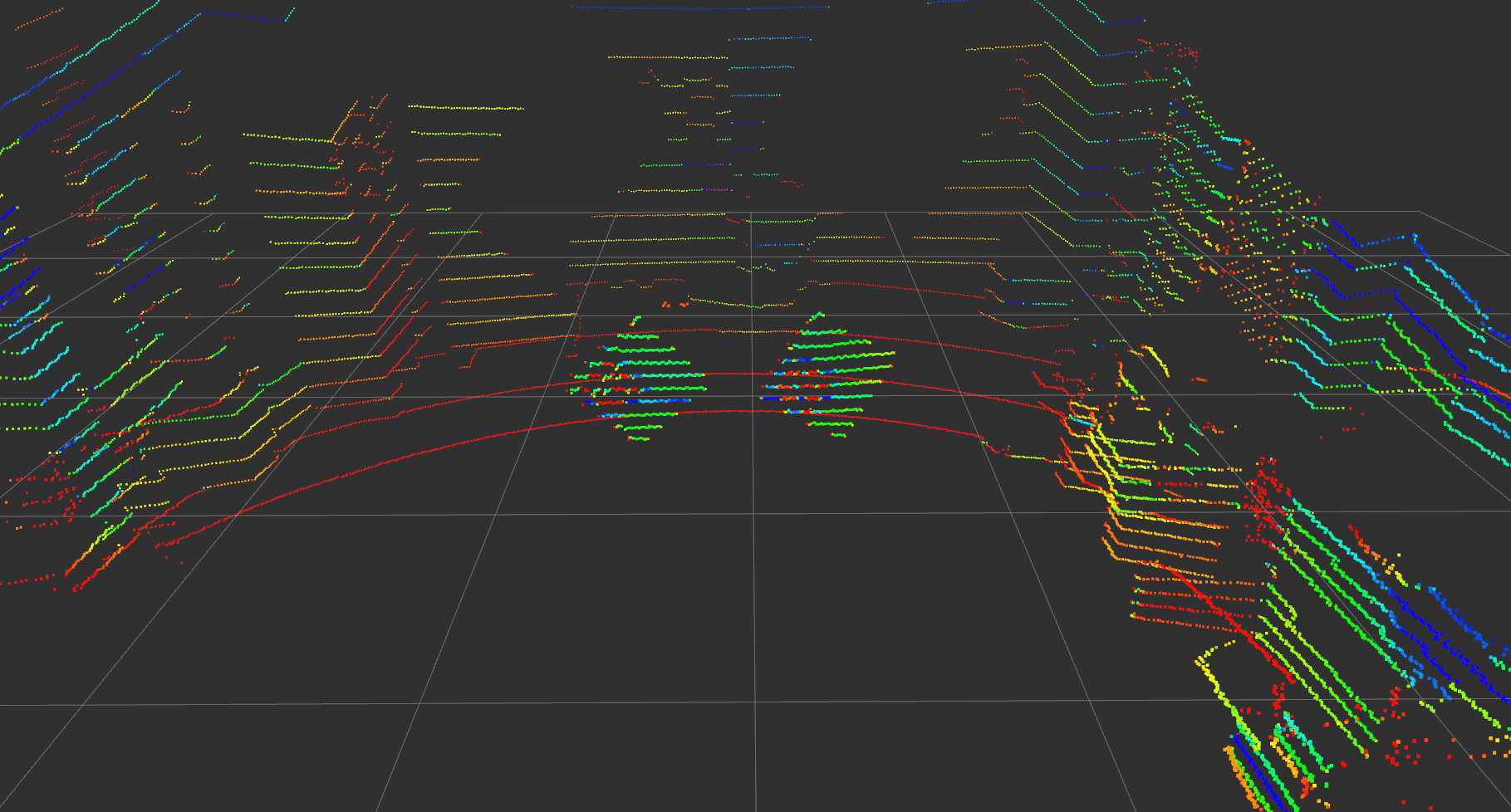

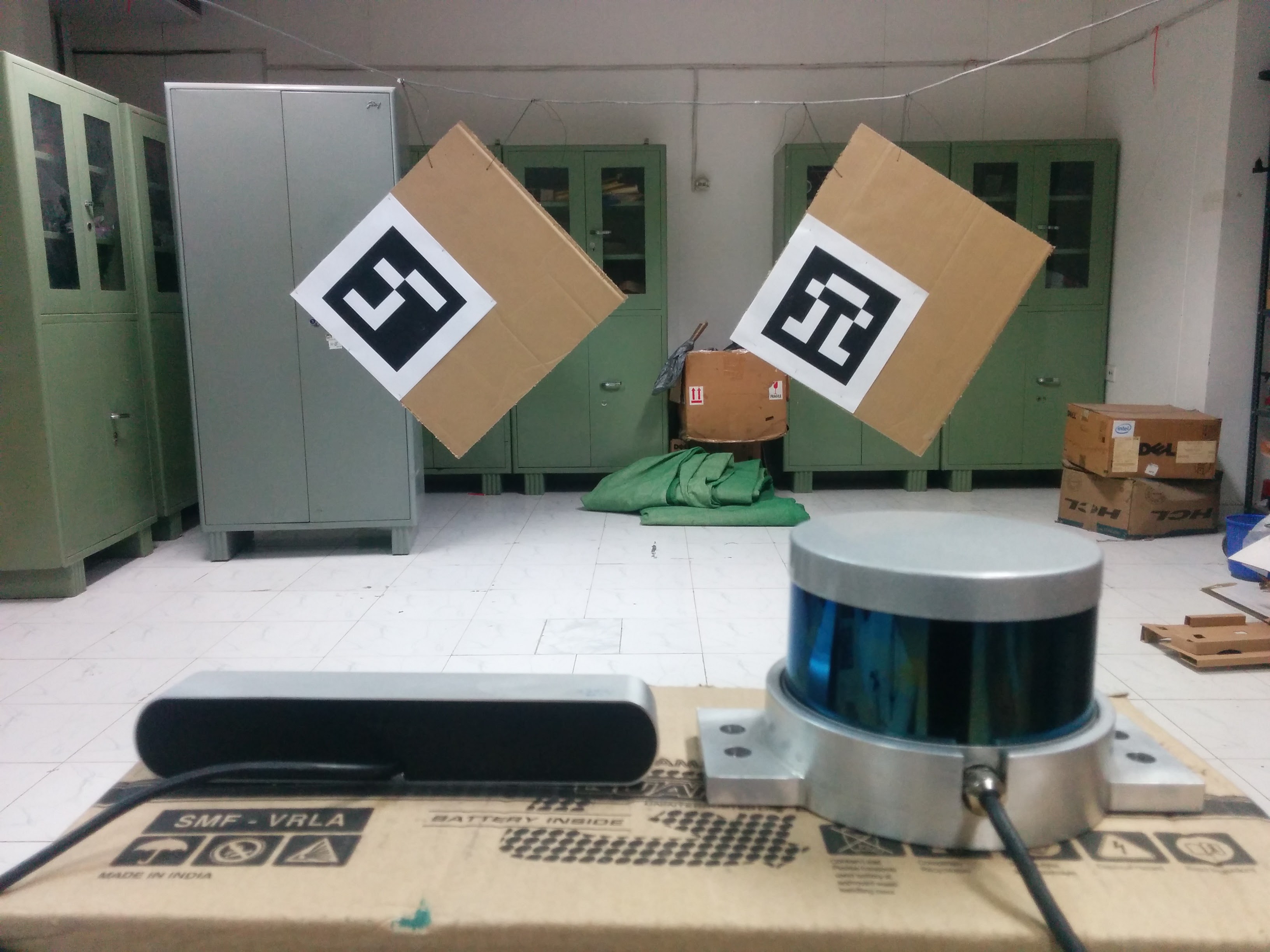

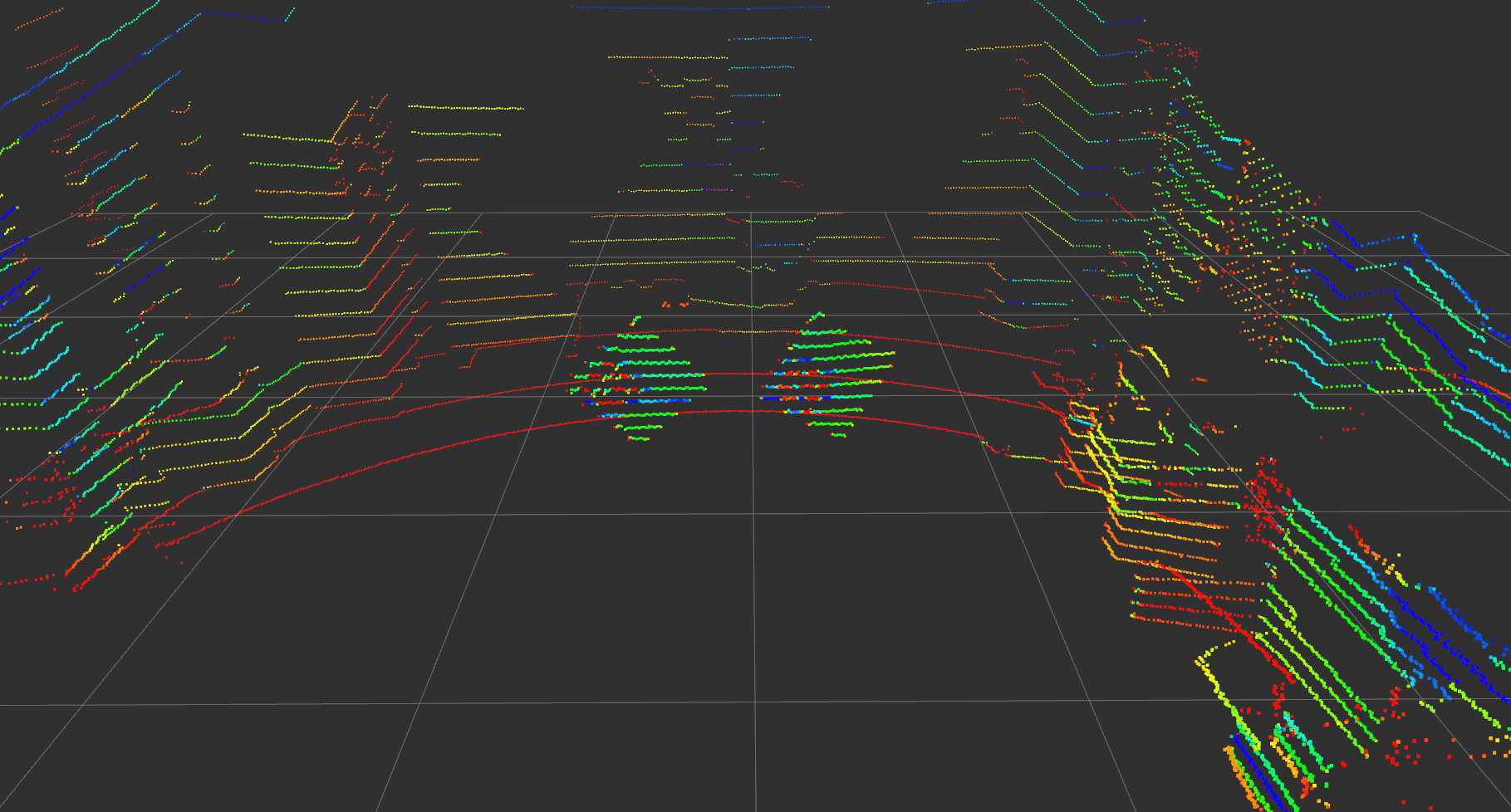

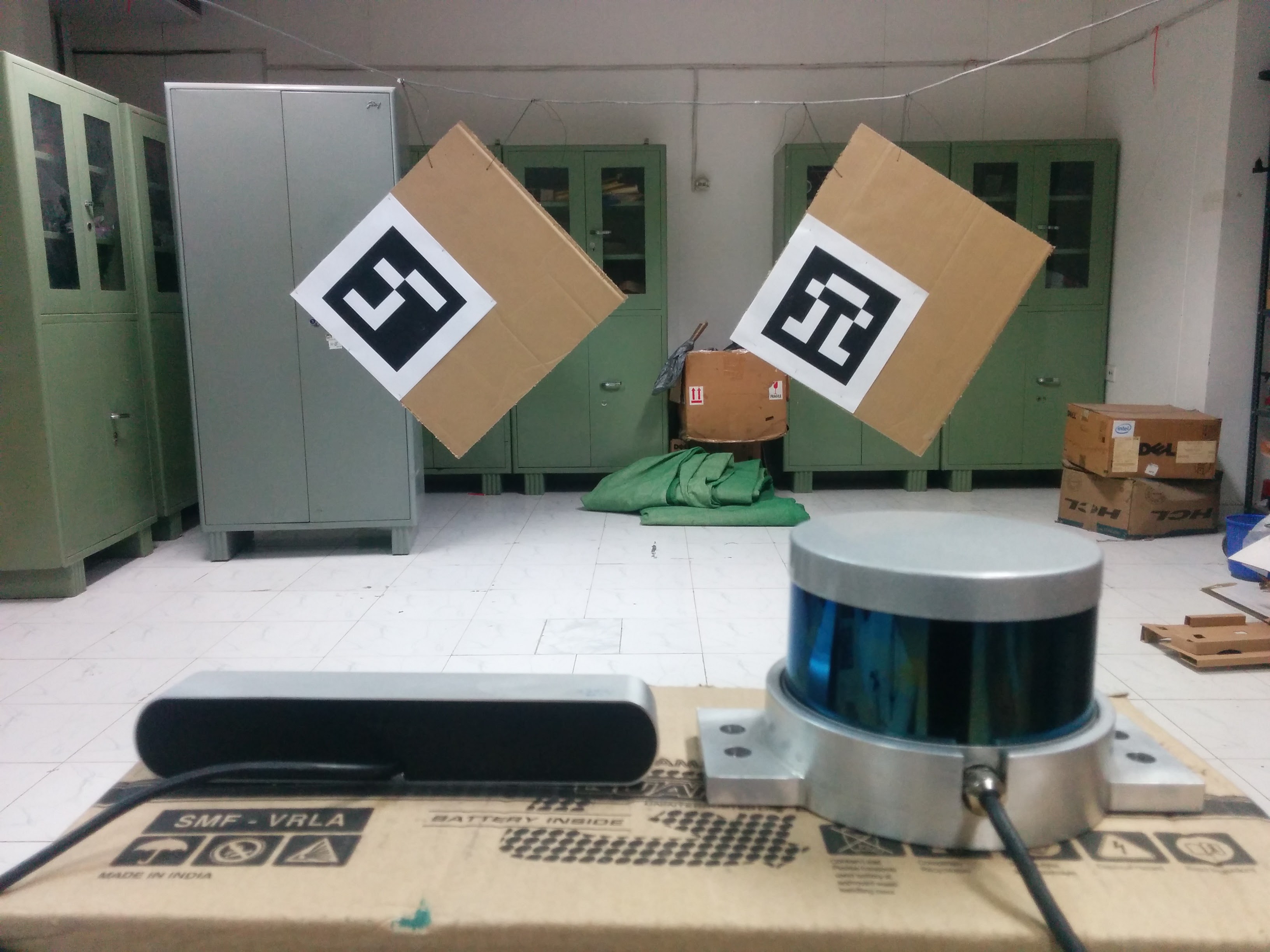

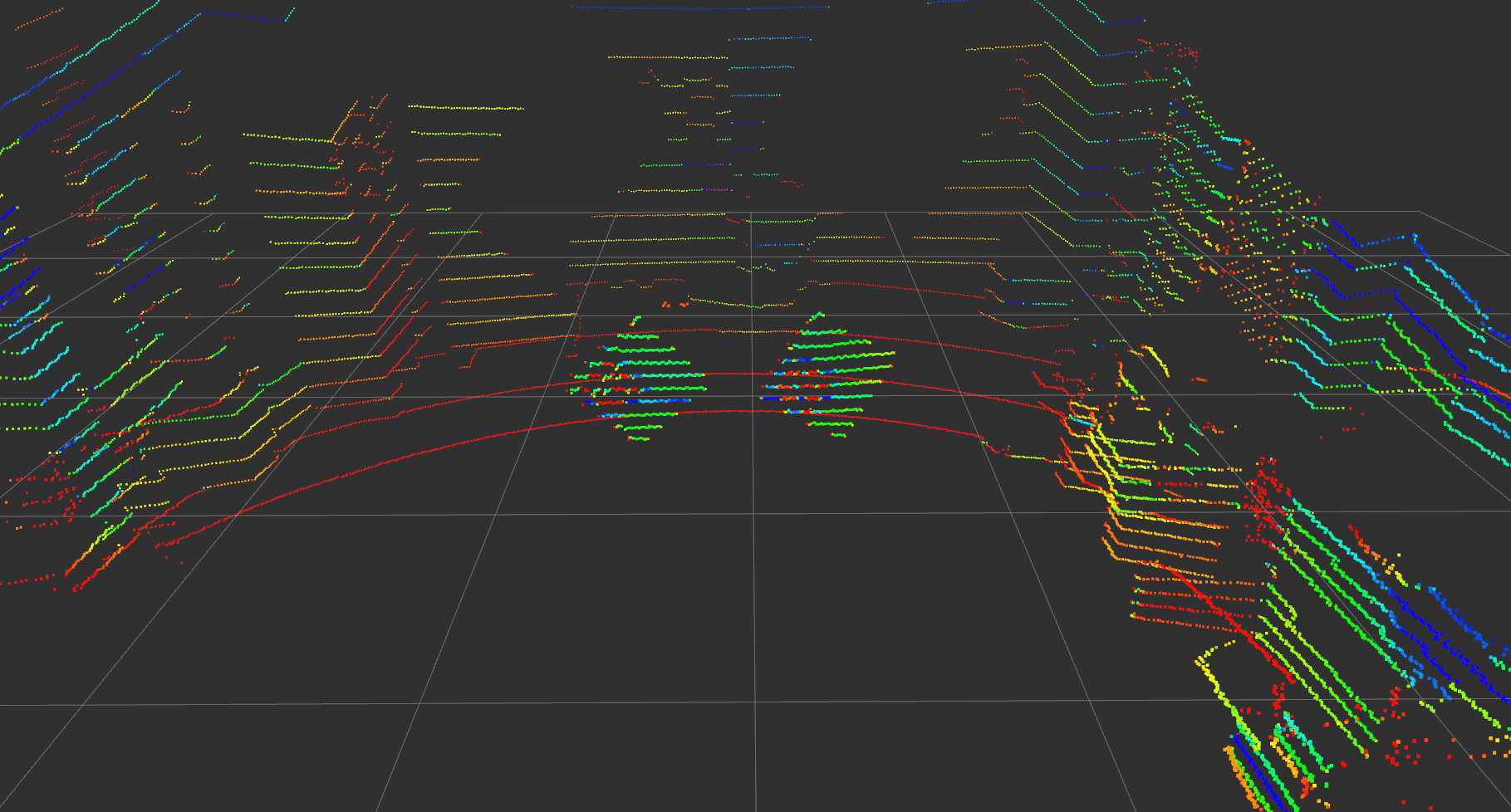

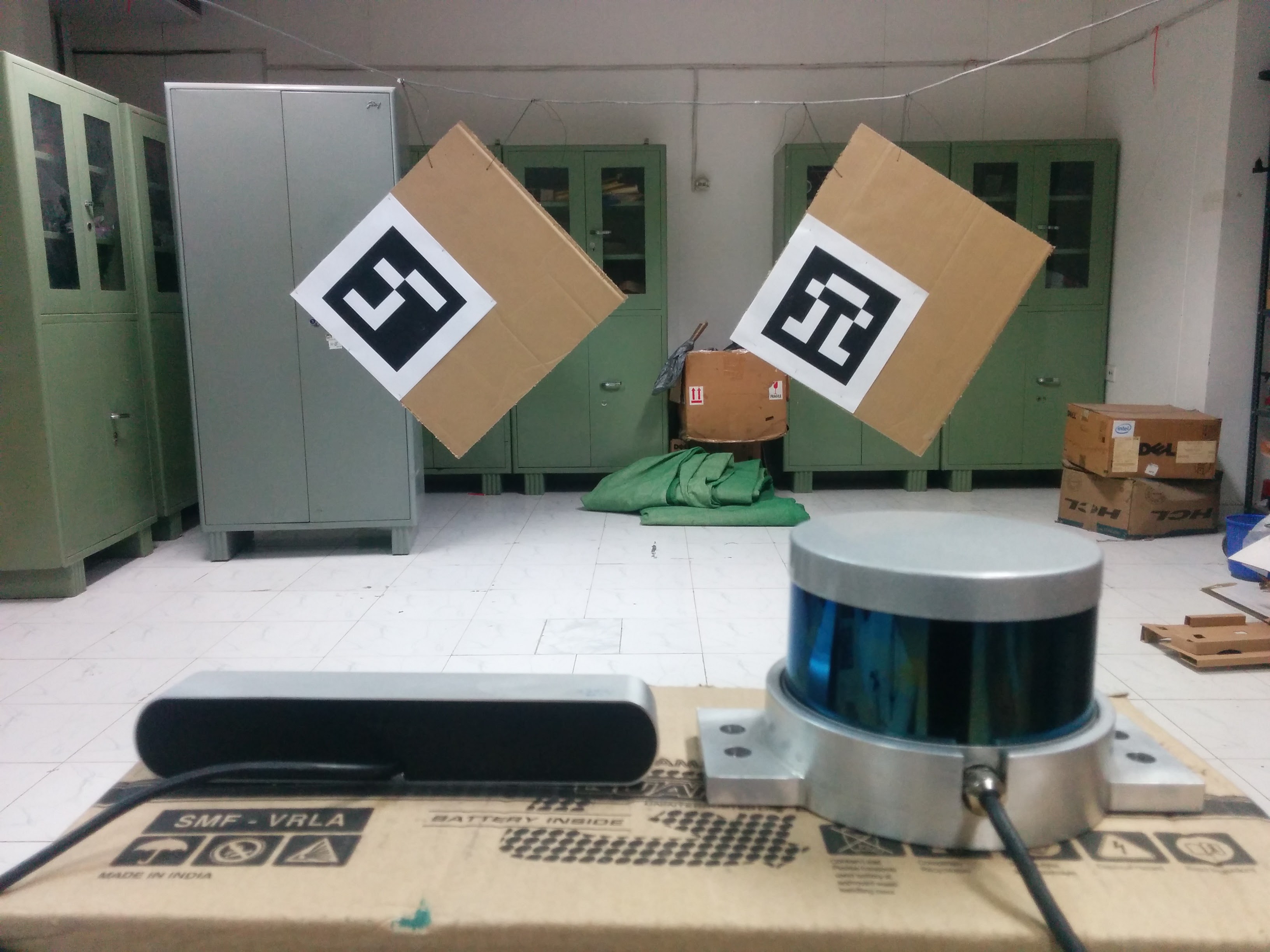

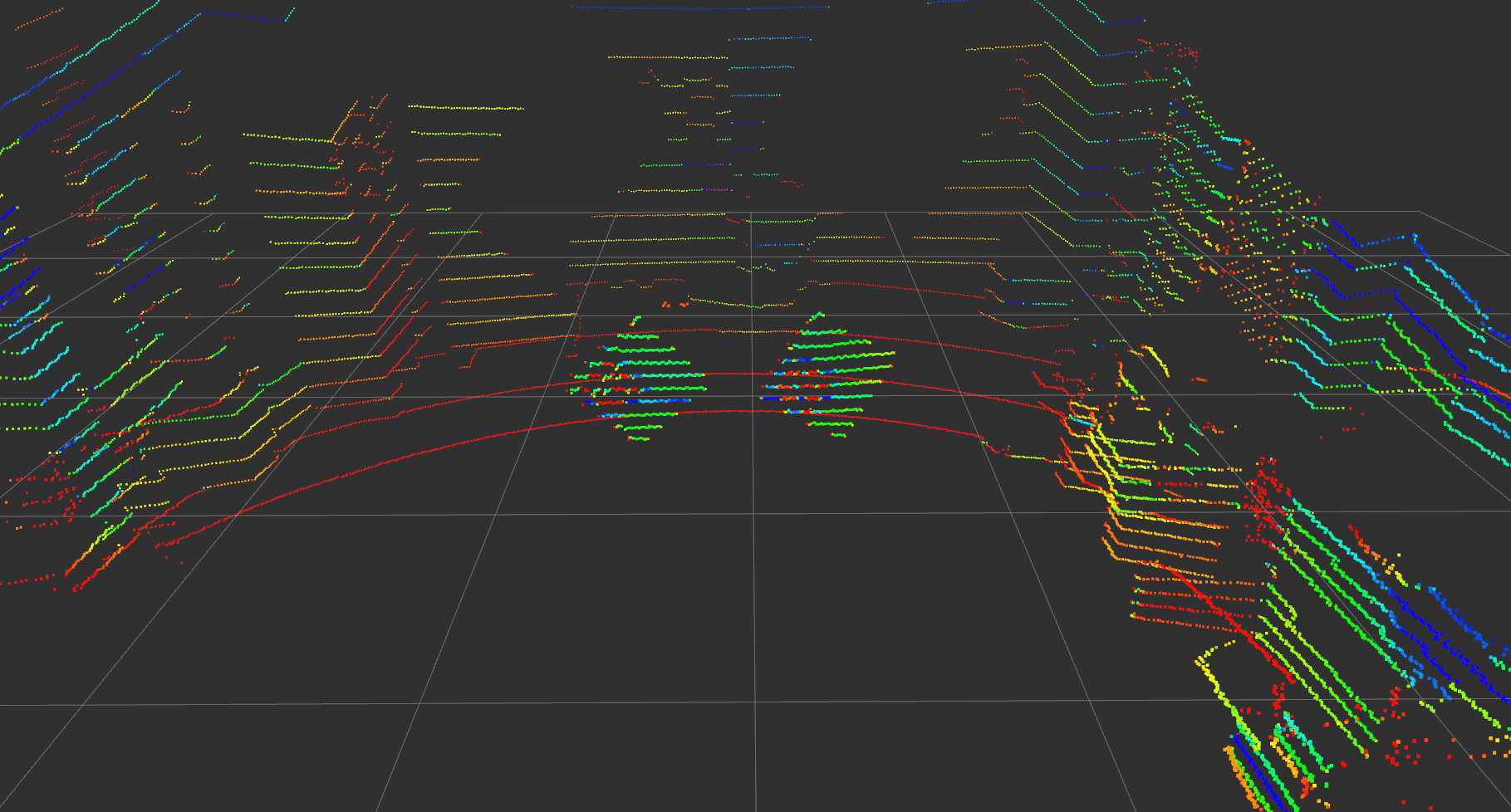

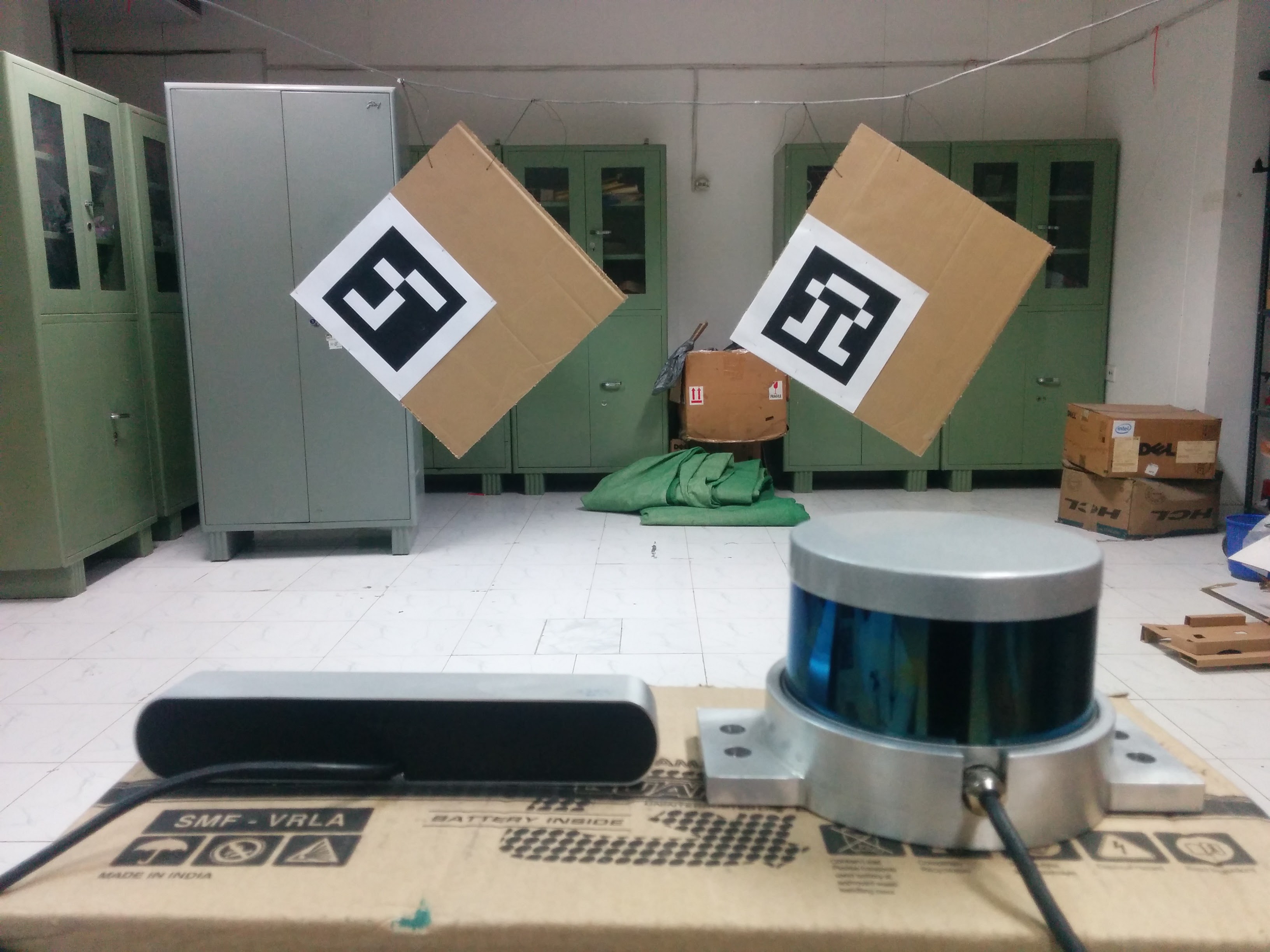

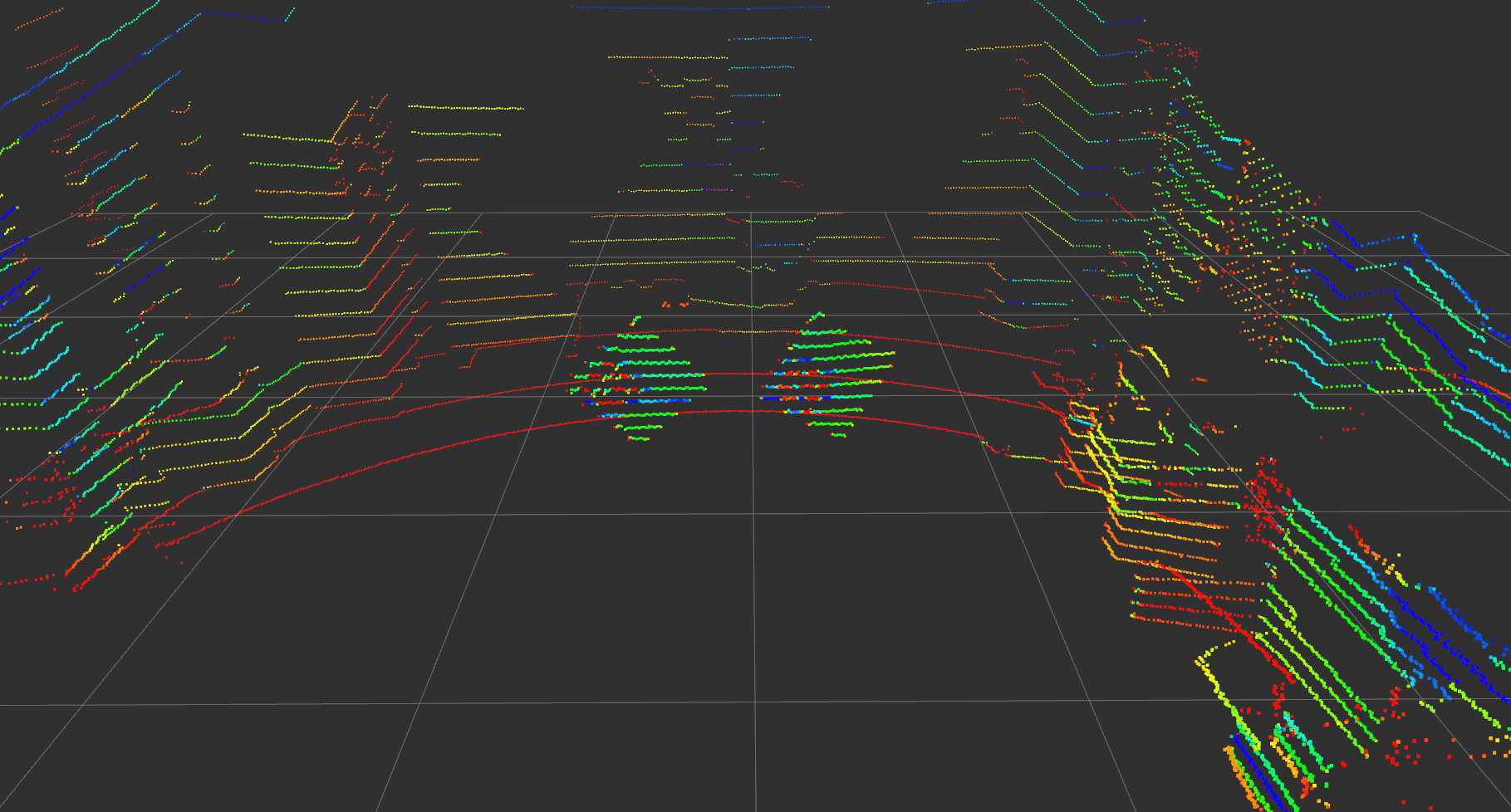

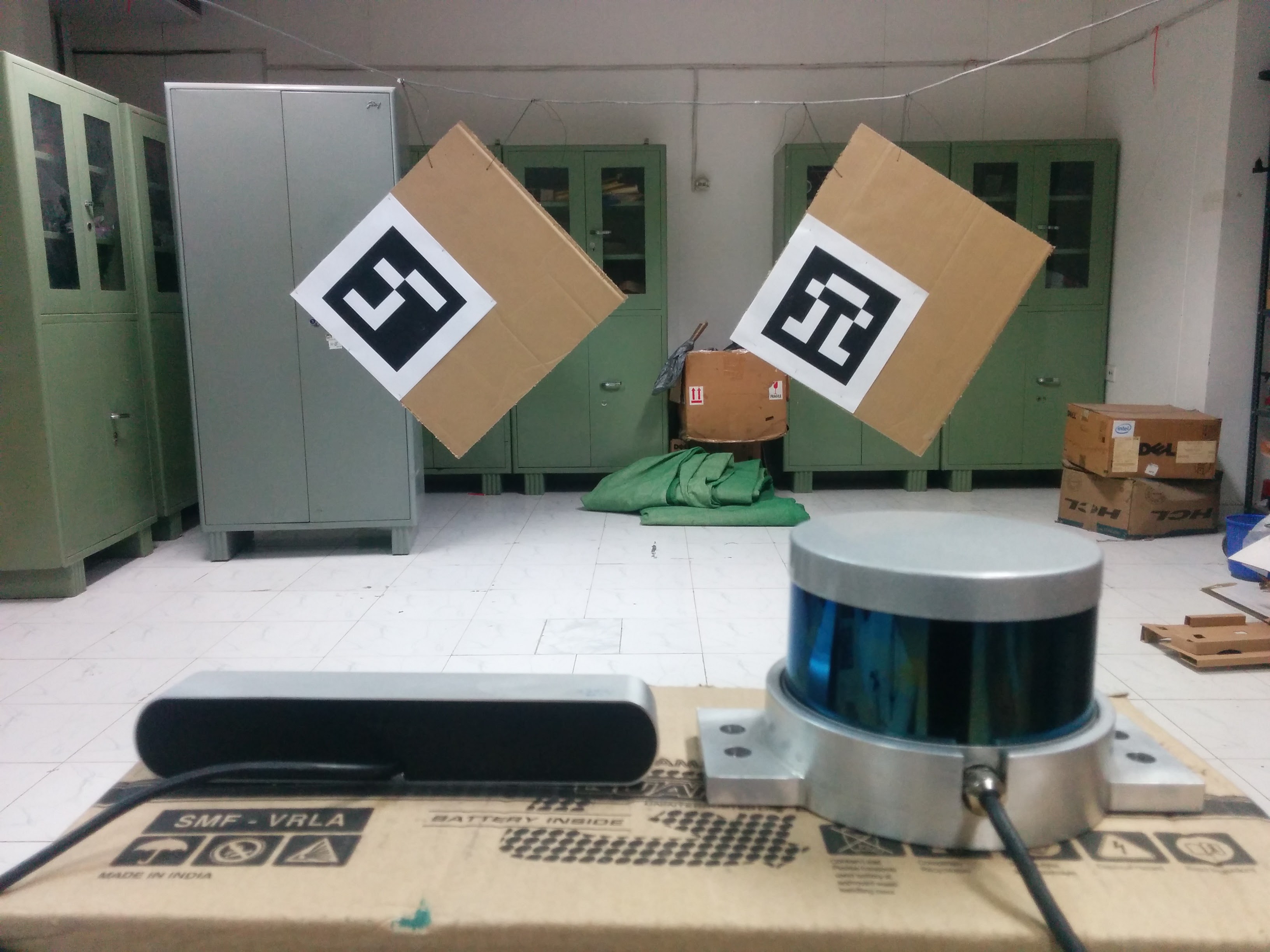

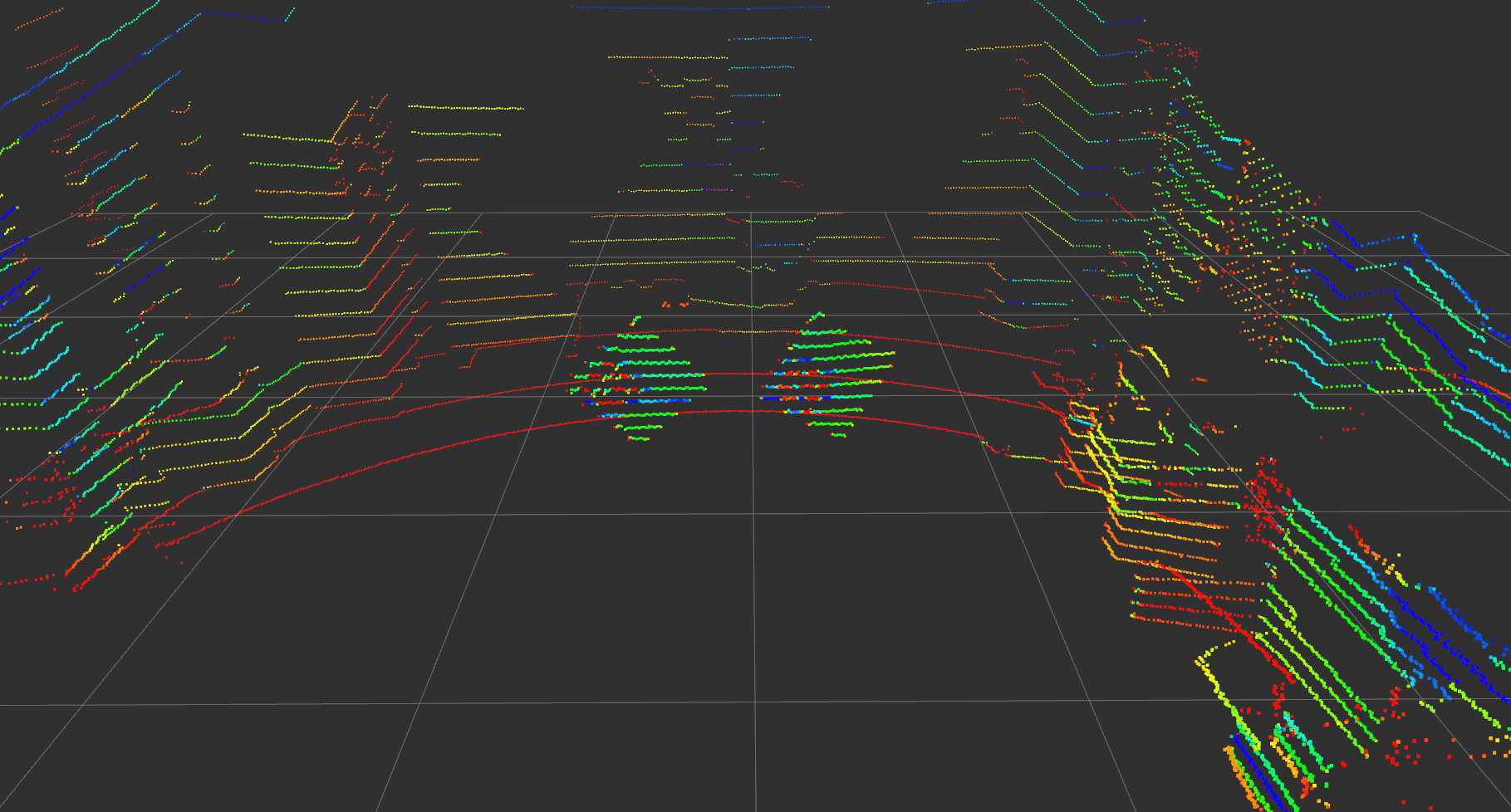

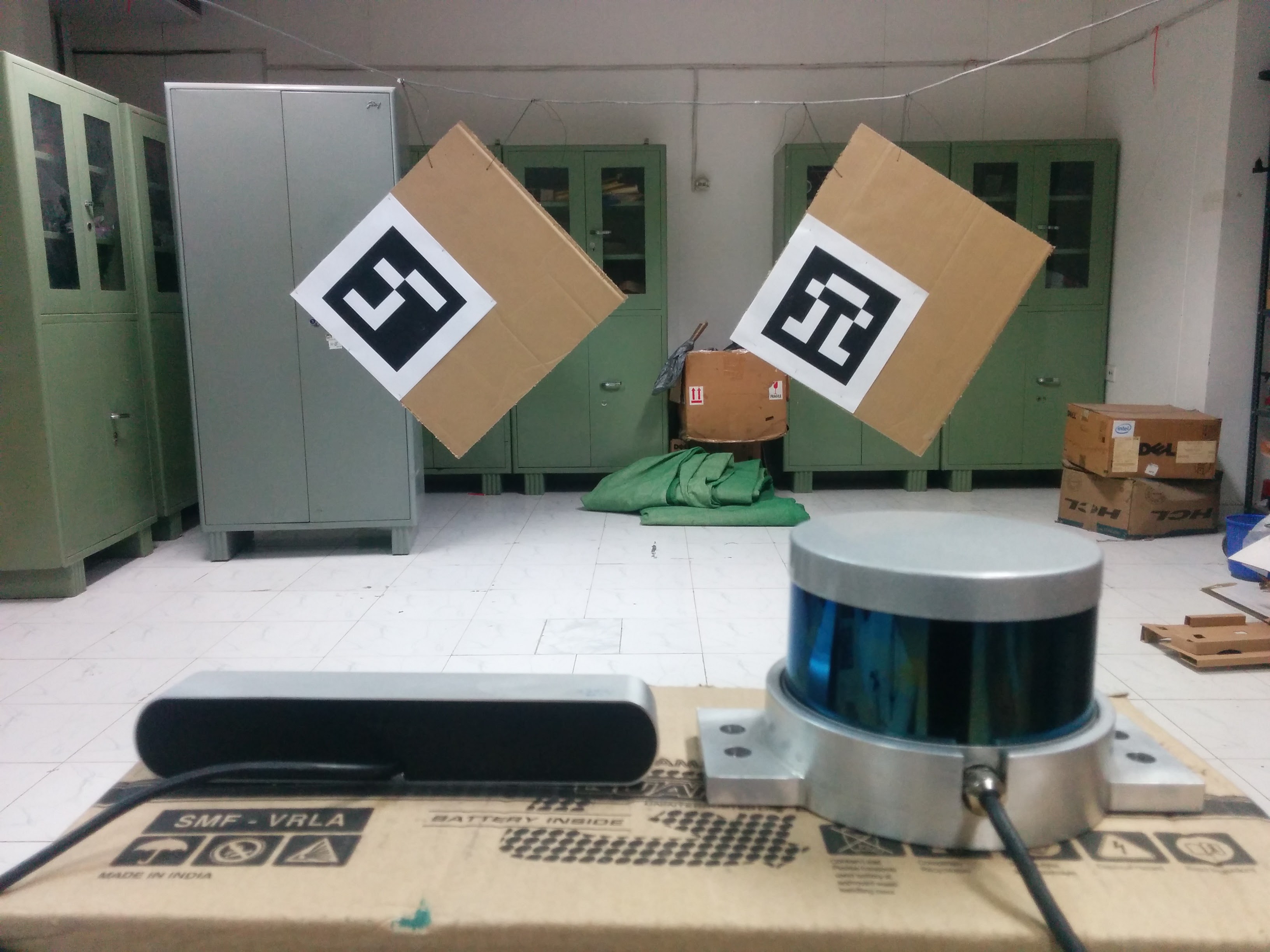

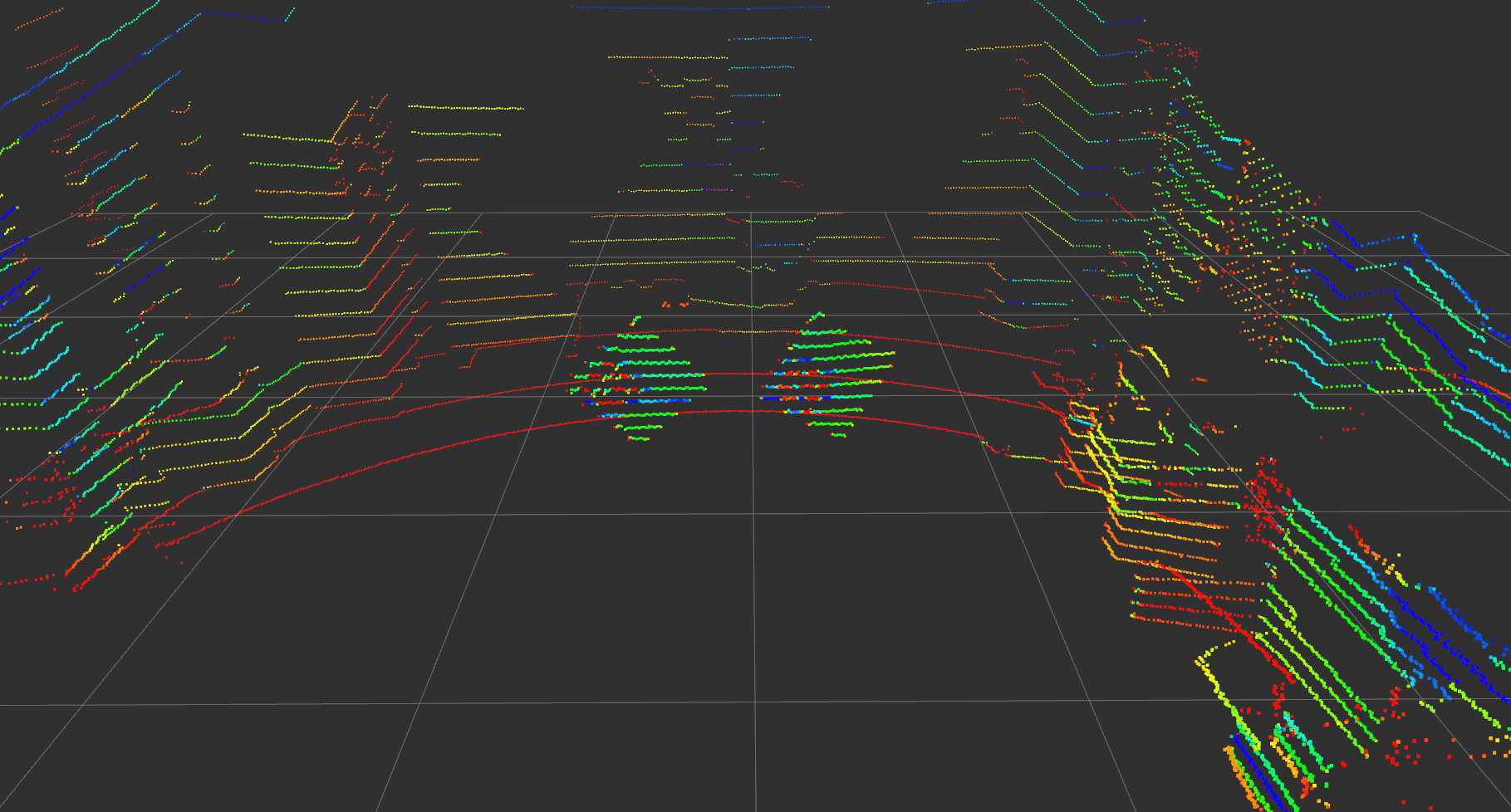

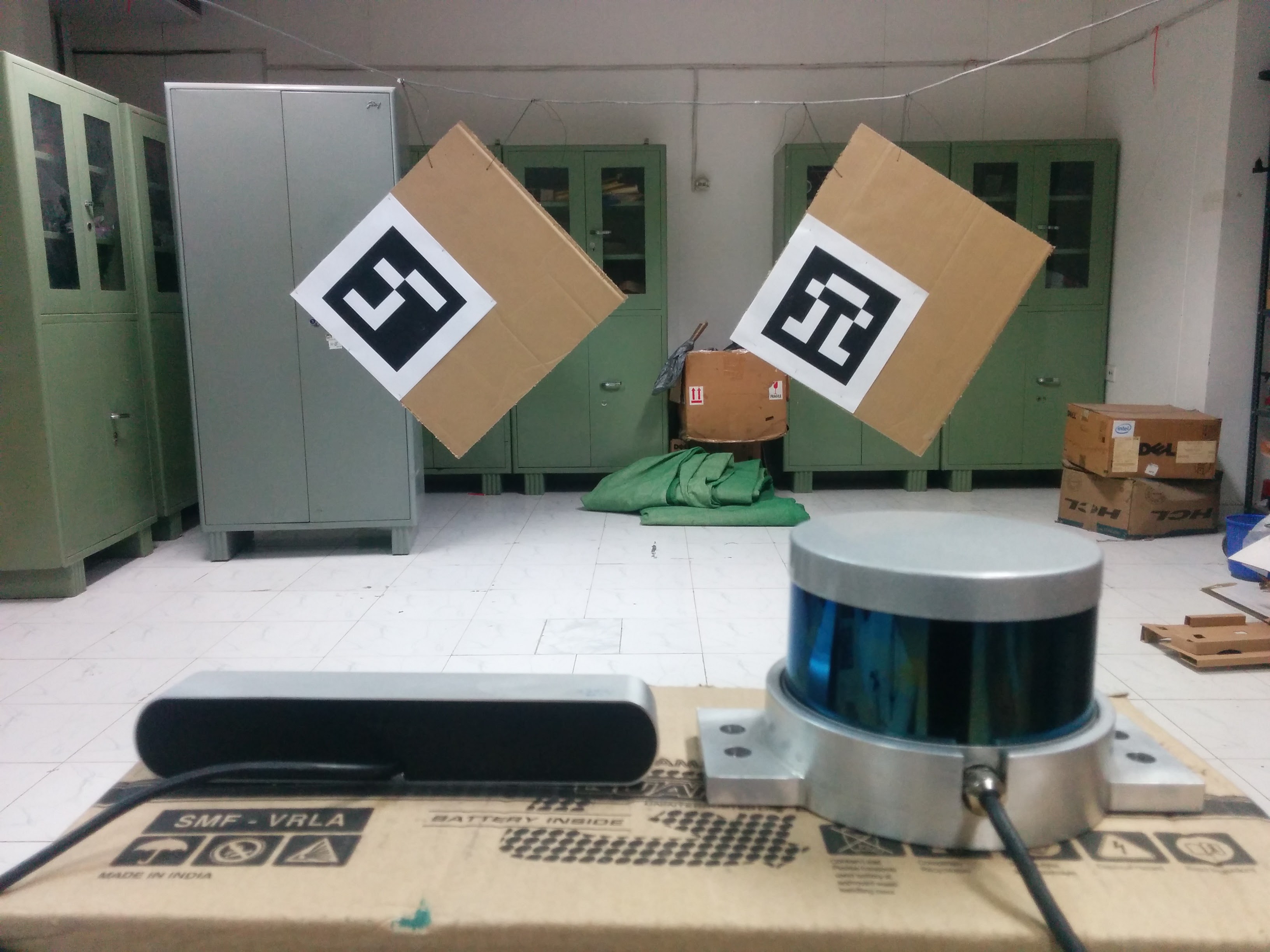

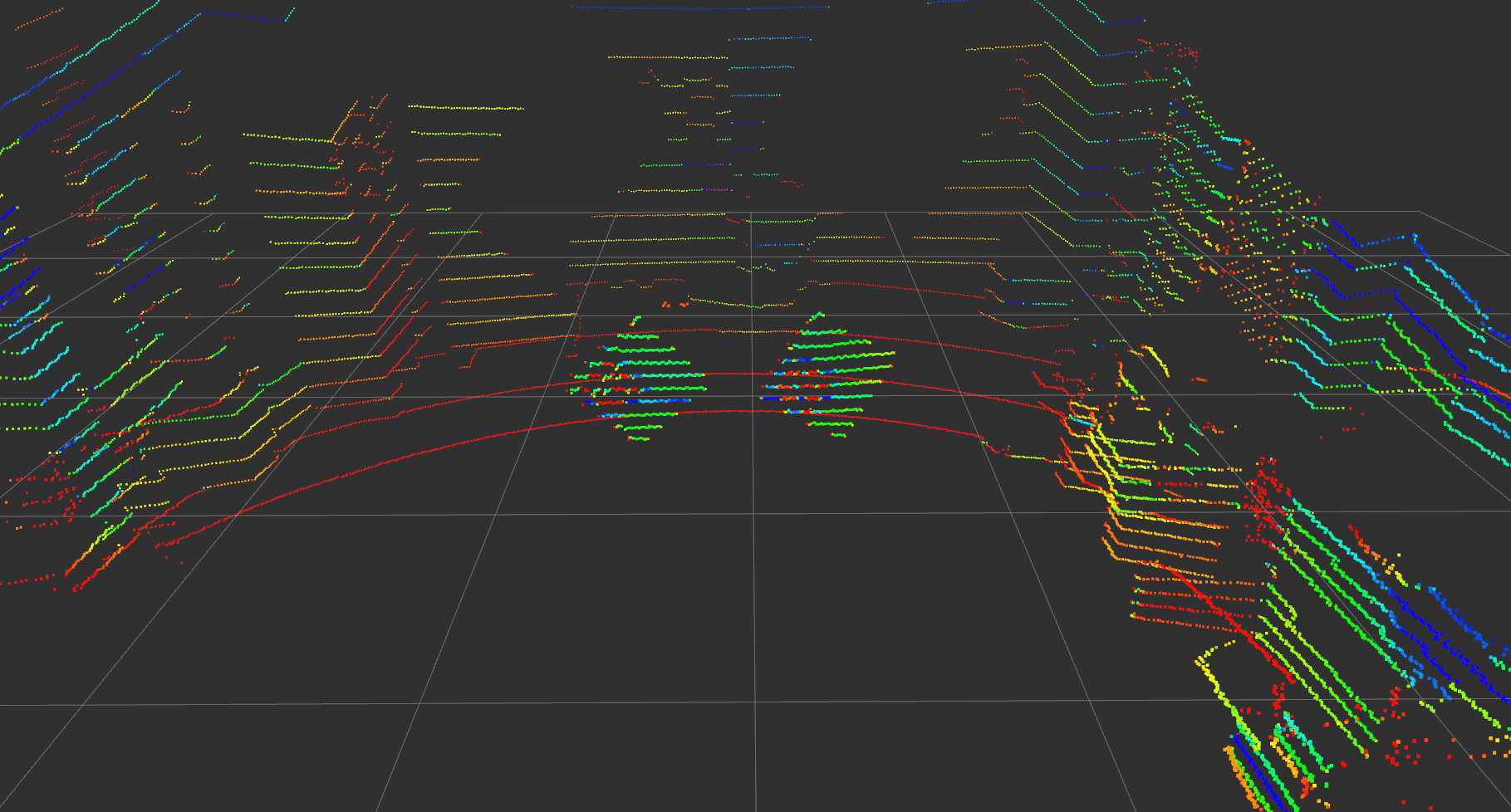

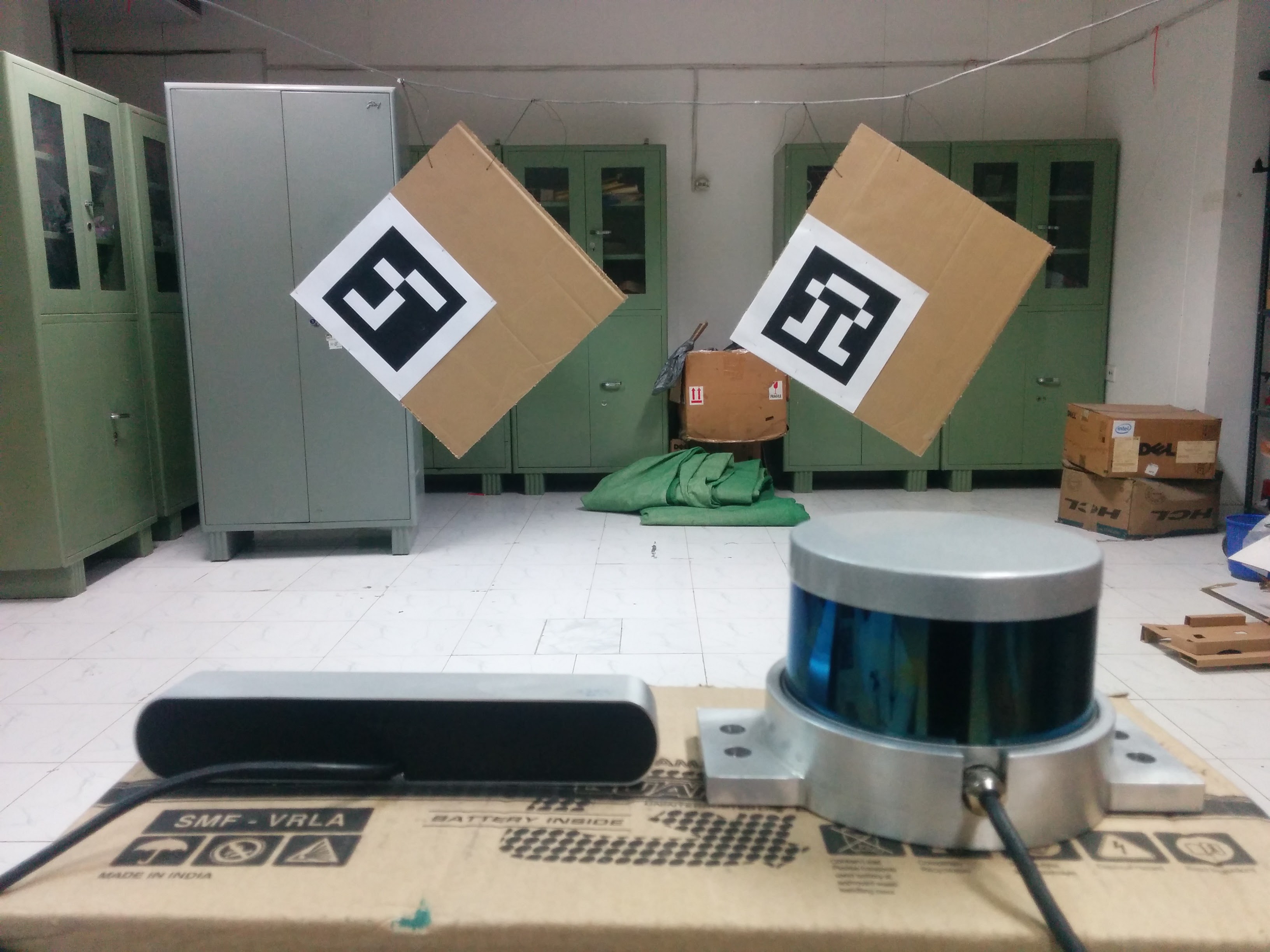

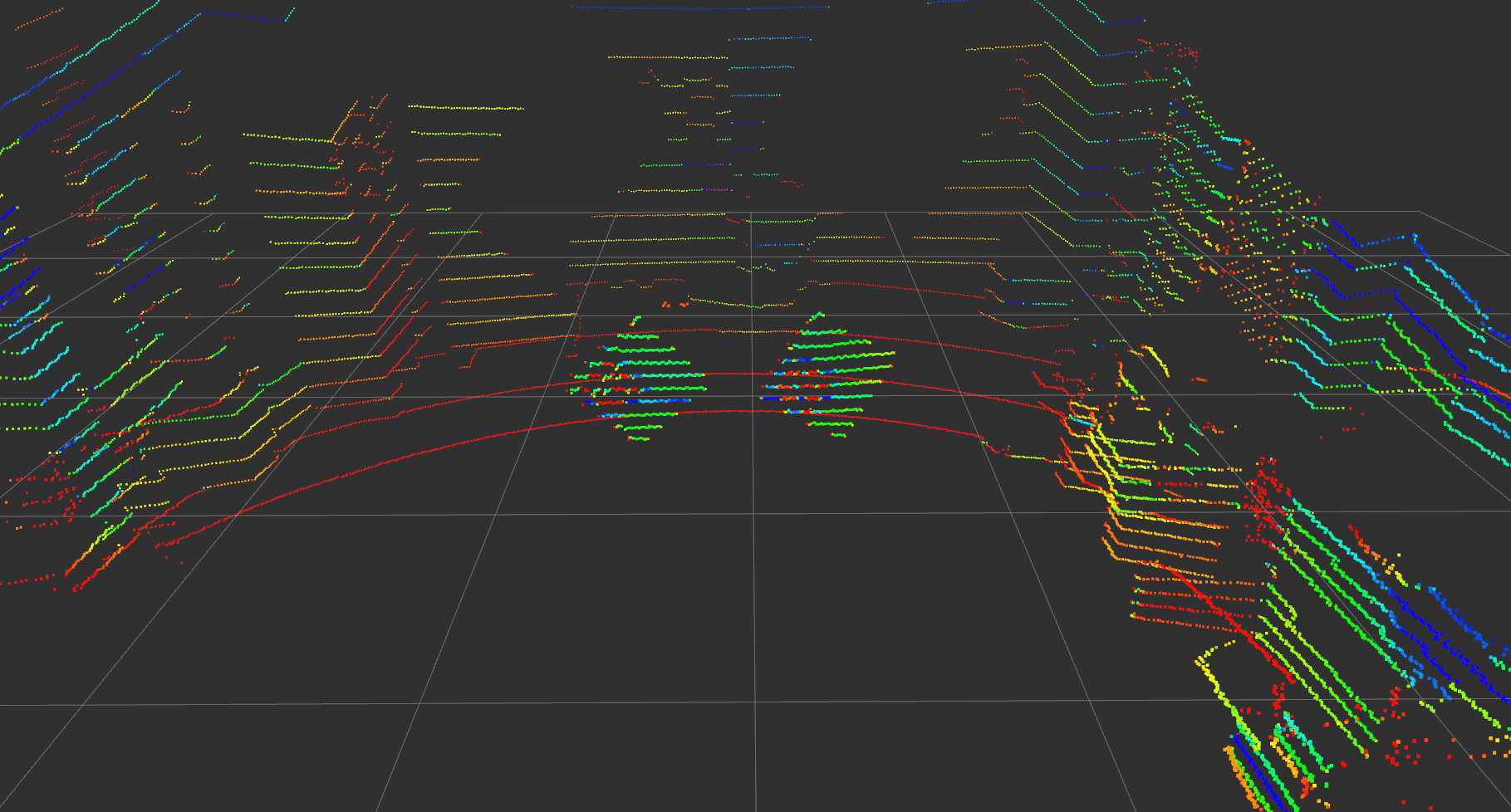

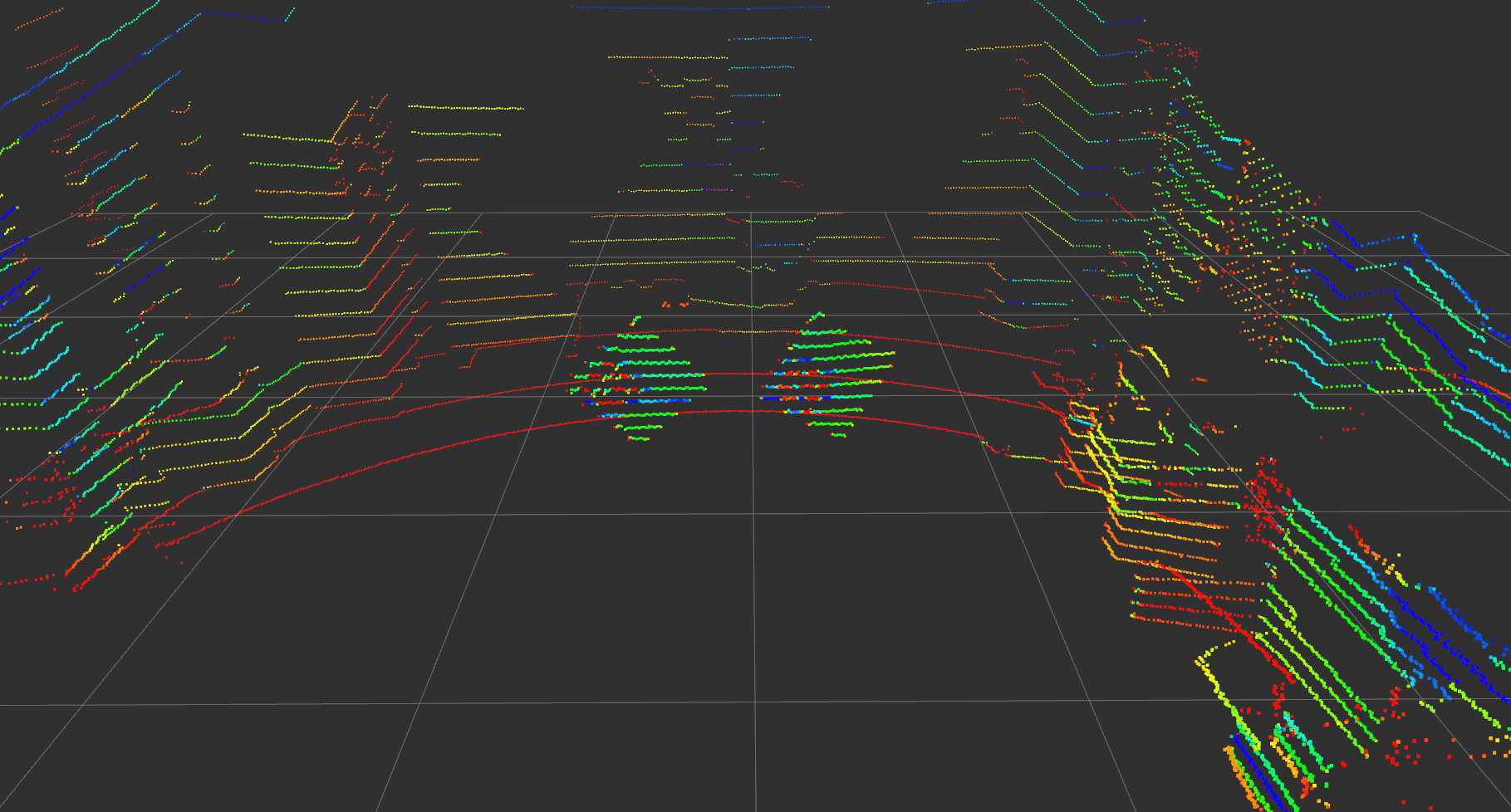

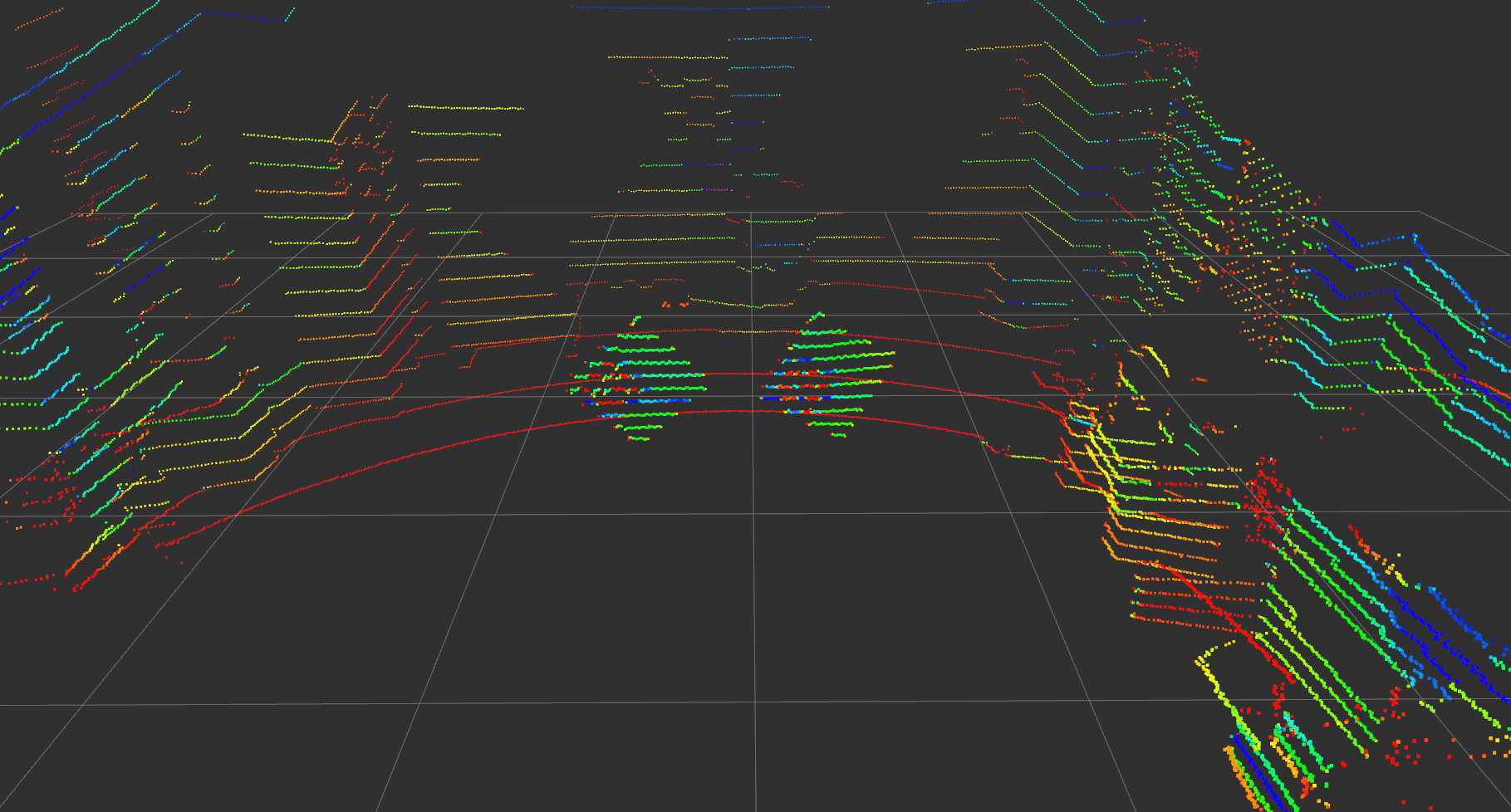

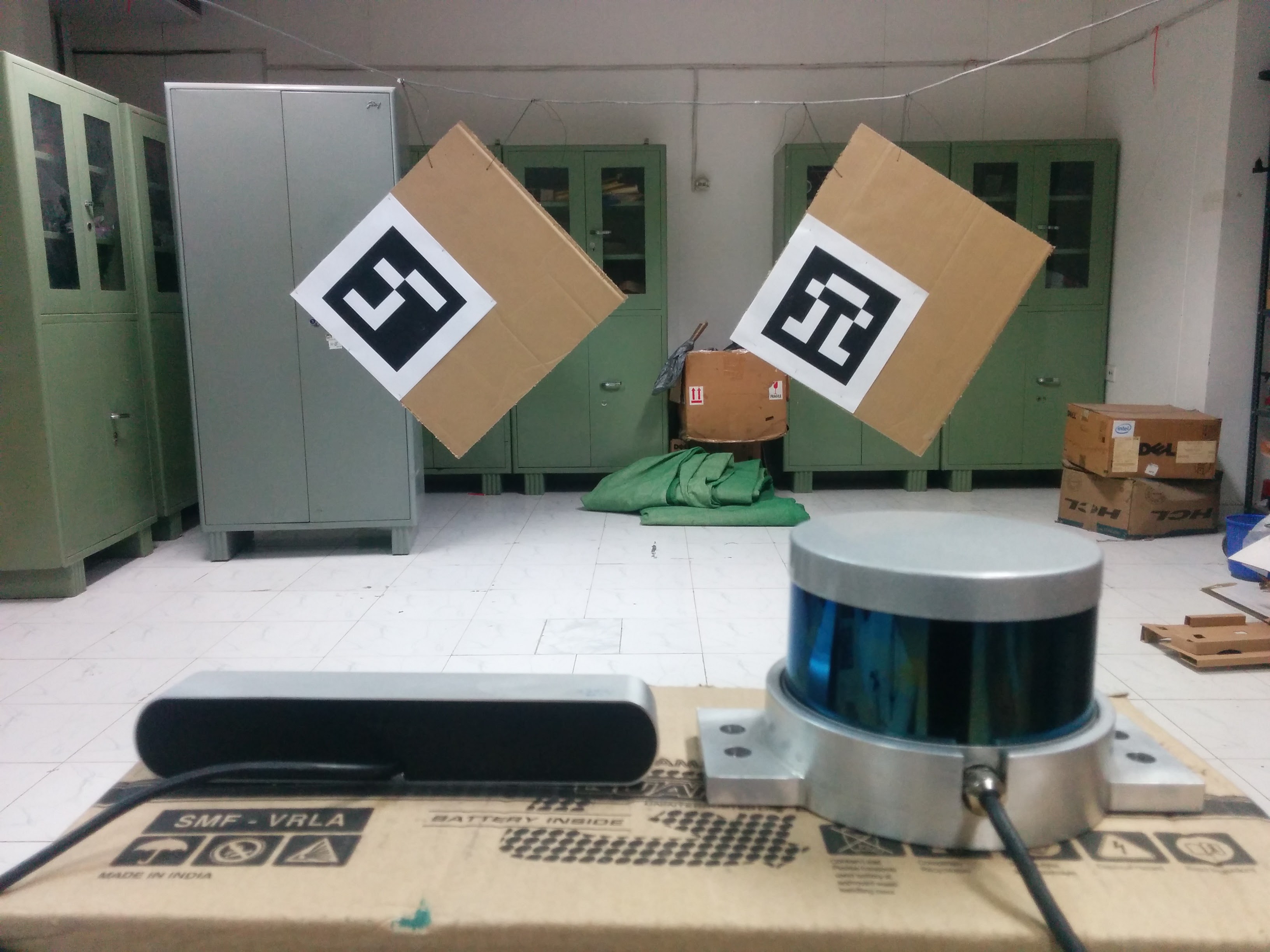

The package finds a rotation and a translation that transform all points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras, both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We demonstrate the accuracy of the proposed pipeline by fusing point clouds from multiple cameras kept in various positions, with near-perfect alignment. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).
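To illustrate what the resulting rotation and translation are used for, here is a minimal sketch in plain Python that maps points from the LiDAR frame to the camera frame via p_cam = R * p_lidar + t. The function name `lidar_to_camera` and the numeric values of `R` and `t` are made up for illustration; they are not part of the package and not real calibration output.

```python
from typing import List, Sequence

def lidar_to_camera(points: Sequence[Sequence[float]],
                    rotation: Sequence[Sequence[float]],
                    translation: Sequence[float]) -> List[List[float]]:
    """Apply p_cam = R * p_lidar + t to every 3D point."""
    transformed = []
    for p in points:
        transformed.append([
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        ])
    return transformed

# Hypothetical extrinsics: a 90 degree rotation about the z-axis
# followed by a small offset. Real values come from the calibration run.
R = [[0.0, -1.0, 0.0],
     [1.0,  0.0, 0.0],
     [0.0,  0.0, 1.0]]
t = [0.5, 0.0, -0.25]

lidar_points = [[1.0, 0.0, 0.0], [0.0, 2.0, 1.0]]
camera_points = lidar_to_camera(lidar_points, R, t)
print(camera_points)
```

The same operation applies to fusing point clouds from several cameras: once each camera is calibrated against the same LiDAR, their clouds can be expressed in a common frame by composing these transforms.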

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach help your research.

@article{2017arXiv170509785D,
  author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.},
  title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",
  journal = {ArXiv e-prints},
  archivePrefix = "arXiv",
  eprint = {1705.09785},
  primaryClass = "cs.RO",
  keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},
  year = 2017,
  month = may
}

Contents

- Setup and Installation :hammer_and_wrench:
- Contributing :hugs:
- Getting Started :zap:
- Usage :beginner:
- Results and point cloud fusion using lidar_camera_calibration :checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu distribution on the Wiki.

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project, consider submitting a pull request. That way, future users can find this package as useful as you did. Here is a non-exhaustive list of features that can be a good starting point:

- Iterative process with weighted average over multiple runs
- Passing workflows for Kinetic, Melodic and Noetic
- Hesai and Velodyne LiDAR options (see Getting Started)
- Integrate LiDAR hardware from other manufacturers
- Automate process of marking line-segments
- GitHub workflow with functional test on dummy data
- Support for upcoming Linux distros
- Support for running the package in ROS2
- Tests to improve the quality of the project

Getting Started

A couple of configuration files need to be specified in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720
-2.5 2.5
-4.0 4.0
0.0 2.5
0.05
2
0
611.651245 0.0 642.388357 0.0
0.0 688.443726 365.971718 0.0
0.0 0.0 1.0 0.0
1.57 -1.57 0.0
0

The file contains specifications about the following:

image_width image_height
x- x+
y- y+
z- z+
cloud_intensity_threshold
number_of_markers
use_camera_info_topic?

File truncated at 100 lines see the full file
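As a rough illustration of the layout above, the following sketch reads the example config_file.txt contents into named fields. Only the first seven lines are named, because the field list above is cut off after `use_camera_info_topic?`; the remaining lines are kept as raw numeric rows rather than guessed at. The function `parse_config` and the dictionary keys are hypothetical helpers, not part of the package.

```python
from typing import Dict, List

def parse_config(text: str) -> Dict[str, object]:
    """Parse the documented leading fields of config_file.txt.

    Lines beyond the seventh are returned unnamed in "remaining_rows",
    since their meaning is not listed in the truncated README.
    """
    rows: List[List[float]] = [
        [float(token) for token in line.split()]
        for line in text.splitlines()
        if line.strip()
    ]
    if len(rows) < 7:
        raise ValueError("expected at least 7 non-empty lines")
    return {
        "image_size": (int(rows[0][0]), int(rows[0][1])),
        "x_range": (rows[1][0], rows[1][1]),  # x- x+
        "y_range": (rows[2][0], rows[2][1]),  # y- y+
        "z_range": (rows[3][0], rows[3][1]),  # z- z+
        "cloud_intensity_threshold": rows[4][0],
        "number_of_markers": int(rows[5][0]),
        # Stored as an integer; treating it as a 0/1 flag is an assumption.
        "use_camera_info_topic": int(rows[6][0]),
        "remaining_rows": rows[7:],
    }

EXAMPLE = """\
1280 720
-2.5 2.5
-4.0 4.0
0.0 2.5
0.05
2
0
611.651245 0.0 642.388357 0.0
0.0 688.443726 365.971718 0.0
0.0 0.0 1.0 0.0
1.57 -1.57 0.0
0
"""

config = parse_config(EXAMPLE)
print(config["image_size"], config["number_of_markers"])
```

Keeping the undocumented rows as raw data avoids attaching names to values whose meaning is only given in the full README.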

CONTRIBUTING

Repository Summary

| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-04-04 |

| Dev Status | UNMAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful and would like to contribute? :smile:

See how you can contribute and make this package better for future users. Go to Contributing section. :hugs:

ROS package to calibrate a camera and a LiDAR.

The package is used to calibrate a LiDAR (config to support Hesai and Velodyne hardware) with a camera (works for both monocular and stereo).

The package finds a rotation and translation that transform all the points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. The lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras. Both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We show the accuracy of the proposed pipeline by fusing point clouds, with near perfection, from multiple cameras kept in various positions. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,

author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.

},

title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

eprint = {1705.09785},

primaryClass = "cs.RO",

keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},

year = 2017,

month = may

}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

-

Results and point cloud fusion using

lidar_camera_calibration:checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu Distrubtion here on the Wiki

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project consider submitting a pull request. That way future users can find this package useful just like you did. Here is a non-exhaustive list of features that can be a good starting point:

-

Iterative process with

weightedaverage over multiple runs - Passing Workflows for Kinetic, Melodic and Noetic

- Hesai and Velodyne LiDAR options (see Getting Started)

- Integrate LiDAR hardware from other manufacturers

- Automate process of marking line-segments

- Github Workflow with functional test on dummy data

- Support for upcoming Linux Distros

- Support for running the package in ROS2

- Tests to improve the quality of the project

Getting Started

There are a couple of configuration files that need to be specfied in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720

-2.5 2.5

-4.0 4.0

0.0 2.5

0.05

2

0

611.651245 0.0 642.388357 0.0

0.0 688.443726 365.971718 0.0

0.0 0.0 1.0 0.0

1.57 -1.57 0.0

0

The file contains specifications about the following:

image_width image_height

x- x+

y- y+

z- z+

cloud_intensity_threshold

number_of_markers

use_camera_info_topic?

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-04-04 |

| Dev Status | UNMAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful and would like to contribute? :smile:

See how you can contribute and make this package better for future users. Go to Contributing section. :hugs:

ROS package to calibrate a camera and a LiDAR.

The package is used to calibrate a LiDAR (config to support Hesai and Velodyne hardware) with a camera (works for both monocular and stereo).

The package finds a rotation and translation that transform all the points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. The lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras. Both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We show the accuracy of the proposed pipeline by fusing point clouds, with near perfection, from multiple cameras kept in various positions. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,

author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.

},

title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

eprint = {1705.09785},

primaryClass = "cs.RO",

keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},

year = 2017,

month = may

}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

-

Results and point cloud fusion using

lidar_camera_calibration:checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu Distrubtion here on the Wiki

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project consider submitting a pull request. That way future users can find this package useful just like you did. Here is a non-exhaustive list of features that can be a good starting point:

-

Iterative process with

weightedaverage over multiple runs - Passing Workflows for Kinetic, Melodic and Noetic

- Hesai and Velodyne LiDAR options (see Getting Started)

- Integrate LiDAR hardware from other manufacturers

- Automate process of marking line-segments

- Github Workflow with functional test on dummy data

- Support for upcoming Linux Distros

- Support for running the package in ROS2

- Tests to improve the quality of the project

Getting Started

There are a couple of configuration files that need to be specfied in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720

-2.5 2.5

-4.0 4.0

0.0 2.5

0.05

2

0

611.651245 0.0 642.388357 0.0

0.0 688.443726 365.971718 0.0

0.0 0.0 1.0 0.0

1.57 -1.57 0.0

0

The file contains specifications about the following:

image_width image_height

x- x+

y- y+

z- z+

cloud_intensity_threshold

number_of_markers

use_camera_info_topic?

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-04-04 |

| Dev Status | UNMAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful and would like to contribute? :smile:

See how you can contribute and make this package better for future users. Go to Contributing section. :hugs:

ROS package to calibrate a camera and a LiDAR.

The package is used to calibrate a LiDAR (config to support Hesai and Velodyne hardware) with a camera (works for both monocular and stereo).

The package finds a rotation and translation that transform all the points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. The lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras. Both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We show the accuracy of the proposed pipeline by fusing point clouds, with near perfection, from multiple cameras kept in various positions. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,

author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.

},

title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

eprint = {1705.09785},

primaryClass = "cs.RO",

keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},

year = 2017,

month = may

}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

-

Results and point cloud fusion using

lidar_camera_calibration:checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu Distrubtion here on the Wiki

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project consider submitting a pull request. That way future users can find this package useful just like you did. Here is a non-exhaustive list of features that can be a good starting point:

-

Iterative process with

weightedaverage over multiple runs - Passing Workflows for Kinetic, Melodic and Noetic

- Hesai and Velodyne LiDAR options (see Getting Started)

- Integrate LiDAR hardware from other manufacturers

- Automate process of marking line-segments

- Github Workflow with functional test on dummy data

- Support for upcoming Linux Distros

- Support for running the package in ROS2

- Tests to improve the quality of the project

Getting Started

There are a couple of configuration files that need to be specfied in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720

-2.5 2.5

-4.0 4.0

0.0 2.5

0.05

2

0

611.651245 0.0 642.388357 0.0

0.0 688.443726 365.971718 0.0

0.0 0.0 1.0 0.0

1.57 -1.57 0.0

0

The file contains specifications about the following:

image_width image_height

x- x+

y- y+

z- z+

cloud_intensity_threshold

number_of_markers

use_camera_info_topic?

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-04-04 |

| Dev Status | UNMAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful and would like to contribute? :smile:

See how you can contribute and make this package better for future users. Go to Contributing section. :hugs:

ROS package to calibrate a camera and a LiDAR.

The package is used to calibrate a LiDAR (config to support Hesai and Velodyne hardware) with a camera (works for both monocular and stereo).

The package finds a rotation and translation that transform all the points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. The lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras. Both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We show the accuracy of the proposed pipeline by fusing point clouds, with near perfection, from multiple cameras kept in various positions. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,

author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.

},

title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

eprint = {1705.09785},

primaryClass = "cs.RO",

keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},

year = 2017,

month = may

}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

-

Results and point cloud fusion using

lidar_camera_calibration:checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu Distrubtion here on the Wiki

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project consider submitting a pull request. That way future users can find this package useful just like you did. Here is a non-exhaustive list of features that can be a good starting point:

-

Iterative process with

weightedaverage over multiple runs - Passing Workflows for Kinetic, Melodic and Noetic

- Hesai and Velodyne LiDAR options (see Getting Started)

- Integrate LiDAR hardware from other manufacturers

- Automate process of marking line-segments

- Github Workflow with functional test on dummy data

- Support for upcoming Linux Distros

- Support for running the package in ROS2

- Tests to improve the quality of the project

Getting Started

There are a couple of configuration files that need to be specfied in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720

-2.5 2.5

-4.0 4.0

0.0 2.5

0.05

2

0

611.651245 0.0 642.388357 0.0

0.0 688.443726 365.971718 0.0

0.0 0.0 1.0 0.0

1.57 -1.57 0.0

0

The file contains specifications about the following:

image_width image_height

x- x+

y- y+

z- z+

cloud_intensity_threshold

number_of_markers

use_camera_info_topic?

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-04-04 |

| Dev Status | UNMAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful and would like to contribute? :smile:

See how you can contribute and make this package better for future users. Go to Contributing section. :hugs:

ROS package to calibrate a camera and a LiDAR.

The package is used to calibrate a LiDAR (config to support Hesai and Velodyne hardware) with a camera (works for both monocular and stereo).

The package finds a rotation and translation that transform all the points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. The lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras. Both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We show the accuracy of the proposed pipeline by fusing point clouds, with near perfection, from multiple cameras kept in various positions. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,

author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.

},

title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

eprint = {1705.09785},

primaryClass = "cs.RO",

keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},

year = 2017,

month = may

}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

-

Results and point cloud fusion using

lidar_camera_calibration:checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu Distrubtion here on the Wiki

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project consider submitting a pull request. That way future users can find this package useful just like you did. Here is a non-exhaustive list of features that can be a good starting point:

-

Iterative process with

weightedaverage over multiple runs - Passing Workflows for Kinetic, Melodic and Noetic

- Hesai and Velodyne LiDAR options (see Getting Started)

- Integrate LiDAR hardware from other manufacturers

- Automate process of marking line-segments

- Github Workflow with functional test on dummy data

- Support for upcoming Linux Distros

- Support for running the package in ROS2

- Tests to improve the quality of the project

Getting Started

There are a couple of configuration files that need to be specfied in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720

-2.5 2.5

-4.0 4.0

0.0 2.5

0.05

2

0

611.651245 0.0 642.388357 0.0

0.0 688.443726 365.971718 0.0

0.0 0.0 1.0 0.0

1.57 -1.57 0.0

0

The file contains specifications about the following:

image_width image_height

x- x+

y- y+

z- z+

cloud_intensity_threshold

number_of_markers

use_camera_info_topic?

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-04-04 |

| Dev Status | UNMAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful and would like to contribute? :smile:

See how you can contribute and make this package better for future users. Go to Contributing section. :hugs:

ROS package to calibrate a camera and a LiDAR.

The package is used to calibrate a LiDAR (config to support Hesai and Velodyne hardware) with a camera (works for both monocular and stereo).

The package finds a rotation and translation that transform all the points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. The lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras. Both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We show the accuracy of the proposed pipeline by fusing point clouds, with near perfection, from multiple cameras kept in various positions. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,

author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.

},

title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

eprint = {1705.09785},

primaryClass = "cs.RO",

keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},

year = 2017,

month = may

}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

-

Results and point cloud fusion using

lidar_camera_calibration:checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu Distrubtion here on the Wiki

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project consider submitting a pull request. That way future users can find this package useful just like you did. Here is a non-exhaustive list of features that can be a good starting point:

-

Iterative process with

weightedaverage over multiple runs - Passing Workflows for Kinetic, Melodic and Noetic

- Hesai and Velodyne LiDAR options (see Getting Started)

- Integrate LiDAR hardware from other manufacturers

- Automate process of marking line-segments

- Github Workflow with functional test on dummy data

- Support for upcoming Linux Distros

- Support for running the package in ROS2

- Tests to improve the quality of the project

Getting Started

There are a couple of configuration files that need to be specfied in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720

-2.5 2.5

-4.0 4.0

0.0 2.5

0.05

2

0

611.651245 0.0 642.388357 0.0

0.0 688.443726 365.971718 0.0

0.0 0.0 1.0 0.0

1.57 -1.57 0.0

0

The file contains specifications about the following:

image_width image_height

x- x+

y- y+

z- z+

cloud_intensity_threshold

number_of_markers

use_camera_info_topic?

File truncated at 100 lines see the full file

CONTRIBUTING

Repository Summary

| Checkout URI | https://github.com/ankitdhall/lidar_camera_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2023-04-04 |

| Dev Status | UNMAINTAINED |

| Released | UNRELEASED |

| Contributing |

Help Wanted (-)

Good First Issues (-) Pull Requests to Review (-) |

Packages

| Name | Version |

|---|---|

| lidar_camera_calibration | 0.0.1 |

README

LiDAR-Camera Calibration using 3D-3D Point correspondences

Ankit Dhall, Kunal Chelani, Vishnu Radhakrishnan, KM Krishna

Did you find this package useful and would like to contribute? :smile:

See how you can contribute and make this package better for future users. Go to Contributing section. :hugs:

ROS package to calibrate a camera and a LiDAR.

The package is used to calibrate a LiDAR (config to support Hesai and Velodyne hardware) with a camera (works for both monocular and stereo).

The package finds a rotation and translation that transform all the points in the LiDAR frame to the (monocular) camera frame. Please see Usage for a video tutorial. The lidar_camera_calibration/pointcloud_fusion provides a script to fuse point clouds obtained from two stereo cameras. Both of which were extrinsically calibrated using a LiDAR and lidar_camera_calibration. We show the accuracy of the proposed pipeline by fusing point clouds, with near perfection, from multiple cameras kept in various positions. See Fusion using lidar_camera_calibration for results of the point cloud fusion (videos).

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,

author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.

},

title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",

journal = {ArXiv e-prints},

archivePrefix = "arXiv",

eprint = {1705.09785},

primaryClass = "cs.RO",

keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},

year = 2017,

month = may

}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

-

Results and point cloud fusion using

lidar_camera_calibration:checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu Distrubtion here on the Wiki

Contributing

As an open-source project, your contributions matter! If you would like to contribute and improve this project consider submitting a pull request. That way future users can find this package useful just like you did. Here is a non-exhaustive list of features that can be a good starting point:

-

Iterative process with

weightedaverage over multiple runs - Passing Workflows for Kinetic, Melodic and Noetic

- Hesai and Velodyne LiDAR options (see Getting Started)

- Integrate LiDAR hardware from other manufacturers

- Automate process of marking line-segments

- Github Workflow with functional test on dummy data

- Support for upcoming Linux Distros

- Support for running the package in ROS2

- Tests to improve the quality of the project

Getting Started

There are a couple of configuration files that need to be specfied in order to calibrate the camera and the LiDAR. The config files are available in the lidar_camera_calibration/conf directory. The find_transform.launch file is available in the lidar_camera_calibration/launch directory.

config_file.txt

1280 720

-2.5 2.5

-4.0 4.0

0.0 2.5

0.05

2

0

611.651245 0.0 642.388357 0.0

0.0 688.443726 365.971718 0.0

0.0 0.0 1.0 0.0

1.57 -1.57 0.0

0

The file contains specifications about the following:

image_width image_height

x- x+

y- y+

z- z+

cloud_intensity_threshold

number_of_markers

use_camera_info_topic?

File truncated at 100 lines see the full file
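As a rough illustration of the layout described above, the following Python sketch reads the first seven lines of a config_file.txt into named fields. It is not part of the package: the `CalibrationConfig` class and `parse_config` function are hypothetical names, and treating `use_camera_info_topic?` as a 0/1 flag is an assumption. Lines after the seventh are not described in the truncated listing, so the sketch keeps them as raw text rather than guessing their meaning.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CalibrationConfig:
    """Hypothetical container for the documented config_file.txt fields."""
    image_size: Tuple[int, int]        # image_width image_height
    x_range: Tuple[float, float]       # x- x+
    y_range: Tuple[float, float]       # y- y+
    z_range: Tuple[float, float]       # z- z+
    cloud_intensity_threshold: float
    number_of_markers: int
    use_camera_info_topic: bool        # assumption: the file stores a 0/1 flag
    remaining_lines: List[str]         # undocumented here, kept unparsed


def parse_config(text: str) -> CalibrationConfig:
    # Drop blank lines so the file may be single- or double-spaced.
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if len(rows) < 7:
        raise ValueError("expected at least 7 non-empty lines")

    def pair(row: List[str]) -> Tuple[float, float]:
        low, high = (float(v) for v in row)
        return (low, high)

    width, height = (int(v) for v in rows[0])
    return CalibrationConfig(
        image_size=(width, height),
        x_range=pair(rows[1]),
        y_range=pair(rows[2]),
        z_range=pair(rows[3]),
        cloud_intensity_threshold=float(rows[4][0]),
        number_of_markers=int(rows[5][0]),
        use_camera_info_topic=bool(int(rows[6][0])),
        remaining_lines=[" ".join(row) for row in rows[7:]],
    )


# The sample values shown in the README.
SAMPLE = """\
1280 720
-2.5 2.5
-4.0 4.0
0.0 2.5
0.05
2
0
611.651245 0.0 642.388357 0.0
0.0 688.443726 365.971718 0.0
0.0 0.0 1.0 0.0
1.57 -1.57 0.0
0
"""

if __name__ == "__main__":
    config = parse_config(SAMPLE)
    print(config.image_size, config.number_of_markers)
    print(len(config.remaining_lines), "lines left unparsed")
```

Keeping the undocumented lines as raw strings means the sketch makes no claim about what they contain; consult the full README before interpreting them.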

For more details please refer to our paper.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach help your research.

@article{2017arXiv170509785D,
  author = {{Dhall}, A. and {Chelani}, K. and {Radhakrishnan}, V. and {Krishna}, K.~M.},
  title = "{LiDAR-Camera Calibration using 3D-3D Point correspondences}",
  journal = {ArXiv e-prints},
  archivePrefix = "arXiv",
  eprint = {1705.09785},
  primaryClass = "cs.RO",
  keywords = {Computer Science - Robotics, Computer Science - Computer Vision and Pattern Recognition},
  year = 2017,
  month = may
}

Contents

- Setup and Installation :hammer_and_wrench:

- Contributing :hugs:

- Getting Started :zap:

- Usage :beginner:

- Results and point cloud fusion using lidar_camera_calibration :checkered_flag:

Setup and Installation

Please follow the installation instructions for your Ubuntu distribution on the Wiki.

Citing lidar_camera_calibration

Please cite our work if lidar_camera_calibration and our approach helps your research.

@article{2017arXiv170509785D,